Question:

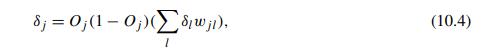

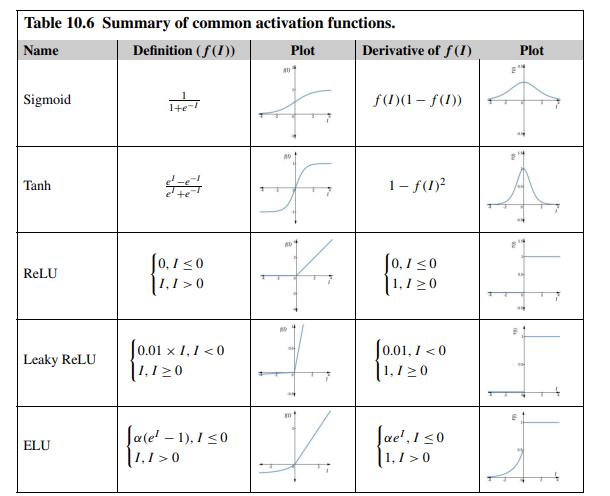

a. Derivatives of various activation functions. Show how the derivatives of activation functions, including sigmoid, tanh, and ELU in Table 10.6, are derived in mathematical details.

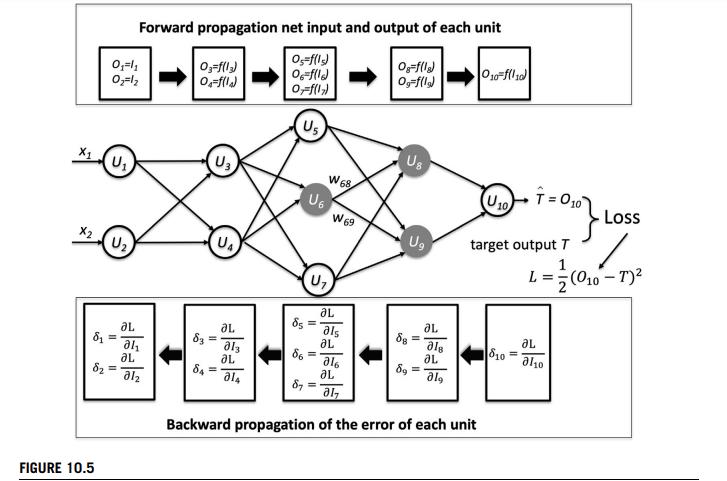
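One way to approach part a is sketched below. Table 10.6 is not reproduced in this text, so the definitions are assumptions: the standard forms of sigmoid and tanh, and ELU with a positive parameter $\alpha$. If the table uses different symbols or a different ELU variant, the steps would need to be adapted.

```latex
% Sigmoid: \sigma(x) = (1 + e^{-x})^{-1}  (assumed standard definition)
\begin{align*}
\sigma'(x) &= \frac{e^{-x}}{(1 + e^{-x})^{2}}
            = \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}}
            = \sigma(x)\bigl(1 - \sigma(x)\bigr)
\end{align*}

% tanh: \tanh(x) = (e^{x} - e^{-x}) / (e^{x} + e^{-x}), by the quotient rule
\begin{align*}
\tanh'(x) &= \frac{(e^{x} + e^{-x})^{2} - (e^{x} - e^{-x})^{2}}{(e^{x} + e^{-x})^{2}}
           = 1 - \tanh^{2}(x)
\end{align*}

% ELU (assumed form): f(x) = x for x > 0, and \alpha (e^{x} - 1) for x \le 0
\begin{align*}
f'(x) &=
\begin{cases}
1, & x > 0 \\
\alpha e^{x} = f(x) + \alpha, & x < 0
\end{cases}
\end{align*}
% At x = 0 the left derivative is \alpha and the right derivative is 1,
% so the two sides agree only when \alpha = 1.
```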

b. Backpropagation algorithm. Consider a multilayer feed-forward neural network as shown in Fig. 10.5 with sigmoid activation and a mean-squared loss function. Prove (1) Eq. (10.3) for computing the error in the output unit; (2) Eq. (10.4) for computing the errors in the hidden units (hint: consider the chain rule); and (3) Eq. (10.5) for updating the weights (hint: consider the derivative of the loss with respect to the weights).
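The results in part b can be sanity-checked numerically before attempting the proof. Eqs. (10.3) to (10.5) and Fig. 10.5 are not reproduced in this text, so the sketch below assumes their common textbook form: output error `O(1 - O)(T - O)`, hidden error `O_j(1 - O_j) * sum_k(delta_k * w_jk)`, and weight change `eta * delta_j * O_i`, with loss `0.5 * (T - O)^2`. The network size, the weights, and all function names are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, w2, b2):
    """Inputs -> sigmoid hidden layer -> single sigmoid output unit."""
    hidden = [sigmoid(sum(x[i] * W1[i][j] for i in range(len(x))) + b1[j])
              for j in range(len(b1))]
    out = sigmoid(sum(hidden[j] * w2[j] for j in range(len(w2))) + b2)
    return hidden, out

def loss(x, t, W1, b1, w2, b2):
    """Assumed loss: half the squared error for one training tuple."""
    _, out = forward(x, W1, b1, w2, b2)
    return 0.5 * (t - out) ** 2

def deltas(x, t, W1, b1, w2, b2):
    """Errors in the assumed form of Eqs. (10.3) and (10.4)."""
    hidden, out = forward(x, W1, b1, w2, b2)
    delta_out = out * (1 - out) * (t - out)
    delta_hidden = [h * (1 - h) * delta_out * w2[j]
                    for j, h in enumerate(hidden)]
    return hidden, delta_out, delta_hidden

# Arbitrary illustrative values (not taken from the textbook).
x, t = [1.0, 0.5, -1.0], 1.0
W1 = [[0.2, -0.3], [0.4, 0.1], [-0.5, 0.2]]
b1 = [-0.4, 0.2]
w2, b2 = [-0.3, -0.2], 0.1

hidden, delta_out, delta_hidden = deltas(x, t, W1, b1, w2, b2)
eps = 1e-6
max_err = 0.0

# Check dL/dw_ij = -delta_j * O_i for the input-to-hidden weights.
for i in range(len(x)):
    for j in range(len(b1)):
        Wp = [row[:] for row in W1]
        Wm = [row[:] for row in W1]
        Wp[i][j] += eps
        Wm[i][j] -= eps
        num = (loss(x, t, Wp, b1, w2, b2) - loss(x, t, Wm, b1, w2, b2)) / (2 * eps)
        max_err = max(max_err, abs(num + delta_hidden[j] * x[i]))

# Check dL/dw_j = -delta_out * O_j for the hidden-to-output weights.
for j in range(len(w2)):
    wp, wm = w2[:], w2[:]
    wp[j] += eps
    wm[j] -= eps
    num = (loss(x, t, W1, b1, wp, b2) - loss(x, t, W1, b1, wm, b2)) / (2 * eps)
    max_err = max(max_err, abs(num + delta_out * hidden[j]))

print("largest gap between numeric and analytic gradients:", max_err)
```

A small gap here indicates that each `delta` equals the negative derivative of the loss with respect to that unit's net input, which is what the chain-rule hint points at. The weight change `eta * delta_j * O_i` is then a gradient-descent step on that loss.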

c. Relation between different activation functions. Given the sigmoid function $\sigma(x) = \frac{1}{1 + e^{-x}}$ and the hyperbolic tangent function $\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$, show mathematically how tanh can be obtained from sigmoid through shifting and rescaling.
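A short derivation for part c, assuming the standard definitions $\sigma(x) = 1/(1 + e^{-x})$ and $\tanh(x) = (e^{x} - e^{-x})/(e^{x} + e^{-x})$: scale the input of the sigmoid by 2, scale its output by 2, and shift the result down by 1.

```latex
\begin{align*}
2\,\sigma(2x) - 1
  &= \frac{2}{1 + e^{-2x}} - 1 \\
  &= \frac{1 - e^{-2x}}{1 + e^{-2x}} \\
  &= \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}
     \qquad \text{(multiply numerator and denominator by } e^{x}\text{)} \\
  &= \tanh(x)
\end{align*}
```

The rescaling maps the sigmoid's output range $(0, 1)$ onto $(-1, 1)$, which matches the range of tanh.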

Step by Step Answer:

Data Mining: Concepts and Techniques

ISBN: 9780128117613

4th Edition

Authors: Jiawei Han, Jian Pei, Hanghang Tong