Consider the following piece of C code: for (j = 2;jD[j] = D[j 1]+D[j 2];

Question:

Consider the following piece of C code:

for (j = 2;jD[j] = D[j − 1]+D[j − 2];

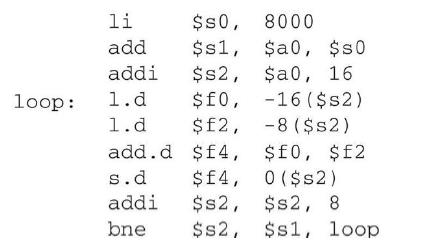

The MIPS code corresponding to the above fragment is:

Instructions have the following associated latencies (in cycles):

![]()

1. How many cycles does it take to execute this code?

2. Reorder the code to reduce stalls. Now, how many cycles does it take to execute this code? (Hint: You can remove additional stalls by changing the offset on the fsd instruction.)

3. When an instruction in a later iteration of a loop depends upon a data value produced in an earlier iteration of the same loop, we say that there is a loop carried dependence between iterations of the loop. Identify the loopcarried dependences in the above code. Identify the dependent program variable and assembly-level registers. You can ignore the loop induction variable j.

4. Rewrite the code by using registers to carry the data between iterations of the loop (as opposed to storing and reloading the data from main memory). Show where this code stalls, and calculate the number of cycles required to execute. Note that for this problem you will need to use the assembler pseudoinstruction "mov.d rd, rs", which writes the value of floating-point register rs1 into floating-point register rd. Assume that mov, d executes in a single cycle.

5. Loop unrolling was described in Chapter 4. Unroll and optimize the loop above so that each unrolled loop handles three iterations of the original loop. Show where this code stalls and calculate the number of cycles required to execute.

6. The unrolling from Exercise 6.4.5. works nicely because we happen to want a multiple of three iterations. What happens if the number of iterations is not known at compile time? How can we efficiently handle a number of iterations that is not a multiple of the number of iterations per unrolled loop?

7. Consider running this code on a two-node distributed memory message passing system. Assume that we are going to use message passing as described in Section 6.7, where we introduce a new operation send (x, ty) that sends to node x the value y, and an operation receive() that waits for the value being sent to it. Assume that send operations take one cycle to issue (i.e., later instructions on the same node can proceed on the next cycle) but take several cycles to be received on the receiving node. Receive instructions stall execution on the node where they are executed until they receive a message. Can you use such a system to speedup the code for this exercise? If so, what is the maximum latency for receiving information that can be tolerated? If not, why not?

Step by Step Answer:

Computer Organization And Design MIPS Edition The Hardware/Software Interface

ISBN: 9780128201091

6th Edition

Authors: David A. Patterson, John L. Hennessy