
Question:

The entropy \(H(X)\) of a discrete random variable \(X\) measures the uncertainty about predicting the value of \(X\) (Cover & Thomas, 2006). If \(X\) has the probability distribution \(p_{X}(x)\), its entropy is determined by

\[H(X)=-\sum_{x} p_{X}(x) \log _{b} p_{X}(x)\]

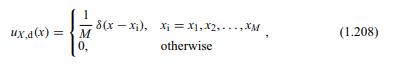

where the base \(b\) of the logarithm determines the entropy unit. If \(b=2\), for instance, the entropy is measured in bits/symbol. Determine, in bits/symbol, the entropy of a random variable \(X\) characterized by the discrete uniform distribution \(u_{X,\mathrm{d}}(x)\) given in Equation (1.208).
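Equation (1.208) is not reproduced here, so the following is a sketch under an assumption: that \(u_{X,\mathrm{d}}(x)\) assigns the same probability \(1/M\) to each of \(M\) possible values of \(X\) and zero elsewhere. Substituting into the entropy definition with \(b=2\) then gives

\[H(X)=-\sum_{x} u_{X,\mathrm{d}}(x)\log_{2} u_{X,\mathrm{d}}(x)=-M\cdot\frac{1}{M}\log_{2}\frac{1}{M}=\log_{2} M \text{ bits/symbol}.\]

For example, \(M=8\) equiprobable values would give \(H(X)=3\) bits/symbol. The Python snippet below evaluates the general formula numerically as a check. The function names `entropy` and `uniform_pmf` are hypothetical helpers for illustration, not taken from the book.

```python
import math
from typing import Iterable


def entropy(probs: Iterable[float], base: float = 2.0) -> float:
    """Return H(X) = -sum_x p(x) log_b p(x) for a discrete pmf.

    Outcomes with zero probability contribute nothing (0 log 0 is taken as 0).
    """
    probs = list(probs)
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must be non-negative and sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)


def uniform_pmf(m: int) -> list[float]:
    """Hypothetical helper: M equiprobable outcomes, each with probability 1/M
    (this is the ASSUMED form of Equation (1.208))."""
    return [1.0 / m] * m


if __name__ == "__main__":
    # Compare the numerical entropy with the closed form log2(M).
    for m in (2, 4, 8, 10):
        print(m, entropy(uniform_pmf(m)), math.log2(m))
```

Any non-uniform pmf over the same \(M\) values yields a smaller entropy than \(\log_2 M\), which is why the uniform case gives the maximum uncertainty.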



Related book:

Digital Signal Processing: System Analysis and Design

ISBN: 9780521887755

2nd Edition

Authors: Paulo S. R. Diniz, Eduardo A. B. Da Silva, Sergio L. Netto
