Question:

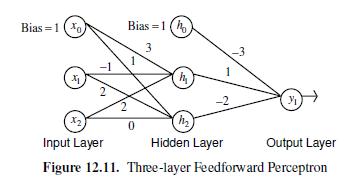

12.6 The three-layer Perceptron network shown in Figure 12.11, when properly trained, should respond with a desired output $d = 0.9$ at $y_1$ to the augmented input vector $\mathbf{x} = [1, x_1, x_2]^T = [1, 1, 3]^T$. The network weights have been initialised as shown in Figure 12.11. Assume sigmoidal activation functions at the outputs of the hidden and output nodes, learning gains of $\eta = \mu = 0.1$, and no momentum term. Analyse a single feedforward and backpropagation step for the initialised network by doing the following:

a. Give the weight matrices W (input-hidden) and U (hidden-output).

b. Calculate the output of the hidden layer, $\mathbf{h} = [1, h_1, h_2]^T$, and the output $y_1$.

c. Compute the error signals $\delta_{y1}$, $\delta_{h1}$ and $\delta_{h2}$.

d. Compute all nine weight updates, $\Delta w_{ij}$ and $\Delta u_{ij}$.
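
For reference, parts b to d can be worked with the standard delta rule for sigmoidal units. The relations below are a sketch under assumed conventions that the question itself does not fix: $w_{ij}$ is taken as the weight from input $i$ to hidden node $j$, $u_{j1}$ as the weight from hidden node $j$ to output $y_1$, $\eta$ as the output-layer gain and $\mu$ as the hidden-layer gain. Figure 12.11 may index the weights differently. The relations use the sigmoid property $f'(z) = f(z)\,(1 - f(z))$.

$$
\begin{aligned}
h_j &= f\Big(\sum_{i=0}^{2} w_{ij}\, x_i\Big), \quad j = 1, 2, \qquad h_0 = 1 \\
y_1 &= f\Big(\sum_{j=0}^{2} u_{j1}\, h_j\Big) \\
\delta_{y1} &= (d - y_1)\, y_1\, (1 - y_1) \\
\delta_{hj} &= h_j\, (1 - h_j)\, u_{j1}\, \delta_{y1}, \quad j = 1, 2 \\
\Delta u_{j1} &= \eta\, \delta_{y1}\, h_j, \qquad \Delta w_{ij} = \mu\, \delta_{hj}\, x_i
\end{aligned}
$$

With three inputs (including the bias) feeding two hidden nodes and three hidden-layer outputs (including the bias) feeding one output node, this gives the six $\Delta w_{ij}$ and three $\Delta u_{j1}$ that make up the nine updates asked for in part d.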

Use the activation function $f(z) = \dfrac{1}{1 + e^{-z}}$.
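
The single feedforward and backpropagation step described in the question can be sketched in Python as follows. The initial weights appear only in Figure 12.11, which is not reproduced here, so the values of `W` and `U` below are hypothetical placeholders and must be replaced with the figure's values before the numbers mean anything. The function name `backprop_step`, the index layout (`W[i][j]` from input `i` to hidden node `j`, `U[j]` from hidden output `j` to `y1`) and the assignment of `eta` to the output layer and `mu` to the hidden layer are likewise assumptions; since both gains equal 0.1, the assignment does not affect the result.

```python
import math


def sigmoid(z):
    """Logistic activation f(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def backprop_step(x, d, W, U, eta=0.1, mu=0.1):
    """One feedforward and backpropagation step, no momentum.

    x : augmented input [1, x1, x2]
    d : desired output at y1
    W : 3x2 list, W[i][j] = weight from input i to hidden node j+1 (assumed layout)
    U : length-3 list, U[j] = weight from hidden output h[j] to y1 (assumed layout)
    """
    # Feedforward: hidden layer is augmented with a constant 1 for the bias.
    h = [1.0] + [sigmoid(sum(W[i][j] * x[i] for i in range(3))) for j in range(2)]
    y1 = sigmoid(sum(U[j] * h[j] for j in range(3)))

    # Error signals, using f'(z) = f(z) * (1 - f(z)).
    delta_y1 = (d - y1) * y1 * (1.0 - y1)
    delta_h = [h[j + 1] * (1.0 - h[j + 1]) * U[j + 1] * delta_y1 for j in range(2)]

    # Weight updates: three for U and six for W, nine in total.
    dU = [eta * delta_y1 * h[j] for j in range(3)]
    dW = [[mu * delta_h[j] * x[i] for j in range(2)] for i in range(3)]
    return h, y1, delta_y1, delta_h, dU, dW


# Hypothetical placeholder weights, NOT the values from Figure 12.11.
W = [[0.1, -0.2], [0.2, 0.1], [-0.1, 0.3]]
U = [0.2, -0.1, 0.3]

h, y1, delta_y1, delta_h, dU, dW = backprop_step([1.0, 1.0, 3.0], 0.9, W, U)
print("h =", h)
print("y1 =", y1)
print("delta_y1 =", delta_y1, "delta_h =", delta_h)
print("dU =", dU)
print("dW =", dW)
```

The returned tuple lines up with parts b to d of the question: `h` and `y1` for part b, `delta_y1` and `delta_h` for part c, and `dU` and `dW` for part d.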

Step by Step Answer: