Question:

Computing the standard inner product between two vectors $a, b \in \mathbb{R}^n$ requires $n$ multiplications and additions. When the dimension $n$ is huge (say, of the order of $10^{12}$ or larger), even computing a simple inner product can be computationally prohibitive.

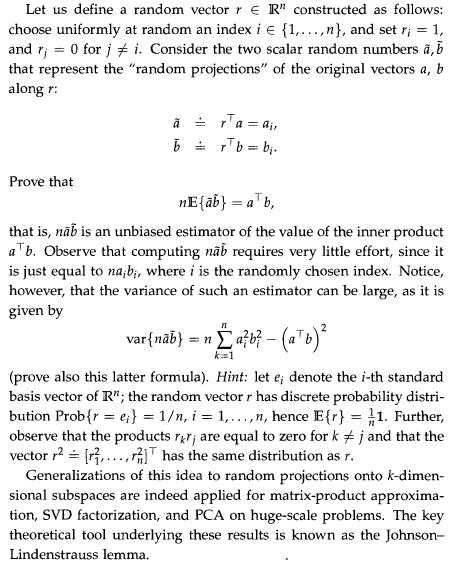

Let us define a random vector $r \in \mathbb{R}^n$ constructed as follows: choose uniformly at random an index $i \in \{1, \dots, n\}$, and set $r_i = 1$ and $r_j = 0$ for $j \neq i$. Consider the two scalar random numbers $\tilde a, \tilde b$ that represent the "random projections" of the original vectors $a, b$ along $r$:

$$\tilde a = r^\top a = a_i, \qquad \tilde b = r^\top b = b_i.$$

Prove that

$$n\,\mathbb{E}\{\tilde a \tilde b\} = a^\top b,$$

that is, $n \tilde a \tilde b$ is an unbiased estimator of the value of the inner product $a^\top b$. Observe that computing $n \tilde a \tilde b$ requires very little effort, since it is just equal to $n a_i b_i$, where $i$ is the randomly chosen index. Notice, however, that the variance of such an estimator can be large, as it is given by

$$\operatorname{var}\{n \tilde a \tilde b\} = n \sum_{k=1}^{n} a_k^2 b_k^2 - (a^\top b)^2$$

(prove also this latter formula).

Hint: let $e_i$ denote the $i$-th standard basis vector of $\mathbb{R}^n$; the random vector $r$ has discrete probability distribution $\mathrm{Prob}\{r = e_i\} = 1/n$, $i = 1, \dots, n$, hence $\mathbb{E}\{r\} = \frac{1}{n}\mathbf{1}$, where $\mathbf{1}$ is the vector of all ones. Further, observe that the products $r_k r_j$ are equal to zero for $k \neq j$, and that the vector $r^2 = [r_1^2, \dots, r_n^2]^\top$ has the same distribution as $r$.

Generalizations of this idea to random projections onto $k$-dimensional subspaces are indeed applied for matrix-product approximation, SVD factorization, and PCA on huge-scale problems. The key theoretical tool underlying these results is known as the Johnson-Lindenstrauss lemma.
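To see both claims numerically, here is a minimal Python sketch. The question contains no code, so the language and the helper names `estimate`, `exact_moments`, `variance_formula` and `monte_carlo_mean` are hypothetical choices made for illustration. It uses 0-based indices in place of $i \in \{1, \dots, n\}$, computes the exact mean and variance of $n a_i b_i$ by enumerating all $n$ equally likely indices, and compares the variance with the closed form $n \sum_k a_k^2 b_k^2 - (a^\top b)^2$. It checks the formulas on examples; it does not prove them.

```python
import random


def estimate(a, b, rng):
    """One draw of the estimator n * a_i * b_i, with i uniform on {0, ..., n-1}.

    Costs O(1) arithmetic instead of the n multiplications and additions
    needed for the exact inner product a^T b.
    """
    n = len(a)
    i = rng.randrange(n)  # the random index; equivalent to drawing r = e_i
    return n * a[i] * b[i]


def exact_moments(a, b):
    """Exact mean and variance of n * a_i * b_i, enumerating all n equally likely indices."""
    n = len(a)
    values = [n * ak * bk for ak, bk in zip(a, b)]  # value taken when index k is drawn
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    return mean, var


def variance_formula(a, b):
    """Closed form n * sum_k a_k^2 b_k^2 - (a^T b)^2."""
    n = len(a)
    dot = sum(ak * bk for ak, bk in zip(a, b))
    return n * sum((ak * bk) ** 2 for ak, bk in zip(a, b)) - dot ** 2


def monte_carlo_mean(a, b, trials, seed=0):
    """Average of `trials` independent single-coordinate estimates."""
    rng = random.Random(seed)
    return sum(estimate(a, b, rng) for _ in range(trials)) / trials


if __name__ == "__main__":
    a = [1, 2, 3, 4]
    b = [2, 0, -1, 5]
    print(exact_moments(a, b))               # (19.0, 1291.0); here a^T b = 19
    print(variance_formula(a, b))            # 1291
    print(monte_carlo_mean(a, b, 200_000))   # random, but close to 19
```

For $a = (1, 2, 3, 4)$ and $b = (2, 0, -1, 5)$ we have $a^\top b = 19$ and a variance of $1291$, so a single draw has a standard deviation of about $36$, almost twice the quantity being estimated; averaging $200{,}000$ independent draws brings the standard error down to roughly $0.08$.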

Step-by-Step Solution



Indeed, when dealing with high-dimensional vectors in $\mathbb{R}^n$, the computational cost of computing the stand...
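One way to carry out both proofs, sketched along the lines of the hint (a reconstruction of the standard argument, not the truncated answer above): since $\mathrm{Prob}\{r = e_k\} = 1/n$, the quantity $n \tilde a \tilde b$ takes the value $n a_k b_k$ with probability $1/n$ for each $k = 1, \dots, n$, so

$$
\begin{aligned}
\mathbb{E}\{n\tilde a \tilde b\} &= \sum_{k=1}^{n} \frac{1}{n}\, n\, a_k b_k = \sum_{k=1}^{n} a_k b_k = a^\top b,\\
\mathbb{E}\{(n\tilde a \tilde b)^2\} &= \sum_{k=1}^{n} \frac{1}{n}\, n^2 a_k^2 b_k^2 = n \sum_{k=1}^{n} a_k^2 b_k^2,\\
\operatorname{var}\{n\tilde a \tilde b\} &= \mathbb{E}\{(n\tilde a \tilde b)^2\} - \big(\mathbb{E}\{n\tilde a \tilde b\}\big)^2 = n \sum_{k=1}^{n} a_k^2 b_k^2 - (a^\top b)^2.
\end{aligned}
$$

Equivalently, in the hint's notation, $\tilde a \tilde b = \sum_{k,j} r_k r_j a_k b_j = \sum_{k} r_k^2 a_k b_k$ because $r_k r_j = 0$ for $k \neq j$; since the vector with entries $r_k^2$ is distributed like $r$ and $\mathbb{E}\{r\} = \frac{1}{n}\mathbf{1}$, this gives $\mathbb{E}\{\tilde a \tilde b\} = \frac{1}{n} a^\top b$. For instance, with $a = b = e_1$ the estimator equals $n$ with probability $1/n$ and $0$ otherwise, so its mean is $1 = a^\top b$ while its variance is $n - 1$.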
