Answered step by step

Verified Expert Solution

Question

1 Approved Answer

1 Formal Definition Recall a pool-based teacher cannot create arbitrary data points. Instead, the teacher is given a pool of data points P =

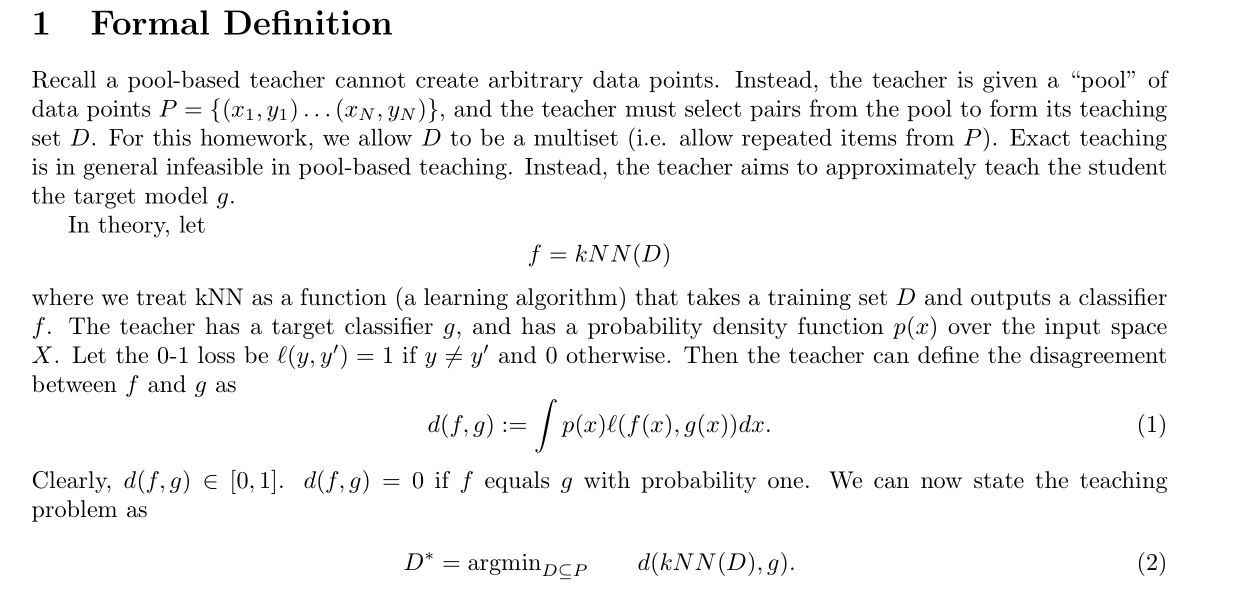

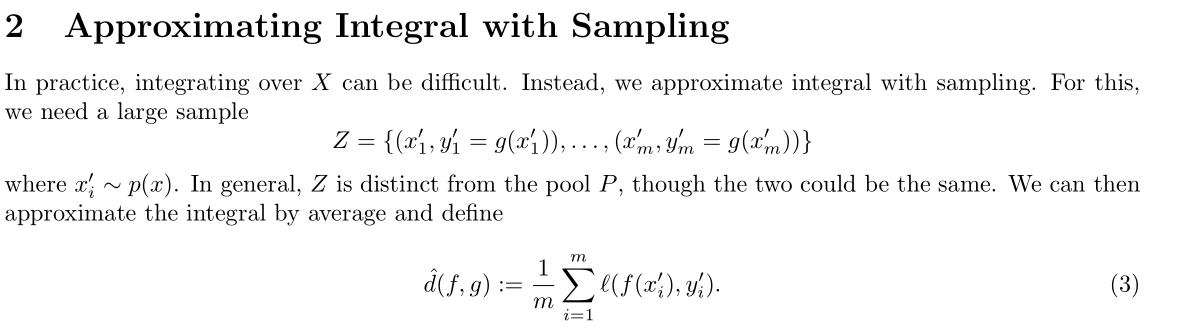

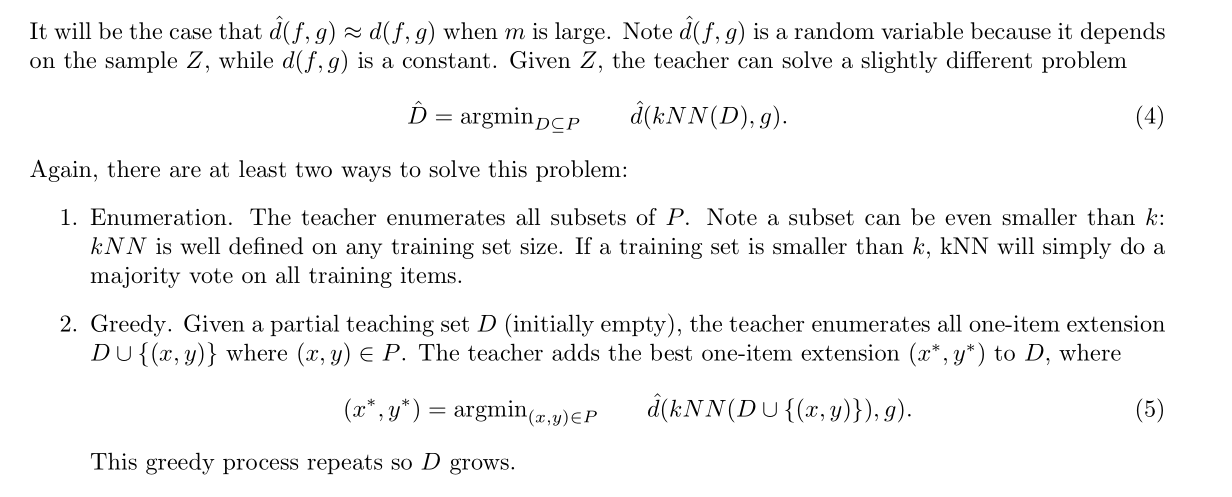

1 Formal Definition Recall a pool-based teacher cannot create arbitrary data points. Instead, the teacher is given a "pool" of data points P = {(x1,y1)... (xN, YN)}, and the teacher must select pairs from the pool to form its teaching set D. For this homework, we allow D to be a multiset (i.e. allow repeated items from P). Exact teaching is in general infeasible in pool-based teaching. Instead, the teacher aims to approximately teach the student the target model g. In theory, let f = kNN(D) where we treat kNN as a function (a learning algorithm) that takes a training set D and outputs a classifier f. The teacher has a target classifier g, and has a probability density function p(x) over the input space X. Let the 0-1 loss be l(y, y') = 1 if y y' and 0 otherwise. Then the teacher can define the disagreement between f and gas d(f,g) := [ p(x)l(f(x), g(x))dx. (1) Clearly, d(f,g) [0,1]. d(f,g) = 0 if equals 9 with probability one. We can now state the teaching problem as D* = argmin DCP d(kNN(D), g). (2) 2 Approximating Integral with Sampling In practice, integrating over X can be difficult. Instead, we approximate integral with sampling. For this, we need a large sample Z = {(x1,y1 = g(x1)), . . ., (x'm, Y'm = g(x'm))} where x ~ p(x). In general, Z is distinct from the pool P, though the two could be the same. We can then approximate the integral by average and define (f,g): == 1 m m l (f(x^{), yk). (3) i=1 It will be the case that d(f, g) d(f, g) when m is large. Note d(f, g) is a random variable because it depends on the sample Z, while d(f, g) is a constant. Given Z, the teacher can solve a slightly different problem D = argmin DCP Again, there are at least two ways to solve this problem: (kNN(D), g). (4) 1. Enumeration. The teacher enumerates all subsets of P. Note a subset can be even smaller than k: kNN is well defined on any training set size. If a training set is smaller than k, kNN will simply do a majority vote on all training items. 2. Greedy. Given a partial teaching set D (initially empty), the teacher enumerates all one-item extension DU {(x, y)} where (x, y) = P. The teacher adds the best one-item extension (x*, y*) to D, where (x*, y*) = argmin(x,y) EP This greedy process repeats so D grows. (kNN(DU {(x, y)}), g). 3 Adding a Teaching Cost The teacher's objective so far is to guide the student close to g. Finally, we introduce the notation of a "teaching cost" function c(D) which specifies how costly it is for the teacher to use a teaching set D. The new teaching problem is D= argminDCP (kNN(D), g) + c(D). (6) c(D) can be thought of as a "regularizer" in the data space that encourages cheap teaching sets. For this homework, we will consider a particularly simple teaching cost: c(D) = 0 if |D| n*, and otherwise, for a given threshold n*. This simply prevents D from being larger than n*. For enumeration, you will consider subsets of size at most n*; for greedy, simply stop after size n*. 4 Hand in For this homework let k = 1 (INN), n* = 20, P = Z=hw5data.txt. Each row x1 x2 y is a data item with 2D feature (x1, x2) and binary label y. Implement both enumeration and greedy. For each teaching set size, show: 1. the number of teaching sets of that size that you have to search through; 2. number of seconds it takes for that size; 3. (kNN(D), g) of the best teaching set of that size 4. for enumeration, plot the best teaching set D in relation to P (i.e. plot both, but use different symbols for D) For greedy, only plot one figure for the last teaching set D with size n* in relation to P, but see if you can mark the order of teaching items entering the greedy teaching set (e.g. use numbers instead of symbols to show items in D). Again you may not be able to enumerate teaching sets of large size, and that is OK.

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started