Answered step by step

Verified Expert Solution

Question

1 Approved Answer

1. Random Sampling a data stream - 75 points The Problem Statement: Your data is a stream of items of unknown length that we

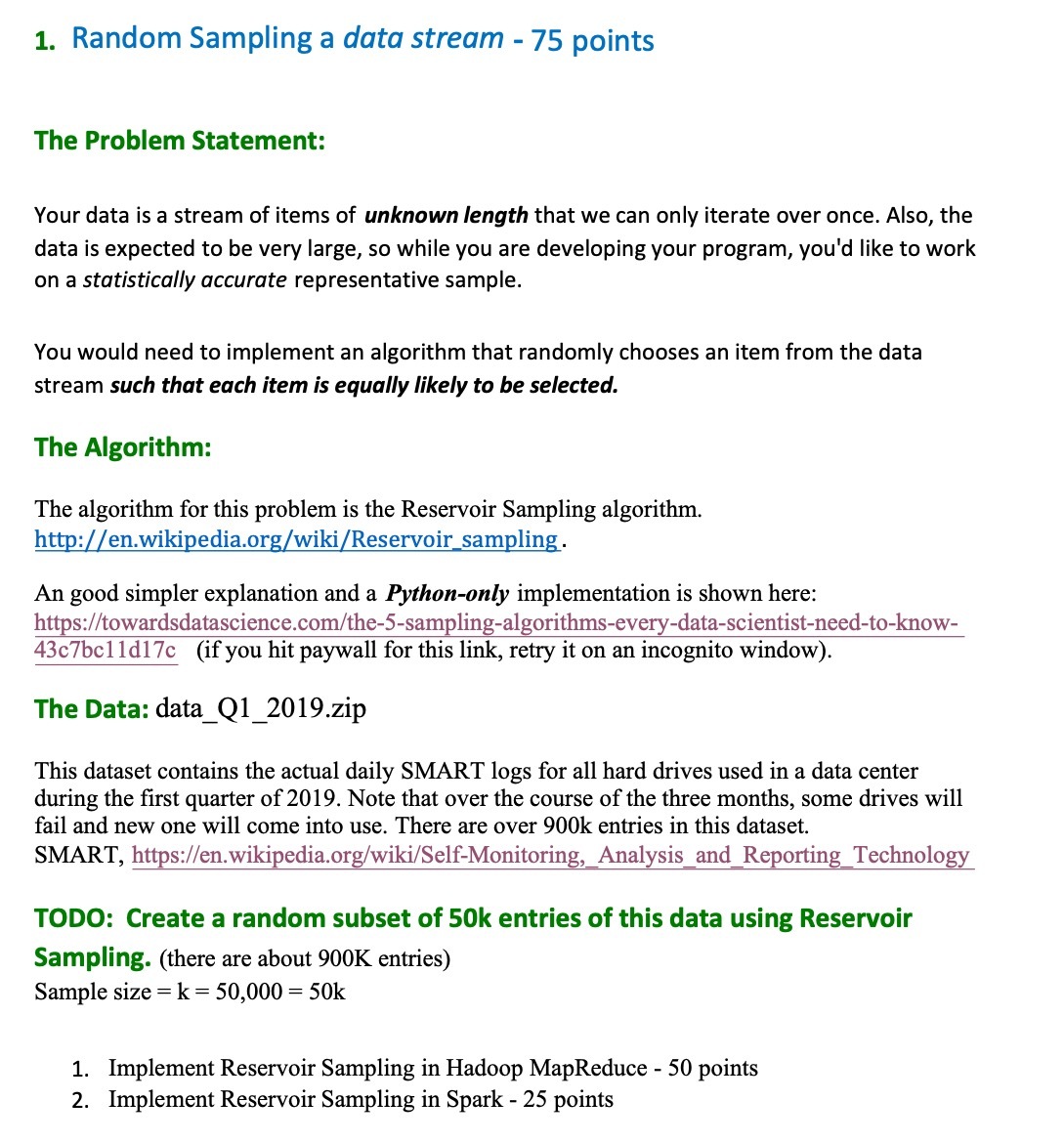

1. Random Sampling a data stream - 75 points The Problem Statement: Your data is a stream of items of unknown length that we can only iterate over once. Also, the data is expected to be very large, so while you are developing your program, you'd like to work on a statistically accurate representative sample. You would need to implement an algorithm that randomly chooses an item from the data stream such that each item is equally likely to be selected. The Algorithm: The algorithm for this problem is the Reservoir Sampling algorithm. http://en.wikipedia.org/wiki/Reservoir_sampling. An good simpler explanation and a Python-only implementation is shown here: https://towardsdatascience.com/the-5-sampling-algorithms-every-data-scientist-need-to-know- 43c7bc11d17c (if you hit paywall for this link, retry it on an incognito window). The Data: data_Q1_2019.zip This dataset contains the actual daily SMART logs for all hard drives used in a data center during the first quarter of 2019. Note that over the course of the three months, some drives will fail and new one will come into use. There are over 900k entries in this dataset. SMART, https://en.wikipedia.org/wiki/Self-Monitoring, Analysis_and_Reporting_Technology TODO: Create a random subset of 50k entries of this data using Reservoir Sampling. (there are about 900K entries) Sample size k = 50,000 = 50k 1. Implement Reservoir Sampling in Hadoop MapReduce - 50 points 2. Implement Reservoir Sampling in Spark - 25 points

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started