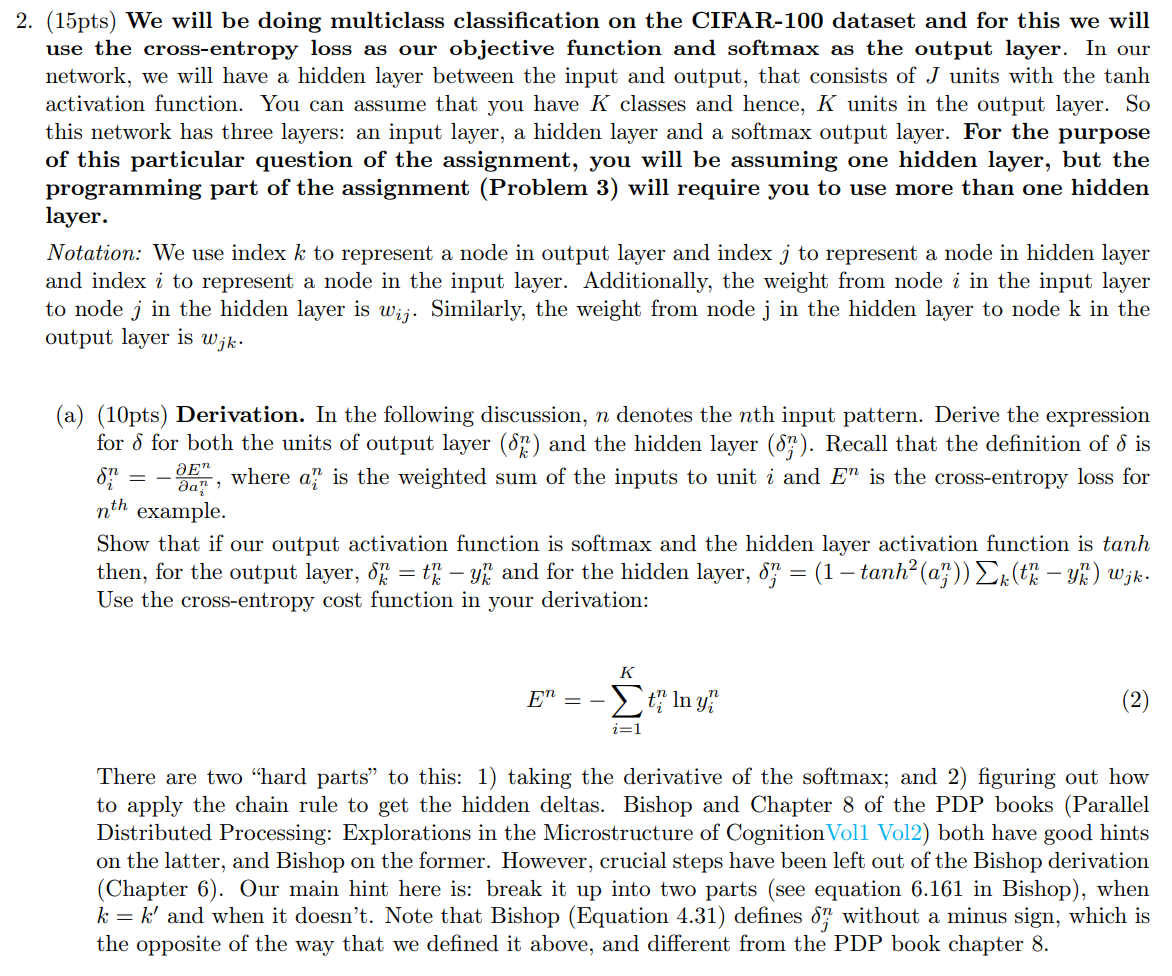

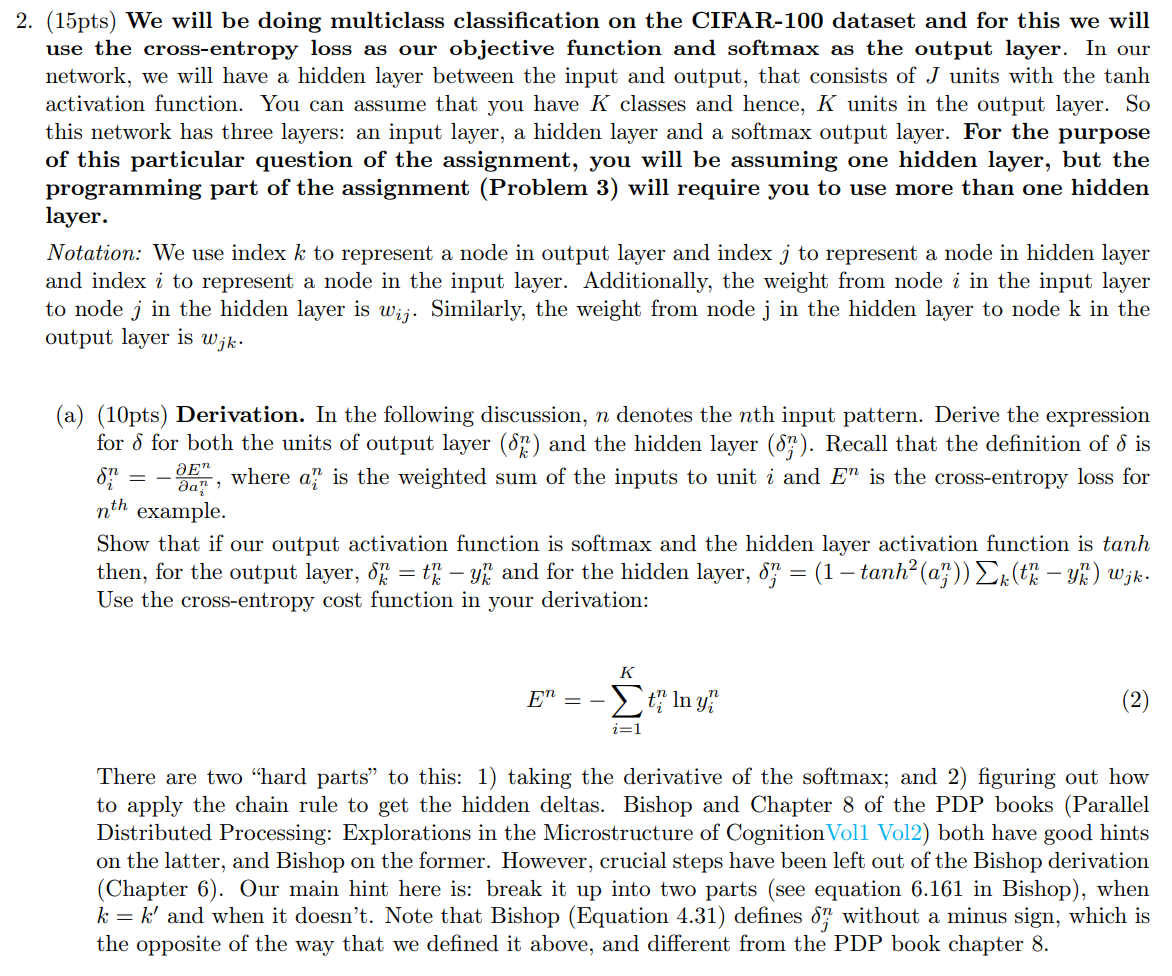

(15pts) We will be doing multiclass classification on the CIFAR-100 dataset, using the cross-entropy loss as our objective function and softmax as the output layer. Our network has a hidden layer between the input and output that consists of $J$ units with the tanh activation function. You can assume that there are $K$ classes and hence $K$ units in the output layer. The network therefore has three layers: an input layer, a hidden layer, and a softmax output layer. For this particular question you may assume a single hidden layer, but the programming part of the assignment (Problem 3) will require you to use more than one hidden layer.

Notation: index $k$ denotes a node in the output layer, index $j$ a node in the hidden layer, and index $i$ a node in the input layer. The weight from node $i$ in the input layer to node $j$ in the hidden layer is $w_{ij}$. Similarly, the weight from node $j$ in the hidden layer to node $k$ in the output layer is $w_{jk}$.

(a) (10pts) Derivation. In the following discussion, $n$ denotes the $n$th input pattern. Derive the expression for $\delta$ for both the units of the output layer ($\delta_k^n$) and the hidden layer ($\delta_j^n$). Recall that $\delta$ is defined as $\delta_i^n = -\frac{\partial E^n}{\partial a_i^n}$, where $a_i^n$ is the weighted sum of the inputs to unit $i$ and $E^n$ is the cross-entropy loss for the $n$th example. Show that if the output activation function is softmax and the hidden layer activation function is tanh, then for the output layer

$$\delta_k^n = t_k^n - y_k^n$$

and for the hidden layer

$$\delta_j^n = \left(1 - \tanh^2(a_j^n)\right) \sum_k \left(t_k^n - y_k^n\right) w_{jk}.$$

Use the cross-entropy cost function in your derivation:

$$E^n = -\sum_{i=1}^{K} t_i^n \ln y_i^n$$

There are two "hard parts" to this: 1) taking the derivative of the softmax; and 2) figuring out how to apply the chain rule to get the hidden deltas. Bishop and Chapter 8 of the PDP books (Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Vol. 1 and Vol. 2) both have good hints on the latter, and Bishop on the former.
However, crucial steps have been left out of the Bishop derivation (Chapter 6). Our main hint here is to break it up into two parts (see Equation 6.161 in Bishop): one for $k = k'$ and one for $k \neq k'$. Note that Bishop (Equation 4.31) defines $\delta_j^n$ without a minus sign, which is the opposite of the way we defined it above and different from Chapter 8 of the PDP book.
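For reference, the two cases in the hint correspond to the standard softmax derivative. Writing the softmax over the output-layer pre-activations $a_k^n$ (same notation as above), differentiating $y_k^n$ with respect to $a_{k'}^n$ gives:

$$
y_k^n = \frac{\exp(a_k^n)}{\sum_{k''=1}^{K} \exp(a_{k''}^n)},
\qquad
\frac{\partial y_k^n}{\partial a_{k'}^n} =
\begin{cases}
y_k^n \left(1 - y_k^n\right), & k = k', \\
-\, y_k^n \, y_{k'}^n, & k \neq k'.
\end{cases}
$$

The remaining step is the chain rule $\delta_{k'}^n = -\sum_k \frac{\partial E^n}{\partial y_k^n} \frac{\partial y_k^n}{\partial a_{k'}^n}$, with $\frac{\partial E^n}{\partial y_k^n} = -t_k^n / y_k^n$.

A derivation like this can also be sanity-checked numerically by comparing the closed-form deltas against central finite differences of $E^n$. The sketch below is a minimal pure-Python illustration under assumptions that are not part of the assignment: toy layer sizes, random weights, no bias terms, and a one-hot target. All helper names (`softmax`, `numeric_deltas`, and so on) are hypothetical and do not come from any starter code.

```python
import math
import random


def softmax(a):
    # Subtract the max before exponentiating for numerical stability.
    m = max(a)
    exps = [math.exp(v - m) for v in a]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(t, y):
    # E^n = -sum_k t_k^n ln y_k^n
    return -sum(tk * math.log(yk) for tk, yk in zip(t, y))


def hidden_pre_activations(x, w_ih):
    # a_j = sum_i w_ij x_i (biases omitted in this sketch)
    return [sum(x[i] * w_ih[i][j] for i in range(len(x)))
            for j in range(len(w_ih[0]))]


def output_pre_activations(a_hidden, w_ho):
    # a_k = sum_j w_jk tanh(a_j)
    z = [math.tanh(a) for a in a_hidden]
    return [sum(z[j] * w_ho[j][k] for j in range(len(z)))
            for k in range(len(w_ho[0]))]


def numeric_deltas(loss_fn, a, eps=1e-5):
    # Central-difference estimate of delta_i = -dE/da_i.
    deltas = []
    for i in range(len(a)):
        plus, minus = list(a), list(a)
        plus[i] += eps
        minus[i] -= eps
        deltas.append(-(loss_fn(plus) - loss_fn(minus)) / (2 * eps))
    return deltas


random.seed(0)
I, J, K = 4, 3, 5  # toy sizes; CIFAR-100 would use K = 100
x = [random.uniform(-1.0, 1.0) for _ in range(I)]
w_ih = [[random.gauss(0.0, 0.5) for _ in range(J)] for _ in range(I)]  # w_ij
w_ho = [[random.gauss(0.0, 0.5) for _ in range(K)] for _ in range(J)]  # w_jk
t = [0.0] * K
t[2] = 1.0  # one-hot target for class 2

a_hidden = hidden_pre_activations(x, w_ih)
a_out = output_pre_activations(a_hidden, w_ho)
y = softmax(a_out)

# Closed-form deltas stated in the problem.
delta_out = [t[k] - y[k] for k in range(K)]
delta_hidden = [(1.0 - math.tanh(a_hidden[j]) ** 2)
                * sum(delta_out[k] * w_ho[j][k] for k in range(K))
                for j in range(J)]

# Finite-difference estimates of -dE/da for the same pre-activations.
num_out = numeric_deltas(lambda a: cross_entropy(t, softmax(a)), a_out)
num_hidden = numeric_deltas(
    lambda a: cross_entropy(t, softmax(output_pre_activations(a, w_ho))),
    a_hidden)

print(max(abs(p - q) for p, q in zip(delta_out, num_out)))
print(max(abs(p - q) for p, q in zip(delta_hidden, num_hidden)))
```

If the formulas are right, both printed discrepancies should be tiny (well below 1e-6); a sign or chain-rule mistake would instead show up as a mismatch on the order of the deltas themselves.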