
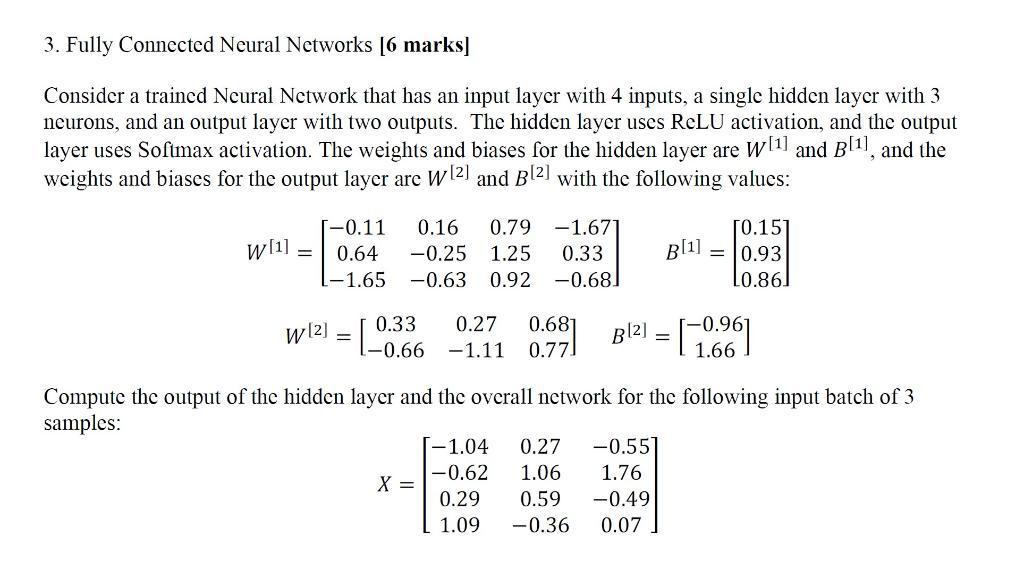

3. Fully Connected Neural Networks [6 marks]

Consider a trained neural network that has an input layer with 4 inputs, a single hidden layer with 3 neurons, and an output layer with 2 outputs. The hidden layer uses ReLU activation, and the output layer uses Softmax activation. The weights and biases for the hidden layer are W[1] and B[1], and the weights and biases for the output layer are W[2] and B[2], with the following values:

$$
W^{[1]} = \begin{bmatrix} -0.11 & 0.16 & 0.79 & -1.67 \\ 0.64 & -0.25 & 1.25 & 0.33 \\ -1.65 & -0.63 & 0.92 & -0.68 \end{bmatrix},
\qquad
B^{[1]} = \begin{bmatrix} 0.15 \\ 0.93 \\ 0.86 \end{bmatrix}
$$

$$
W^{[2]} = \begin{bmatrix} 0.33 & 0.27 & 0.68 \\ -0.66 & -1.11 & 0.77 \end{bmatrix},
\qquad
B^{[2]} = \begin{bmatrix} -0.96 \\ 1.66 \end{bmatrix}
$$

Compute the output of the hidden layer and of the overall network for the following input batch of 3 samples:

$$
X = \begin{bmatrix} -1.04 & 0.27 & -0.55 \\ -0.62 & 1.06 & 1.76 \\ 0.29 & 0.59 & -0.49 \\ 1.09 & -0.36 & 0.07 \end{bmatrix}
$$
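The requested forward pass can be sketched in plain Python as follows. This is a minimal sketch, not a reference solution, and it rests on several assumptions: the parameter values are read row by row from the problem statement, so that W[1] is 3×4, W[2] is 2×3, and X is 4×3 with one sample per column; the biases are added to every column; and Softmax is applied per sample (per column). Under those assumptions the hidden output is H = ReLU(W[1]·X + B[1]) and the network output is Y = Softmax(W[2]·H + B[2]). The helper names `affine`, `relu`, and `softmax_columns` are illustrative only.

```python
import math

# Parameter values as read from the problem statement.
# The row layout is an assumption (see the lead-in above).
W1 = [[-0.11, 0.16, 0.79, -1.67],
      [0.64, -0.25, 1.25, 0.33],
      [-1.65, -0.63, 0.92, -0.68]]   # 3 hidden neurons x 4 inputs
B1 = [0.15, 0.93, 0.86]
W2 = [[0.33, 0.27, 0.68],
      [-0.66, -1.11, 0.77]]          # 2 outputs x 3 hidden neurons
B2 = [-0.96, 1.66]
X = [[-1.04, 0.27, -0.55],
     [-0.62, 1.06, 1.76],
     [0.29, 0.59, -0.49],
     [1.09, -0.36, 0.07]]            # 4 inputs x 3 samples (one column per sample)


def affine(W, A, b):
    """Return W @ A + b, adding bias b[i] to every column of row i."""
    n_samples = len(A[0])
    return [[sum(w * A[k][j] for k, w in enumerate(row)) + b_i
             for j in range(n_samples)]
            for row, b_i in zip(W, b)]


def relu(Z):
    """Element-wise max(0, z)."""
    return [[max(0.0, z) for z in row] for row in Z]


def softmax_columns(Z):
    """Softmax over each column, i.e. over the outputs of one sample."""
    n_rows, n_cols = len(Z), len(Z[0])
    out = [[0.0] * n_cols for _ in range(n_rows)]
    for j in range(n_cols):
        col = [Z[i][j] for i in range(n_rows)]
        m = max(col)                          # subtract the max for numerical stability
        exps = [math.exp(z - m) for z in col]
        total = sum(exps)
        for i in range(n_rows):
            out[i][j] = exps[i] / total
    return out


H = relu(affine(W1, X, B1))              # hidden-layer output, 3 x 3
Y = softmax_columns(affine(W2, H, B2))   # network output, 2 x 3

for name, M in (("H", H), ("Y", Y)):
    print(name)
    for row in M:
        print("  ", [round(v, 4) for v in row])
```

Each column of `Y` holds the two class probabilities for one sample, so every column should sum to 1. If the course uses the row-per-sample convention instead (X of shape 3×4 and H = ReLU(X·W[1]ᵀ + B[1])), the results are simply the transposes of `H` and `Y`.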
