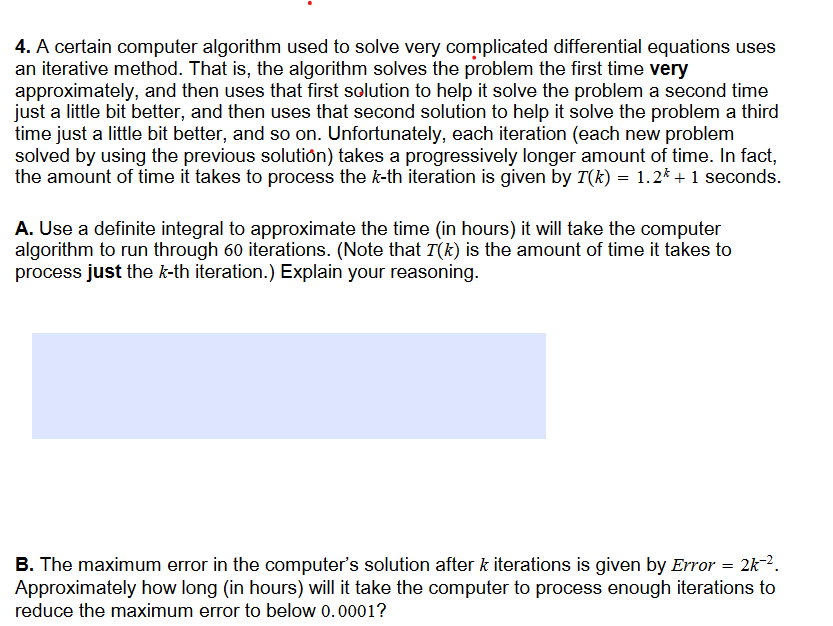

4. A certain computer algorithm used to solve very complicated differential equations uses an iterative method. That is, the algorithm solves the problem the first time very approximately, then uses that first solution to solve the problem a second time a little more accurately, then uses the second solution to solve it a third time a little more accurately, and so on. Unfortunately, each iteration (each new problem solved using the previous solution) takes progressively longer. In fact, the time needed to process the $k$-th iteration is $T(k) = 1.2^k + 1$ seconds.

A. Use a definite integral to approximate the time (in hours) it will take the computer algorithm to run through 60 iterations. (Note that $T(k)$ is the time needed to process just the $k$-th iteration.) Explain your reasoning.

B. The maximum error in the computer's solution after $k$ iterations is given by $\text{Error} = 2k^{-2}$. Approximately how long (in hours) will it take the computer to process enough iterations to reduce the maximum error to below 0.0001?
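The reasoning behind both parts can be sketched numerically. The total running time for $n$ iterations is the sum $T(1) + T(2) + \dots + T(n)$; each term is the area of a width-1 rectangle, so the sum is approximated by $\int T(k)\,dk$. Because $T$ is increasing, $\int_0^{n} T(k)\,dk$ undercounts each rectangle, while shifting the bounds to $[1/2,\ n + 1/2]$ centres each rectangle on its $k$. For Part B, $2/k^2 < 0.0001$ means $k > \sqrt{20000} \approx 141.4$, so about 142 iterations. The sketch below assumes the garbled formulas read $T(k) = 1.2^k + 1$ and $\text{Error} = 2k^{-2}$; the helper names (`T`, `F`, `integral_T`, `exact_total`, `iterations_needed`) are hypothetical and chosen for illustration only.

```python
import math

# ASSUMPTION: the garbled cost formula is read as T(k) = 1.2**k + 1 seconds.
BASE = 1.2


def T(k: float) -> float:
    """Seconds to process the k-th iteration under the assumed T(k) = 1.2^k + 1."""
    return BASE ** k + 1


def F(k: float) -> float:
    """An antiderivative of T: d/dk [1.2^k / ln(1.2) + k] = 1.2^k + 1."""
    return BASE ** k / math.log(BASE) + k


def integral_T(a: float, b: float) -> float:
    """Definite integral of T from a to b in seconds, via the Fundamental Theorem of Calculus."""
    return F(b) - F(a)


def exact_total(n: int) -> float:
    """Exact running time for iterations 1..n, summed term by term (used only as a check)."""
    return sum(T(k) for k in range(1, n + 1))


def hours(seconds: float) -> float:
    """Convert seconds to hours."""
    return seconds / 3600


def iterations_needed(tol: float) -> int:
    """Smallest integer k with 2 / k**2 < tol.

    ASSUMPTION: the garbled error formula is read as Error = 2 * k**(-2).
    """
    k = 1
    while 2 / k**2 >= tol:
        k += 1
    return k


if __name__ == "__main__":
    n = 60
    # Part A: the integral over [0, n] underestimates the sum because T is increasing;
    # the bounds [1/2, n + 1/2] centre each unit-width rectangle on its integer k.
    print(f"A, integral over [0, {n}]: {hours(integral_T(0, n)):.2f} h")
    print(f"A, integral over [0.5, {n}.5]: {hours(integral_T(0.5, n + 0.5)):.2f} h")
    print(f"A, exact sum (check): {hours(exact_total(n)):.2f} h")

    # Part B: find how many iterations push the error bound below 0.0001,
    # then integrate T over that many iterations.
    k_needed = iterations_needed(0.0001)  # 142, since sqrt(20000) is about 141.42
    print(f"B, iterations needed: {k_needed}")
    print(f"B, integral over [0, {k_needed}]: {hours(integral_T(0, k_needed)):.3e} h")
```

The closed-form antiderivative mirrors the by-hand method the problem asks for, and the term-by-term sum is included only to show how close each choice of bounds comes. Under the assumed exponential cost, Part A comes out to roughly 86 to 94 hours depending on the bounds, and Part B is on the order of $10^8$ hours. If the original formula for $T(k)$ reads differently, both `T` and its antiderivative `F` must be changed together.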
