Question: 4. Consider the following variant of string alignment: given two strings x, y, and a positive integer L, find all contiguous substrings of length at

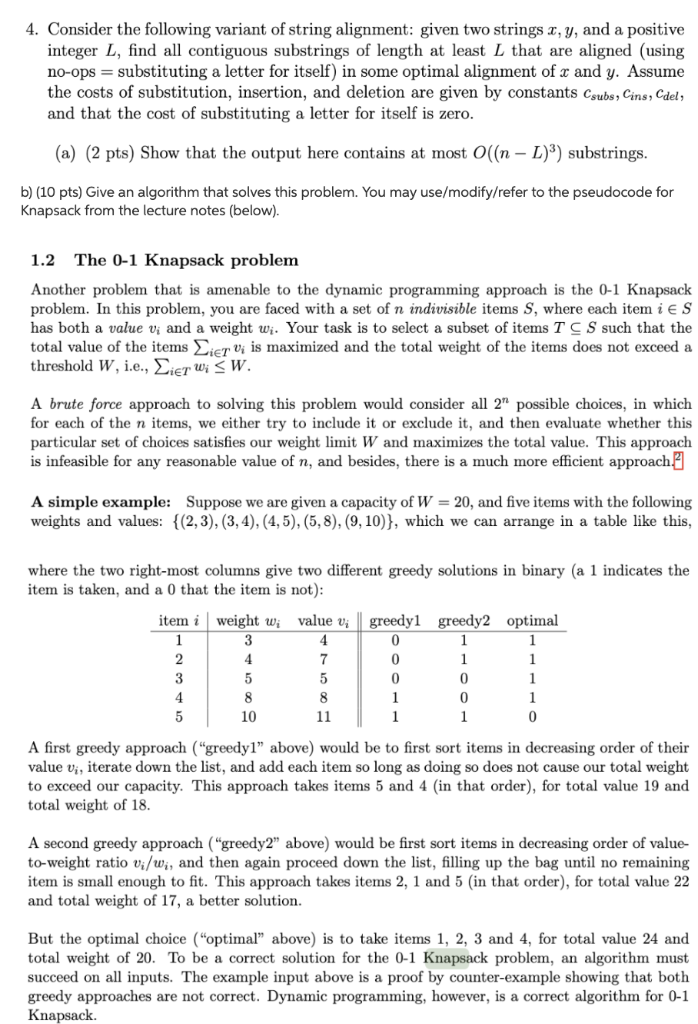

4. Consider the following variant of string alignment: given two strings x, y, and a positive integer L, find all contiguous substrings of length at least L that are aligned (using no-ops substituting a letter for itself) in some optimal alignment of x and y. Assume the costs of substitution, insertion, and deletion are given by constants Csubs, Cins, Cdel, and that the cost of substituting a letter for itself is zero (a) (2 pts) Show that the output here contains at most O((n - L)3) substrings b) (10 pts) Give an algorithm that solves this problem. You may use/modify/refer to the pseudocode for Knapsack from the lecture notes (below). 1.2 The 0-1 Knapsack problem Another problem that is amenable to the dynamic programming approach is the 0-1 Knapsack problem. In this problem, you are faced with a set of n indivisible items S, where each itemiE S has both a value v, and a weight wi. Your task is to select a subset of items T C S such that the total value of the items ETt s maximized and the total weight of the items does not exceed a threshold W. i.e., erui W. A brute force approach to solving this problem would consider all 2" possible choices, in which for each of the n items, we either try to include it or exclude it, and then evaluate whether this particular set of choices satisfies our weight limit W and maximizes the total value. This approach is infeasible for any reasonable value of n, and besides, there is a much more efficient approach A simple example: Suppose we are given a capacity of W = 20, and five items with the following weights and values: ((2,3), (3,4), (4,5), (5,8), (9,10)), which we can arrange in a table like this where the two right-most columns give two different greedy solutions in binary (a 1 indicates the item is taken, and a 0 that the item is not) itemi weight w valuevgreedyl greedy2 optimal 0 10 A first greedy approach "greedy1" above) would be to first sort items in decreasing order of their value vi, iterate down the list, and add each item so long as doing so does not cause our total weight to exceed our capacity. This approach takes items 5 and 4 (in that order), for total value 19 and total weight of 18 A second greedy approach ("greedy2" above) would be first sort items in decreasing order of value- to-weight ratio vi/wi, and then again proceed down the list, filling up the bag until no remaining item is small enough to fit. This approach takes items 2, 1 and 5 (in that order), for total value 22 and total weight of 17, a better solution But the optimal choice ("optimal above) is to take items 1, 2, 3 and 4, for total value 24 and total weight of 20. To be a correct solution for the 0-1 Knapsack problem, an algorithm must succeed on all inputs. The example input above is a proof by counter-example showing that both greedy approaches are not correct. Dynamic programming, however, is a correct algorithm for 0-1 Knapsack. 1.3 The algorithm To develop a dynamic programming solution, we need to understand how to exploit the substruc- ture to build up an optimal solution. That is, how can we break a current problem down into simple decisions that combine the optimal solutions to subproblems to produce an optimal solution for the current problem? Or, suppose we have an optimal solution to a 0-1 Knapsack problem with n items and W capacity. How could we extend this solution to be an optimal solution for a problem with n +1 items or with W +1 capacity? This question presents a key insight: every choice of k e [0, n] and capacity w E [0, W] is a subprob- lem for parameters n and W. The parameter k lets us vary how many fewer items we consider, and the parameter w lets us vary how much smaller capacity bag to consider Crucially, both param- eters are necessary. At home exercise: prove that an approach using only k will fail on some inputs.) Suppose we have already computed the optimal solution for some particular value of k- 1 and for all values 0 W. That is, for every choice of a smaller bag than W, we know the optimal value that can be obtained. Now consider adding one more item, with weight wk and value vk, meaning we have k items total to consider. There are three possibilities (No room) If wk > w, then our bag is too small to include the kth item, regardless of what subproblem we might build off of. (Take it) If our bag is large enough, i.e., wk S w, the optimal value is this item's value vjk plus the optimal value for the subproblem on k items and weight w - wk, i.e., a bag with just (Leave it) If our bag is large enough, i.e., wk S w, the optimal solution on k - 1 items for write them down as a simple recursive expression that enough extra capacity to hold item k capacity uw We can take these three possibilities and gives the optimal solution for k items and capacity w, which we denote B(k,w), in terms of optimal solutions to smaller problems B(k-1, ) max( B(k - 1, w) , B(k -1,w -k) vk) otherwise if wk w B(k,w)- The upper branch governs the Leave it" conditions. Crucially, because we want the optimal value for B(k, w), we take the larger value of the two possibilities where we could take the item. "No room" condition, while the lower branch covers "Take it" and As with the path counting problem, the recursive formula immediately implies a memoization scheme by which to store the optimal solutions for the (k, w) subproblems. Here, we use a table B with n rows and W1 columns. Because our formula for B(k, w) only ever refers to subproblems on the k 1 row, we may fill in this table one row at a time. Here is pseudocode: for w 0 to W f B[0,w]-0 // base case: no items for k-1 tontBCk,0]O// base case: no capacity for k = 1 to n { for w 0 to W { // item could fit take b[k]B[ k-1, w-k leave BC k-1, w ] B(k,y] -max ( take , leave ) // optimal if ve take // optimal if we leave // optimal either way // optimal if item couldn't fit 1.3.1 Running time The running time of the 0-1 Knapsack problem is straightforward. Allocating the B matrix requires time (n W), and the two initialization loops take (W) and (n) time. Every elem ent in the table is filled by the double loop. The inner part of the loop takes (1) time, because it consists only of atomic operations (maximum takes constant time -do you see why?). Hence, the double loop takes (nW) time, and the algorithm as a whole takes (nW) time 1.3.2 Correctness The correctness of the 0-1 Knapsack algorithm follows a proof by strong induction on the contents of B. (Left as an exercise. Also left as an exercise: running the algorithm on the small example given above 1.4 Items from the table When the Knapsack algorithm completes, the optimal value is stored in the B(n, W) entry of the table. This does not tell us which items were chosen, however. But this information is contained inside the table B, and we can recover it by using a "trace back" algorithm, which reconstructs a sequence of take it / leave it decisions that are consistent with the optimal value The idea is straightforward. For a particular choice of k and w, there were only two possibilities by which we could have obtained the value B(k,w). First, if B(k,w) = B(k-1, w-wk) + Uk, then item k is in the optimal set and the next subproblem to consider is k - 1 and w - wk; otherwise, Blk, u) = B(k-1, w), inplying that k is not in the optimal set, and the next subproblem to consider is K-1 and w. Or, in pseudocode: for k- 1 to n { solution [k] = 0 } // binary array: is in optimal set? // starting capacity for k-n down to 1 takenb[k] B[ k-1, w-w[k] ] // optimal value if we took k if B[k,w]-taken f solution [k]1 w-w-w[k] // did we take k? // add k to optimal set // update capacity size cy The result is a binary array solution of length n, which contains a 1 anywhere there was an item that was "taken" in order to construct a solution with value B(n, W). This algorithrn runs in (n) time, because it only examines one element per row of the table B 4. Consider the following variant of string alignment: given two strings x, y, and a positive integer L, find all contiguous substrings of length at least L that are aligned (using no-ops substituting a letter for itself) in some optimal alignment of x and y. Assume the costs of substitution, insertion, and deletion are given by constants Csubs, Cins, Cdel, and that the cost of substituting a letter for itself is zero (a) (2 pts) Show that the output here contains at most O((n - L)3) substrings b) (10 pts) Give an algorithm that solves this problem. You may use/modify/refer to the pseudocode for Knapsack from the lecture notes (below). 1.2 The 0-1 Knapsack problem Another problem that is amenable to the dynamic programming approach is the 0-1 Knapsack problem. In this problem, you are faced with a set of n indivisible items S, where each itemiE S has both a value v, and a weight wi. Your task is to select a subset of items T C S such that the total value of the items ETt s maximized and the total weight of the items does not exceed a threshold W. i.e., erui W. A brute force approach to solving this problem would consider all 2" possible choices, in which for each of the n items, we either try to include it or exclude it, and then evaluate whether this particular set of choices satisfies our weight limit W and maximizes the total value. This approach is infeasible for any reasonable value of n, and besides, there is a much more efficient approach A simple example: Suppose we are given a capacity of W = 20, and five items with the following weights and values: ((2,3), (3,4), (4,5), (5,8), (9,10)), which we can arrange in a table like this where the two right-most columns give two different greedy solutions in binary (a 1 indicates the item is taken, and a 0 that the item is not) itemi weight w valuevgreedyl greedy2 optimal 0 10 A first greedy approach "greedy1" above) would be to first sort items in decreasing order of their value vi, iterate down the list, and add each item so long as doing so does not cause our total weight to exceed our capacity. This approach takes items 5 and 4 (in that order), for total value 19 and total weight of 18 A second greedy approach ("greedy2" above) would be first sort items in decreasing order of value- to-weight ratio vi/wi, and then again proceed down the list, filling up the bag until no remaining item is small enough to fit. This approach takes items 2, 1 and 5 (in that order), for total value 22 and total weight of 17, a better solution But the optimal choice ("optimal above) is to take items 1, 2, 3 and 4, for total value 24 and total weight of 20. To be a correct solution for the 0-1 Knapsack problem, an algorithm must succeed on all inputs. The example input above is a proof by counter-example showing that both greedy approaches are not correct. Dynamic programming, however, is a correct algorithm for 0-1 Knapsack. 1.3 The algorithm To develop a dynamic programming solution, we need to understand how to exploit the substruc- ture to build up an optimal solution. That is, how can we break a current problem down into simple decisions that combine the optimal solutions to subproblems to produce an optimal solution for the current problem? Or, suppose we have an optimal solution to a 0-1 Knapsack problem with n items and W capacity. How could we extend this solution to be an optimal solution for a problem with n +1 items or with W +1 capacity? This question presents a key insight: every choice of k e [0, n] and capacity w E [0, W] is a subprob- lem for parameters n and W. The parameter k lets us vary how many fewer items we consider, and the parameter w lets us vary how much smaller capacity bag to consider Crucially, both param- eters are necessary. At home exercise: prove that an approach using only k will fail on some inputs.) Suppose we have already computed the optimal solution for some particular value of k- 1 and for all values 0 W. That is, for every choice of a smaller bag than W, we know the optimal value that can be obtained. Now consider adding one more item, with weight wk and value vk, meaning we have k items total to consider. There are three possibilities (No room) If wk > w, then our bag is too small to include the kth item, regardless of what subproblem we might build off of. (Take it) If our bag is large enough, i.e., wk S w, the optimal value is this item's value vjk plus the optimal value for the subproblem on k items and weight w - wk, i.e., a bag with just (Leave it) If our bag is large enough, i.e., wk S w, the optimal solution on k - 1 items for write them down as a simple recursive expression that enough extra capacity to hold item k capacity uw We can take these three possibilities and gives the optimal solution for k items and capacity w, which we denote B(k,w), in terms of optimal solutions to smaller problems B(k-1, ) max( B(k - 1, w) , B(k -1,w -k) vk) otherwise if wk w B(k,w)- The upper branch governs the Leave it" conditions. Crucially, because we want the optimal value for B(k, w), we take the larger value of the two possibilities where we could take the item. "No room" condition, while the lower branch covers "Take it" and As with the path counting problem, the recursive formula immediately implies a memoization scheme by which to store the optimal solutions for the (k, w) subproblems. Here, we use a table B with n rows and W1 columns. Because our formula for B(k, w) only ever refers to subproblems on the k 1 row, we may fill in this table one row at a time. Here is pseudocode: for w 0 to W f B[0,w]-0 // base case: no items for k-1 tontBCk,0]O// base case: no capacity for k = 1 to n { for w 0 to W { // item could fit take b[k]B[ k-1, w-k leave BC k-1, w ] B(k,y] -max ( take , leave ) // optimal if ve take // optimal if we leave // optimal either way // optimal if item couldn't fit 1.3.1 Running time The running time of the 0-1 Knapsack problem is straightforward. Allocating the B matrix requires time (n W), and the two initialization loops take (W) and (n) time. Every elem ent in the table is filled by the double loop. The inner part of the loop takes (1) time, because it consists only of atomic operations (maximum takes constant time -do you see why?). Hence, the double loop takes (nW) time, and the algorithm as a whole takes (nW) time 1.3.2 Correctness The correctness of the 0-1 Knapsack algorithm follows a proof by strong induction on the contents of B. (Left as an exercise. Also left as an exercise: running the algorithm on the small example given above 1.4 Items from the table When the Knapsack algorithm completes, the optimal value is stored in the B(n, W) entry of the table. This does not tell us which items were chosen, however. But this information is contained inside the table B, and we can recover it by using a "trace back" algorithm, which reconstructs a sequence of take it / leave it decisions that are consistent with the optimal value The idea is straightforward. For a particular choice of k and w, there were only two possibilities by which we could have obtained the value B(k,w). First, if B(k,w) = B(k-1, w-wk) + Uk, then item k is in the optimal set and the next subproblem to consider is k - 1 and w - wk; otherwise, Blk, u) = B(k-1, w), inplying that k is not in the optimal set, and the next subproblem to consider is K-1 and w. Or, in pseudocode: for k- 1 to n { solution [k] = 0 } // binary array: is in optimal set? // starting capacity for k-n down to 1 takenb[k] B[ k-1, w-w[k] ] // optimal value if we took k if B[k,w]-taken f solution [k]1 w-w-w[k] // did we take k? // add k to optimal set // update capacity size cy The result is a binary array solution of length n, which contains a 1 anywhere there was an item that was "taken" in order to construct a solution with value B(n, W). This algorithrn runs in (n) time, because it only examines one element per row of the table B

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts