Question

1 Approved Answer

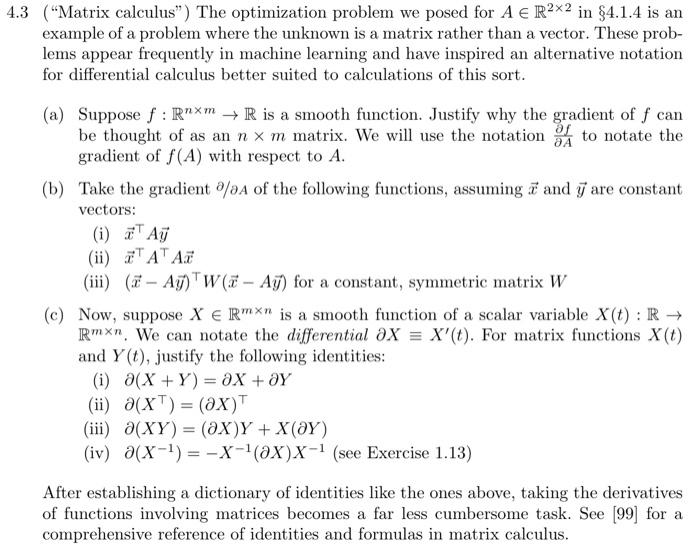

4.3 (Matrix calculus) The optimization problem we posed for A R in 4.1.4 is an example of a problem where the unknown is a matrix

4.3 ("Matrix calculus") The optimization problem we posed for the matrix $A$ in §4.1.4 is an example of a problem where the unknown is a matrix rather than a vector. These problems appear frequently in machine learning and have inspired an alternative notation for differential calculus better suited to calculations of this sort.

(a) Suppose $f : \mathbb{R}^{n \times m} \to \mathbb{R}$ is a smooth function. Justify why the gradient of $f$ can be thought of as an $n \times m$ matrix. We will use the notation $\frac{\partial f}{\partial A}$ to denote the gradient of $f(A)$ with respect to $A$.

(b) Take the gradient $\frac{\partial}{\partial A}$ of the following functions, assuming $\vec{x}$ and $\vec{y}$ are constant vectors:

(i) $\vec{x}^\top A \vec{y}$

(ii) $\vec{x}^\top A^\top A \vec{x}$

(iii) $(\vec{x} - A\vec{y})^\top W (\vec{x} - A\vec{y})$ for a constant, symmetric matrix $W$

(c) Now, suppose $X \in \mathbb{R}^{m \times n}$ is a smooth function of a scalar variable, $X(t) : \mathbb{R} \to \mathbb{R}^{m \times n}$. We can notate the differential $\partial X \equiv X'(t)$. For matrix functions $X(t)$ and $Y(t)$, justify the following identities:

(i) $\partial(X + Y) = \partial X + \partial Y$

(ii) $\partial(X^\top) = (\partial X)^\top$

(iii) $\partial(XY) = (\partial X)Y + X(\partial Y)$

(iv) $\partial(X^{-1}) = -X^{-1}(\partial X)X^{-1}$ (see Exercise 1.13)

After establishing a dictionary of identities like the ones above, taking the derivatives of functions involving matrices becomes a far less cumbersome task. See [99] for a comprehensive reference of identities and formulas in matrix calculus.
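Before working through the proofs, it can help to check candidate answers numerically. The sketch below is not the textbook's solution. It compares finite-difference estimates against two standard closed forms: the gradient of the bilinear form x^T A y from part (b)(i), taken here to be the outer product x y^T, and the differential of the matrix inverse from part (c)(iv). The helper name `numerical_gradient`, the random test data, and the path X(t) = X0 + tD are assumptions made for illustration only.

```python
import numpy as np


def numerical_gradient(f, A, eps=1e-6):
    """Central-difference estimate of the gradient of a scalar function f(A).

    The result has the same n x m shape as A: entry (i, j) approximates
    the partial derivative of f with respect to A[i, j].
    """
    G = np.zeros_like(A)
    for i in range(A.shape[0]):
        for j in range(A.shape[1]):
            E = np.zeros_like(A)
            E[i, j] = eps  # perturb a single entry of A
            G[i, j] = (f(A + E) - f(A - E)) / (2 * eps)
    return G


rng = np.random.default_rng(0)  # hypothetical test data
n, m = 3, 4
A = rng.standard_normal((n, m))
x = rng.standard_normal(n)
y = rng.standard_normal(m)

# Part (b)(i): f(A) = x^T A y, candidate gradient x y^T (an n x m matrix).
G_num = numerical_gradient(lambda M: x @ M @ y, A)
G_closed = np.outer(x, y)
err_bilinear = np.max(np.abs(G_num - G_closed))

# Part (c)(iv): along the assumed path X(t) = X0 + t D we have dX = D,
# so the identity predicts d(X^{-1}) = -X^{-1} D X^{-1} at t = 0.
M0 = rng.standard_normal((n, n))
X0 = M0 @ M0.T + np.eye(n)  # symmetric positive definite, hence invertible
D = rng.standard_normal((n, n))
h = 1e-6
dinv_num = (np.linalg.inv(X0 + h * D) - np.linalg.inv(X0 - h * D)) / (2 * h)
X0_inv = np.linalg.inv(X0)
dinv_closed = -X0_inv @ D @ X0_inv
err_inverse = np.max(np.abs(dinv_num - dinv_closed))

print("max error, gradient of x^T A y:", err_bilinear)
print("max error, differential of X^{-1}:", err_inverse)
```

Both errors should be small relative to the entries involved. Agreement of this kind only suggests that a candidate formula is plausible; the exercise still asks for a justification. Note that the gradient is stored with the same shape as A, which is the point of part (a).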
