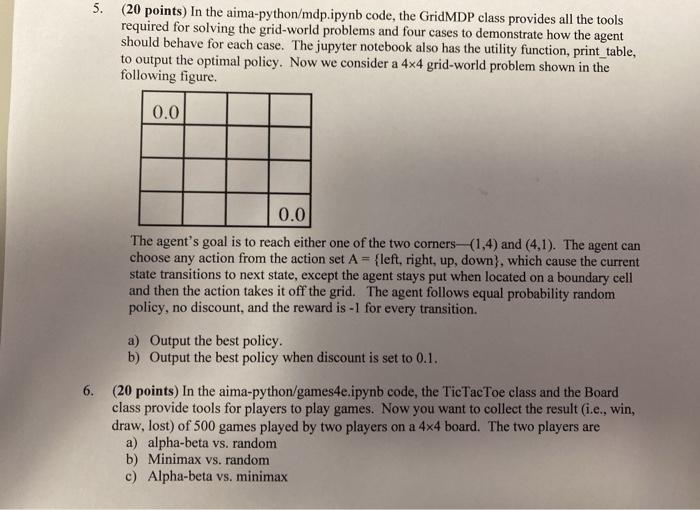

5. (20 points) In the aima-python/mdp.ipynb code, the GridMDP class provides all the tools required for solving grid-world problems, along with four cases that demonstrate how the agent should behave in each. The Jupyter notebook also has a utility function, print_table, to output the optimal policy. Now consider the 4x4 grid-world problem shown in the following figure (two cells are labeled 0.0). The agent's goal is to reach either of the two corners (1,4) and (4,1). The agent can choose any action from the action set A = {left, right, up, down}. Each action moves the agent from its current state to the neighboring state in that direction, except that the agent stays put when it is on a boundary cell and the action would take it off the grid. The agent follows an equal-probability random policy, there is no discount, and the reward is -1 for every transition.

   a) Output the best policy.

   b) Output the best policy when the discount is set to 0.1.

6. (20 points) In the aima-python/games4e.ipynb code, the TicTacToe class and the Board class provide tools for players to play games. Collect the results (i.e., win, draw, loss) of 500 games played by two players on a 4x4 board, for each of the following pairings:

   a) Alpha-beta vs. random

   b) Minimax vs. random

   c) Alpha-beta vs. minimax
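For problem 5, the grid world can be sketched without the notebook. The code below is a minimal standalone illustration, not the GridMDP API: it assumes deterministic moves, (x, y) coordinates that are 1-indexed from the bottom-left, and it reads "best policy" as the greedy policy with respect to the values found by value iteration. The names `step`, `value_iteration`, `greedy_policy`, and `print_policy` are hypothetical.

```python
# Hypothetical standalone sketch; it does not use aima-python's GridMDP.
ACTIONS = {"left": (-1, 0), "right": (1, 0), "up": (0, 1), "down": (0, -1)}
SIZE = 4
TERMINALS = {(1, 4), (4, 1)}  # assumed: (x, y), 1-indexed, origin bottom-left
STATES = [(x, y) for x in range(1, SIZE + 1) for y in range(1, SIZE + 1)]


def step(state, action):
    """Deterministic move; the agent stays put if the move leaves the grid."""
    dx, dy = ACTIONS[action]
    nx, ny = state[0] + dx, state[1] + dy
    if 1 <= nx <= SIZE and 1 <= ny <= SIZE:
        return (nx, ny)
    return state


def value_iteration(gamma=1.0, reward=-1.0, tol=1e-9, max_sweeps=1000):
    """Synchronous value iteration; terminal states keep value 0."""
    V = {s: 0.0 for s in STATES}
    for _ in range(max_sweeps):
        new_V = {}
        delta = 0.0
        for s in STATES:
            if s in TERMINALS:
                new_V[s] = 0.0
                continue
            new_V[s] = max(reward + gamma * V[step(s, a)] for a in ACTIONS)
            delta = max(delta, abs(new_V[s] - V[s]))
        V = new_V
        if delta < tol:
            break
    return V


def greedy_policy(V, gamma=1.0, reward=-1.0):
    """For each state, list every action that attains the best one-step value."""
    policy = {}
    for s in STATES:
        if s in TERMINALS:
            policy[s] = None
            continue
        q = {a: reward + gamma * V[step(s, a)] for a in ACTIONS}
        best = max(q.values())
        policy[s] = [a for a in ACTIONS if abs(q[a] - best) < 1e-9]
    return policy


def print_policy(policy):
    """Print the grid top row first; 'T' marks a terminal cell."""
    arrows = {"left": "<", "right": ">", "up": "^", "down": "v"}
    for y in range(SIZE, 0, -1):
        cells = []
        for x in range(1, SIZE + 1):
            acts = policy[(x, y)]
            cells.append("T" if acts is None else "".join(arrows[a] for a in acts))
        print(" ".join(f"{cell:>4}" for cell in cells))


if __name__ == "__main__":
    for gamma in (1.0, 0.1):
        print(f"gamma = {gamma}")
        print_policy(greedy_policy(value_iteration(gamma), gamma))
```

Under these assumptions every non-terminal state has value equal to minus the (discounted) number of steps to the nearest goal corner, so the greedy actions point along shortest paths for both discount settings. A solution built on GridMDP may differ if its transition model is not deterministic.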
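For problem 6, the bookkeeping for 500 games can be sketched independently of the search algorithms. The code below is a minimal standalone harness, not the games4e API: it assumes a win means filling a complete row, column, or diagonal of the 4x4 board, and it uses random players as stand-ins. The names `play_game`, `random_player`, and `tally`, and the player signature `(board, empty, mark)`, are hypothetical.

```python
import random
from collections import Counter


def winning_lines(n):
    """All rows, columns, and the two diagonals of an n x n board."""
    rows = [[(r, c) for c in range(n)] for r in range(n)]
    cols = [[(r, c) for r in range(n)] for c in range(n)]
    diags = [[(i, i) for i in range(n)], [(i, n - 1 - i) for i in range(n)]]
    return rows + cols + diags


def play_game(player_x, player_o, n=4):
    """Play one game and return 'X', 'O', or 'draw'.

    Assumption: a player wins by filling a whole line of length n.
    """
    board = {}
    lines = winning_lines(n)
    players = {"X": player_x, "O": player_o}
    mark = "X"
    while len(board) < n * n:
        empty = [(r, c) for r in range(n) for c in range(n) if (r, c) not in board]
        move = players[mark](board, empty, mark)
        board[move] = mark
        if any(all(board.get(sq) == mark for sq in line) for line in lines):
            return mark
        mark = "O" if mark == "X" else "X"
    return "draw"


def random_player(rng):
    """Return a player function that picks a uniformly random empty square."""
    def choose(board, empty, mark):
        return rng.choice(empty)
    return choose


def tally(player_x, player_o, games=500, n=4):
    """Count results from the first player's (X's) point of view."""
    results = Counter()
    for _ in range(games):
        winner = play_game(player_x, player_o, n)
        results[{"X": "win", "O": "loss"}.get(winner, "draw")] += 1
    return results


if __name__ == "__main__":
    rng = random.Random(0)
    print(tally(random_player(rng), random_player(rng)))
```

To use the harness for the three pairings, the random stand-ins would be replaced by players that wrap the notebook's alpha-beta and minimax searches. Exhaustive minimax on a 4x4 board may be very slow, so a depth cutoff or a cache of evaluated positions is likely to be needed.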