5 Just One Tail, Please

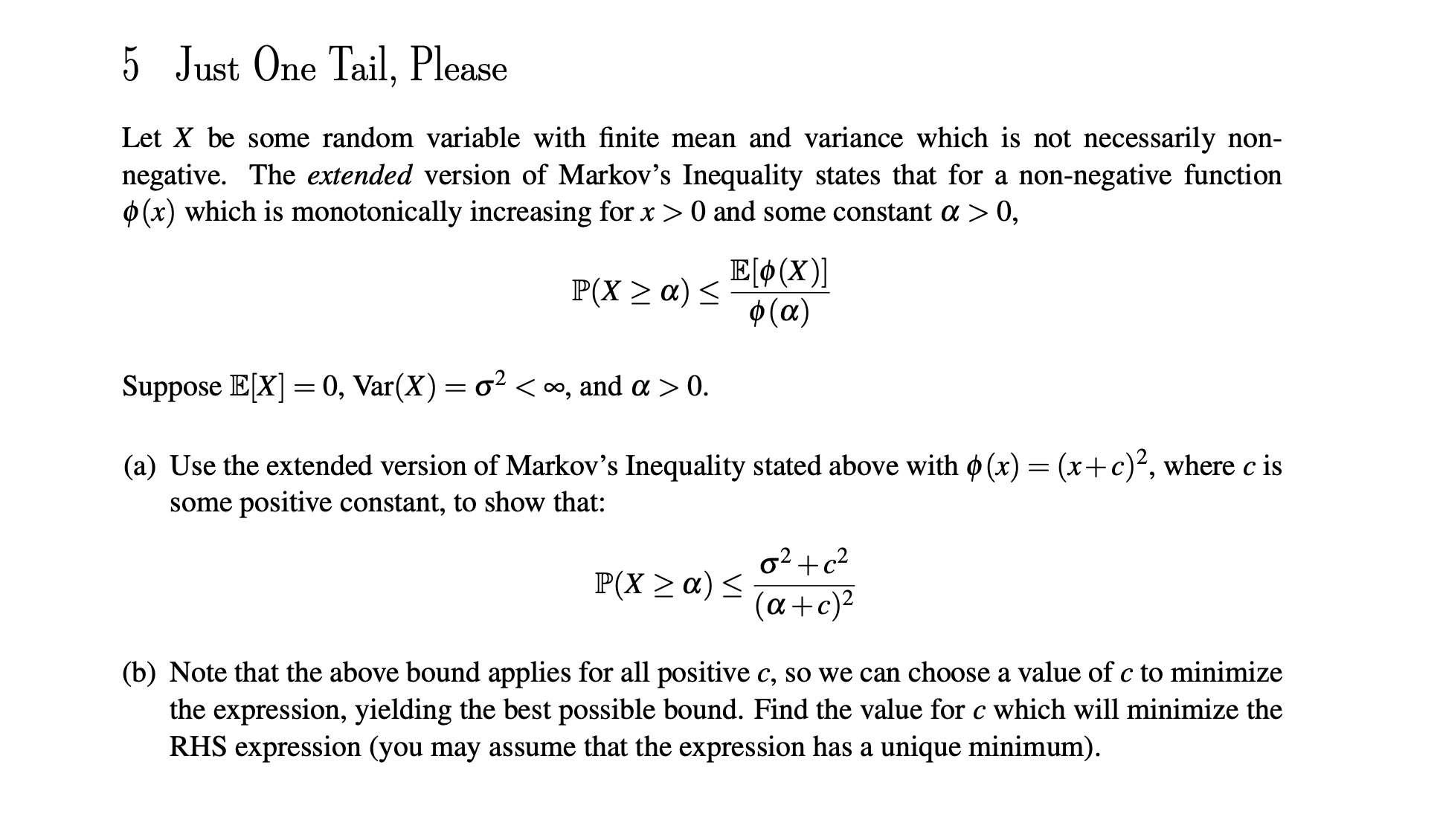

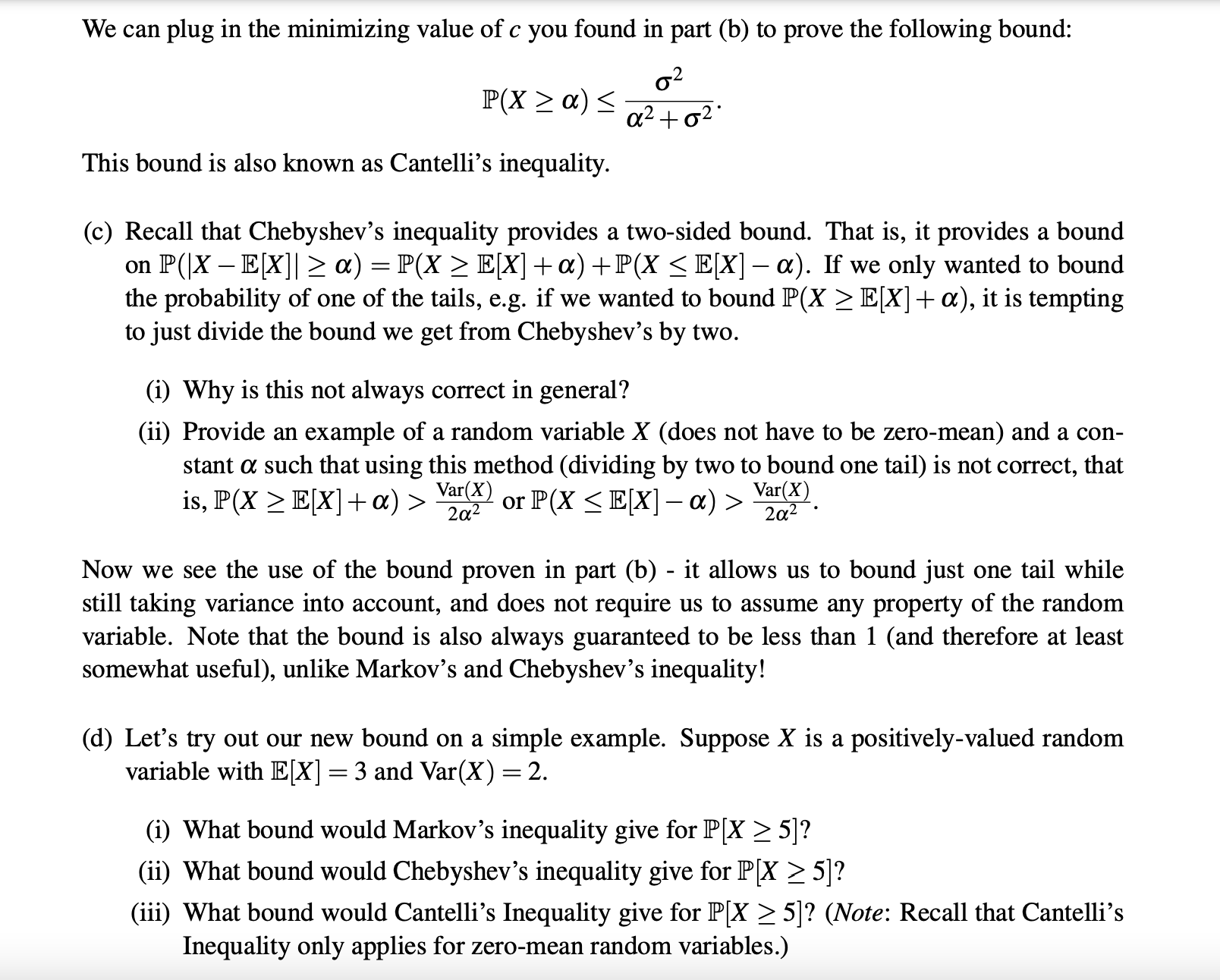

5 Just One Tail, Please Let X be some random variable with nite mean and variance which is not necessarily non- negative. The extended version of Markov's Inequality states that for a non-negative function (x) which is monotonically increasing for x > 0 and some constant a > 0, 1E[(X)] (P01) P(X2a)s Suppose ]E[X] = 0, Var(X) = 0'2 0. (a) Use the extended version of Markov's Inequality stated above with (i) (x) = (x+ c)2, where c is some positive constant, to show that: 2 2 IP(X2a) g a \"2 (a+c) (b) Note that the above bound applies for all positive 6, so we can choose a value of c to minimize the expression, yielding the best possible bound. Find the value for c which will minimize the RHS expression (you may assume that the expression has a unique minimum). We can plug in the minimizing value of c you found in part (b) to prove the following bound: 0.2 a2+o2' P(X 2 a) S This bound is also known as Cantelli's inequality. (c) Recall that Chebyshev's inequality provides a two-sided bound. That is, it provides a bound on ]P'(|X IE[X]| 2 a) = ]P(X 2 IE[X] +05) +]P'(X g IE[X] a). Ifwe only wanted to bound the probability of one of the tails, e.g. if we wanted to bound ]P'(X 2 ]E[X] + a), it is tempting to just divide the bound we get from Chebyshev's by two. (i) Why is this not always correct in general? (ii) Provide an example of a random variable X (does not have to be zero-mean) and a con- stant a such that using this method (dividing by two to bound one tail) is not correct, that is, no; 2 ]E[X] +05) > $3311 or]P(X g ]E[X] a) > M. 2'052 Now we see the use of the bound proven in part (b) - it allows us to bound just one tail while still taking variance into account, and does not require us to assume any property of the random variable. Note that the bound is also always guaranteed to be less than 1 (and therefore at least somewhat useful), unlike Markov's and Chebyshev's inequality! ((1) Let's try out our new bound on a simple example. Suppose X is a positively-valued random variable with ]E[X] = 3 and Var(X) = 2. (i) What bound would Markov's inequality give for ]P'[X 2 5]? (ii) What bound would Chebyshev's inequality give for ]P[X Z 5]? (iii) What bound would Cantelli's Inequality give for ]P[X 2 5]? (Note: Recall that Cantelli's Inequality only applies for zero-mean random variables.)