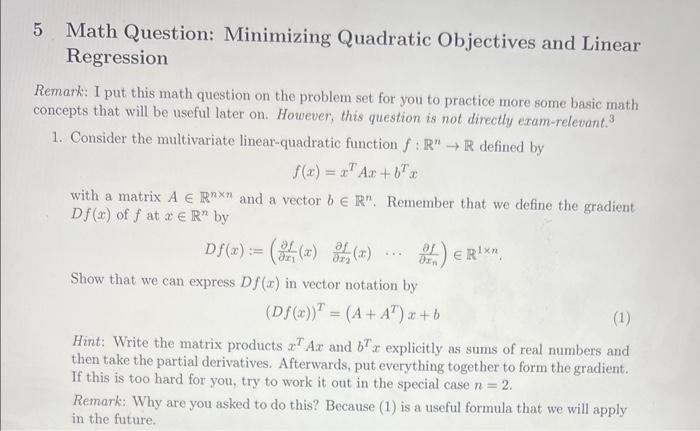

5 Math Question: Minimizing Quadratic Objectives and Linear Regression

Remark: I put this math question on the problem set for you to practice some more basic math concepts that will be useful later on. However, this question is not directly exam-relevant.

1. Consider the multivariate linear-quadratic function $f: \mathbb{R}^n \to \mathbb{R}$ defined by $f(x) = x^T A x + b^T x$ with a matrix $A \in \mathbb{R}^{n \times n}$ and a vector $b \in \mathbb{R}^n$. Remember that we define the gradient $Df(x)$ of $f$ at $x \in \mathbb{R}^n$ by

   $$Df(x) := \left(\frac{\partial f}{\partial x_1}(x) \;\; \frac{\partial f}{\partial x_2}(x) \;\; \cdots \;\; \frac{\partial f}{\partial x_n}(x)\right) \in \mathbb{R}^{1 \times n}.$$

   Show that we can express $Df(x)$ in vector notation by

   $$(Df(x))^T = (A + A^T)x + b. \tag{1}$$

   Hint: Write the matrix products $x^T A x$ and $b^T x$ explicitly as sums of real numbers and then take the partial derivatives. Afterwards, put everything together to form the gradient. If this is too hard for you, try to work it out in the special case $n = 2$.

   Remark: Why are you asked to do this? Because (1) is a useful formula that we will apply in the future.

2. Consider $f$ from the previous part and assume that $A$ is symmetric and invertible. Assume further that $f$ has a minimum at $x^*$. Using equation (1) from the previous part, determine $x^*$.
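
One way to sanity-check part 1 numerically is to compare formula (1) with finite differences. A candidate answer to part 2 can then be checked by solving the first-order condition. The sketch below is a minimal illustration that assumes NumPy. The helper names `f`, `grad_formula` and `grad_numeric`, the random test matrices, and the step size `h` are hypothetical choices, not part of the problem set.

The matrix $A$ used for part 1 is deliberately non-symmetric, since (1) is claimed for any square $A$. For part 2, the sketch builds a symmetric positive definite $A$ so that a minimum actually exists. It solves the first-order condition numerically rather than writing out the closed form the exercise asks for.

```python
import numpy as np

def f(x, A, b):
    """Linear-quadratic objective f(x) = x^T A x + b^T x."""
    return x @ A @ x + b @ x

def grad_formula(x, A, b):
    """Transposed gradient according to formula (1): (A + A^T) x + b."""
    return (A + A.T) @ x + b

def grad_numeric(x, A, b, h=1e-6):
    """Central finite differences, one partial derivative per coordinate."""
    g = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        g[i] = (f(x + e, A, b) - f(x - e, A, b)) / (2 * h)
    return g

rng = np.random.default_rng(0)
n = 4

# Part 1: formula (1) should hold for any square A, symmetric or not.
A = rng.normal(size=(n, n))
b = rng.normal(size=n)
x = rng.normal(size=n)
grad_error = np.max(np.abs(grad_formula(x, A, b) - grad_numeric(x, A, b)))

# Part 2: a symmetric positive definite A (hypothetical), so a minimum exists.
M = rng.normal(size=(n, n))
A_sym = M @ M.T + n * np.eye(n)
# Set the gradient from (1) to zero and solve (A + A^T) x* = -b numerically.
x_star = np.linalg.solve(A_sym + A_sym.T, -b)
# Small random perturbations of x* should never give a smaller value of f.
perturbed = [f(x_star + 0.1 * rng.normal(size=n), A_sym, b) for _ in range(1000)]

print(f"max gradient error: {grad_error:.2e}")
print(f"f(x*) = {f(x_star, A_sym, b):.4f}, smallest perturbed value = {min(perturbed):.4f}")
```

Because $f$ is quadratic, central differences recover each partial derivative exactly up to rounding, so the gradient error should be at the level of floating-point noise. A noticeably larger error points to a mistake in the formula, for example using $2Ax + b$ with a non-symmetric $A$.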

3. Bonus question (no solution is provided for this one): Apply the results from the previous parts to prove the formula for the OLS coefficients in the (population) regression of $y$ on $x_1, \ldots, x_n$ discussed on slide 42 of Lecture 0a ("Math Review"). More precisely, show that $\beta = (E[xx^T])^{-1} E[yx]$ minimizes $E[(y - \beta^T x)^2]$ for any $\mathbb{R}$-valued random variable $y$ and $\mathbb{R}^n$-valued random variable $x$ such that the matrix $E[xx^T]$ has full rank.
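
A quick simulation can make the bonus claim concrete before you prove it. The sketch below assumes NumPy and replaces each population expectation with a sample average over simulated draws. It therefore checks only the sample analogue, not the population statement itself. The data-generating process, the coefficients `[1.0, -2.0, 0.5]`, the sample size, and the helper name `mse` are all hypothetical.

The sketch computes $(E[xx^T])^{-1} E[yx]$ with `np.linalg.solve` instead of forming the inverse explicitly. It then checks that random perturbations of the coefficients never lower the average squared error.

```python
import numpy as np

rng = np.random.default_rng(1)
n, N = 3, 100_000  # n regressors, N simulated draws (hypothetical sizes)

# Hypothetical data-generating process, chosen only for illustration.
X = rng.normal(size=(N, n))  # each row is one draw of the random vector x
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(size=N)

def mse(beta):
    """Sample analogue of E[(y - beta^T x)^2]."""
    return np.mean((y - X @ beta) ** 2)

# Sample analogues of E[x x^T] and E[y x].
Exx = X.T @ X / N
Eyx = X.T @ y / N

# (E[xx^T])^{-1} E[yx], computed by solving a linear system.
beta_ols = np.linalg.solve(Exx, Eyx)

# No random perturbation of the coefficients should lower the sample MSE.
losses = [mse(beta_ols + 0.05 * rng.normal(size=n)) for _ in range(200)]

print("beta_ols:", beta_ols)
print(f"MSE at beta_ols: {mse(beta_ols):.4f}, smallest perturbed MSE: {min(losses):.4f}")
```

The coefficients should also agree with `np.linalg.lstsq(X, y, rcond=None)[0]`. Minimizing the sample average of squared residuals is exactly ordinary least squares, which is the problem that solver addresses.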