Answered step by step

Verified Expert Solution

Question

1 Approved Answer

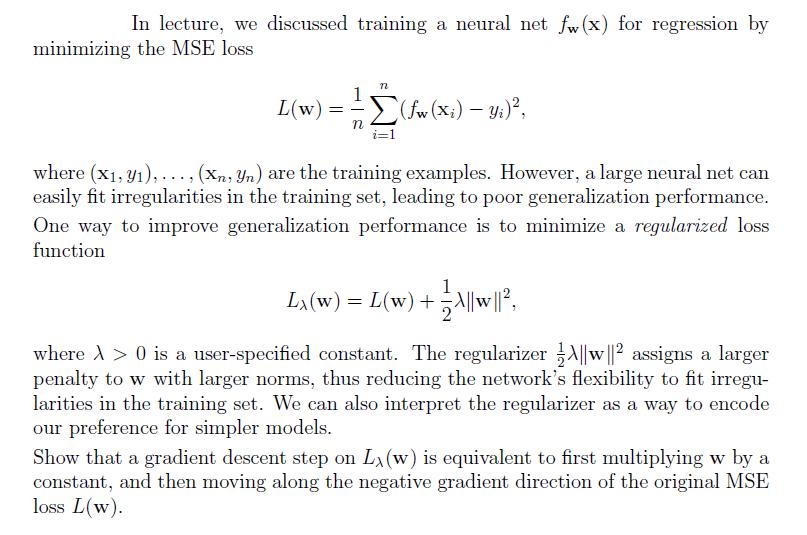

In lecture, we discussed training a neural net fw (x) for regression by minimizing the MSE loss L(w) = n 72 (fw (Xi) -

In lecture, we discussed training a neural net fw (x) for regression by minimizing the MSE loss L(w) = n 72 (fw (Xi) - Yi) , i=1 where (x1, y),..., (Xn, Yn) are the training examples. However, a large neural net can easily fit irregularities in the training set, leading to poor generalization performance. One way to improve generalization performance is to minimize a regularized loss function 1 La(w) = L(w) + A||w||, where A> 0 is a user-specified constant. The regularizer ||w||2 assigns a larger penalty to w with larger norms, thus reducing the network's flexibility to fit irregu- larities in the training set. We can also interpret the regularizer as a way to encode our preference for simpler models. Show that a gradient descent step on Lx (w) is equivalent to first multiplying w by a constant, and then moving along the negative gradient direction of the original MSE loss L(w).

Step by Step Solution

There are 3 Steps involved in it

Step: 1

To show that a gradient descent step on Lw is equivalent to first multiplying w by a constant and th...

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started