Question

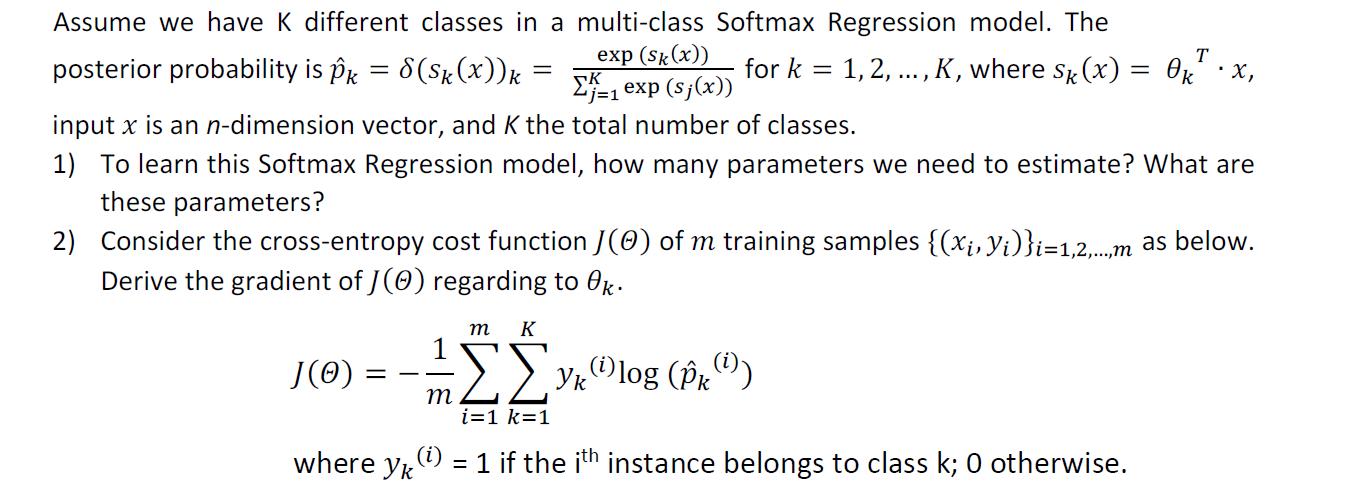

Assume we have $K$ different classes in a multi-class Softmax Regression model. The posterior probability is

$$\hat{p}_k = \sigma(s(x))_k = \frac{\exp(s_k(x))}{\sum_{j=1}^{K} \exp(s_j(x))}, \quad k = 1, 2, \ldots, K,$$

where $s_k(x) = \theta_k^T \cdot x$, the input $x$ is an $n$-dimensional vector, and $K$ is the total number of classes.

1) To learn this Softmax Regression model, how many parameters do we need to estimate? What are these parameters?

2) Consider the cross-entropy cost function $J(\theta)$ of $m$ training samples $\{(x_i, y_i)\}_{i=1,2,\ldots,m}$ as below. Derive the gradient of $J(\theta)$ with respect to $\theta_k$.

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \sum_{k=1}^{K} y_k^{(i)} \log\left(\hat{p}_k^{(i)}\right)$$

where $y_k^{(i)} = 1$ if the $i$th instance belongs to class $k$; 0 otherwise.
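To make the definitions in the question concrete, here is a minimal NumPy sketch of the softmax probabilities and the cross-entropy cost. It rests on assumptions that are not stated in the question: the cost is averaged with a factor of $-1/m$, there is no separate bias term (so the $K$ vectors $\theta_k$ are stacked into a $K \times n$ matrix), and the names `softmax_probs`, `cross_entropy_cost`, `Theta`, `X`, and `Y` are hypothetical.

```python
import numpy as np

def softmax_probs(Theta, X):
    """Return an (m, K) matrix of p_k for each sample.

    Theta: (K, n) matrix whose k-th row is theta_k (assumed layout, no bias).
    X:     (m, n) matrix whose i-th row is the input x_i.
    """
    scores = X @ Theta.T                                   # s_k(x_i) = theta_k^T x_i
    scores = scores - scores.max(axis=1, keepdims=True)    # shift for numerical stability
    exp_scores = np.exp(scores)
    return exp_scores / exp_scores.sum(axis=1, keepdims=True)

def cross_entropy_cost(Theta, X, Y):
    """Cross-entropy cost, assuming the -1/m averaging convention.

    Y: (m, K) one-hot matrix with Y[i, k] = 1 if sample i belongs to class k.
    """
    P = softmax_probs(Theta, X)
    return -np.mean(np.sum(Y * np.log(P), axis=1))

# Small illustrative problem: m samples, n features, K classes.
rng = np.random.default_rng(0)
m, n, K = 5, 3, 4
X = rng.normal(size=(m, n))
labels = rng.integers(0, K, size=m)
Y = np.eye(K)[labels]            # one-hot encoding of the labels

Theta = np.zeros((K, n))         # all-zero weights give uniform probabilities
P = softmax_probs(Theta, X)
cost = cross_entropy_cost(Theta, X, Y)

print("number of weights:", Theta.size)
print("cost at Theta = 0:", cost)
```

With all weights at zero every class gets probability $1/K$, so the cost equals $\log K$, which is a convenient sanity check for an implementation.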

Step by Step Solution

There are 3 Steps involved in it

Step: 1

The image you have provided contains text relating to a question on the Softmax Regression model specifically pertaining to the number of parameters n...
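For reference, the standard textbook answer to both parts can be sketched as follows. This is a derivation under stated assumptions, not necessarily the one given in the original solution: it assumes no separate bias term, a cost averaged with the factor $-1/m$, and one-hot labels so that $\sum_{\ell} y_\ell^{(i)} = 1$. The index $\ell$ and the indicator $\mathbb{1}[\cdot]$ are notation introduced here.

```latex
% Part 1: one weight vector per class (assuming no separate bias term).
\begin{align*}
\theta_k \in \mathbb{R}^{n},\quad k = 1, \ldots, K
\quad &\Longrightarrow \quad K \cdot n \text{ parameters in total} \\
&\phantom{\Longrightarrow} \quad \bigl(K(n+1) \text{ if each class also has a bias}\bigr)
\end{align*}

% Part 2: gradient of the cross-entropy cost with respect to theta_k.
\begin{align*}
\frac{\partial \log \hat{p}_\ell}{\partial s_k}
  &= \mathbb{1}[\ell = k] - \hat{p}_k,
  \qquad \nabla_{\theta_k} s_k(x) = x \\[4pt]
\nabla_{\theta_k} J(\theta)
  &= -\frac{1}{m} \sum_{i=1}^{m} \sum_{\ell=1}^{K}
     y_\ell^{(i)} \bigl(\mathbb{1}[\ell = k] - \hat{p}_k^{(i)}\bigr)\, x_i \\
  &= -\frac{1}{m} \sum_{i=1}^{m}
     \bigl(y_k^{(i)} - \hat{p}_k^{(i)}\bigr)\, x_i
     \qquad \text{since } \textstyle\sum_{\ell} y_\ell^{(i)} = 1 \\
  &= \frac{1}{m} \sum_{i=1}^{m}
     \bigl(\hat{p}_k^{(i)} - y_k^{(i)}\bigr)\, x_i
\end{align*}
```

The result has the familiar "prediction minus target, times input" form, the same shape as the gradient in logistic regression.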

Step: 2

Step: 3
