Can someone please help me with the following Java question?

Algorithm Description:

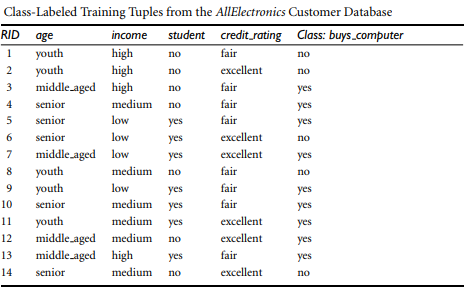

The algorithm is called with three parameters: D, attribute list, and Attribute selection method. We refer to D as a data partition. Initially, it is the complete set of training tuples and their associated class labels. The parameter attribute list is a list of attributes describing the tuples. Attribute selection method specifies a heuristic procedure for selecting the attribute that best discriminates the given tuples according to class. This procedure employs an attribute selection measure such as information gain or the Gini index. Whether the tree is strictly binary is generally driven by the attribute selection measure. Some attribute selection measures, such as the Gini index, enforce the resulting tree to be binary. Others, like information gain, do not, therein allowing multiway splits (i.e., two or more branches to be grown from a node).

The tree starts as a single node, N, representing the training tuples in D (step 1).
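For reference, the information gain named above as an attribute selection measure is commonly defined as follows. The symbols are notation assumed here rather than taken from the question: $m$ is the number of classes, $p_i$ is the fraction of tuples in $D$ that belong to class $i$, and $D_1, \dots, D_v$ are the partitions of $D$ induced by the $v$ outcomes of a discrete-valued attribute $A$.

$$
\begin{aligned}
\mathrm{Info}(D) &= -\sum_{i=1}^{m} p_i \log_2 p_i \\
\mathrm{Info}_A(D) &= \sum_{j=1}^{v} \frac{|D_j|}{|D|}\,\mathrm{Info}(D_j) \\
\mathrm{Gain}(A) &= \mathrm{Info}(D) - \mathrm{Info}_A(D)
\end{aligned}
$$

Under this definition, the attribute with the largest $\mathrm{Gain}(A)$ would be chosen as the splitting attribute.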

Algorithm: Generate decision tree. Generate a decision tree from the training tuples of data partition, D.

Input:

Data partition, D, which is a set of training tuples and their associated class labels;

attribute list, the set of candidate attributes;

Attribute selection method, a procedure to determine the splitting criterion that best partitions the data tuples into individual classes. This criterion consists of a splitting attribute and, possibly, either a split-point or splitting subset.

Output: A decision tree.

Method:

(1) create a node N;

(2) if tuples in D are all of the same class, C, then

(3) return N as a leaf node labeled with the class C;

(4) if attribute list is empty then

(5) return N as a leaf node labeled with the majority class in D; // majority voting

(6) apply Attribute selection method(D, attribute list) to find the best splitting criterion;

(7) label node N with splitting criterion;

(8) if splitting attribute is discrete-valued and multiway splits allowed then // not restricted to binary trees

(9) attribute list ← attribute list − splitting attribute; // remove splitting attribute

(10) for each outcome j of splitting criterion // partition the tuples and grow subtrees for each partition

(11) let Dj be the set of data tuples in D satisfying outcome j; // a partition

(12) if Dj is empty then

(13) attach a leaf labeled with the majority class in D to node N;

(14) else attach the node returned by Generate decision tree(Dj, attribute list) to node N;

endfor

(15) return N;
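Steps (1) to (15) might be sketched in Java roughly as follows, for discrete-valued attributes with multiway splits only. All names here (`DecisionTreeSketch`, `Tuple`, `Node`, `domains`, `selector`) are hypothetical and not part of the question. The `domains` map, which lists every possible value of each attribute, is an added assumption: without it, the empty-partition case in step (12) could never arise. The sketch also assumes that D is never empty when `generate` is called.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiFunction;

final class DecisionTreeSketch {

    /** Hypothetical tuple type: attribute name -> value, plus the class label. */
    static final class Tuple {
        final Map<String, String> values;
        final String label;
        Tuple(Map<String, String> values, String label) {
            this.values = values;
            this.label = label;
        }
    }

    /** Hypothetical node type: a leaf has a label, an inner node a splitting attribute. */
    static final class Node {
        String label;
        String splitAttribute;
        final Map<String, Node> children = new LinkedHashMap<>();
    }

    /** Majority class in d; ties go to the class seen first. */
    static String majorityClass(List<Tuple> d) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (Tuple t : d) counts.merge(t.label, 1, Integer::sum);
        String best = null;
        for (Map.Entry<String, Integer> e : counts.entrySet())
            if (best == null || e.getValue() > counts.get(best)) best = e.getKey();
        return best;
    }

    /** Assumes d is non-empty; selector stands in for Attribute selection method. */
    static Node generate(List<Tuple> d, List<String> attributeList,
                         Map<String, List<String>> domains,
                         BiFunction<List<Tuple>, List<String>, String> selector) {
        Node n = new Node();                                      // step 1
        boolean sameClass = true;
        for (Tuple t : d) if (!t.label.equals(d.get(0).label)) sameClass = false;
        if (sameClass) { n.label = d.get(0).label; return n; }    // steps 2-3
        if (attributeList.isEmpty()) {                            // steps 4-5
            n.label = majorityClass(d);
            return n;
        }
        String a = selector.apply(d, attributeList);              // step 6
        n.splitAttribute = a;                                     // step 7
        List<String> remaining = new ArrayList<>(attributeList);  // steps 8-9
        remaining.remove(a);
        for (String outcome : domains.get(a)) {                   // step 10
            List<Tuple> dj = new ArrayList<>();                   // step 11
            for (Tuple t : d) if (outcome.equals(t.values.get(a))) dj.add(t);
            if (dj.isEmpty()) {                                   // steps 12-13
                Node leaf = new Node();
                leaf.label = majorityClass(d);
                n.children.put(outcome, leaf);
            } else {                                              // step 14
                n.children.put(outcome, generate(dj, remaining, domains, selector));
            }
        }
        return n;                                                 // step 15
    }
}
```

Passing the selection method as a function keeps the recursion independent of the measure, so an information-gain selector or any other heuristic could be plugged in without changing `generate`.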

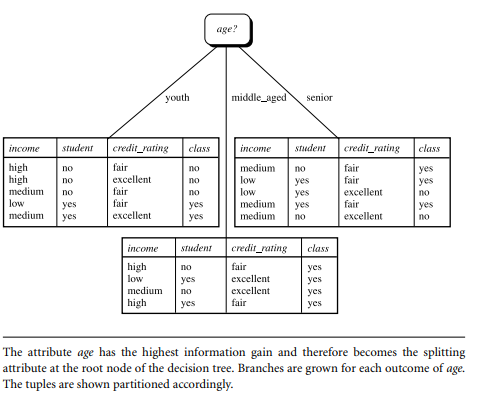

Tree:

Table:

Using the above, write a Java program that implements the basic algorithm for inducing a decision tree.

Note the following requirements:

You are not required to implement the algorithm fully; implement only the partition that occurs at the root node, i.e. the generation of the first level after the root. Use the description and the tree as a reference for the expected output.

Consider the splitting attribute to be discrete-valued and use information gain as the attribute selection measure.

Your implementation should use the training tuples given in the table. When run, the program should output the information shown in the tree (the output does not need to look exactly as shown there, but it must convey the same meaning).
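One possible starting point for these requirements is sketched below: it computes the information gain of each discrete attribute, picks the one with the highest gain, and prints the partition of the tuples under each outcome. The class name `RootSplit`, the row layout (class label in the last column), and above all the four rows in `main` are placeholders invented for illustration; they are not the tuples from the table and would need to be replaced with them.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class RootSplit {

    /** Entropy of the class labels, assumed to sit in the last column of each row. */
    static double info(List<String[]> rows) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String[] r : rows) counts.merge(r[r.length - 1], 1, Integer::sum);
        double h = 0.0;
        for (int c : counts.values()) {
            double p = (double) c / rows.size();
            h -= p * Math.log(p) / Math.log(2);   // log base 2
        }
        return h;
    }

    /** Groups rows by their value in column attr, keeping first-seen order. */
    static Map<String, List<String[]>> partition(List<String[]> rows, int attr) {
        Map<String, List<String[]>> parts = new LinkedHashMap<>();
        for (String[] r : rows)
            parts.computeIfAbsent(r[attr], k -> new ArrayList<>()).add(r);
        return parts;
    }

    /** Information gain of splitting rows on column attr. */
    static double gain(List<String[]> rows, int attr) {
        double expected = 0.0;
        for (List<String[]> part : partition(rows, attr).values())
            expected += (double) part.size() / rows.size() * info(part);
        return info(rows) - expected;
    }

    /** Index of the attribute column with the highest gain; the first wins ties. */
    static int bestAttribute(List<String[]> rows) {
        int best = 0;
        for (int a = 1; a < rows.get(0).length - 1; a++)
            if (gain(rows, a) > gain(rows, best)) best = a;
        return best;
    }

    public static void main(String[] args) {
        // Placeholder schema and rows, NOT the training tuples from the table.
        String[] header = {"shape", "size", "class"};
        List<String[]> rows = new ArrayList<>();
        rows.add(new String[] {"round", "small", "yes"});
        rows.add(new String[] {"round", "large", "yes"});
        rows.add(new String[] {"square", "small", "no"});
        rows.add(new String[] {"square", "large", "no"});

        int best = bestAttribute(rows);
        System.out.println(header[best] + "?");
        for (Map.Entry<String, List<String[]>> e : partition(rows, best).entrySet()) {
            System.out.println("  " + e.getKey() + ":");
            for (String[] r : e.getValue())
                System.out.println("    " + String.join(", ", r));
        }
    }
}
```

Because only the first level is required, the sketch stops after one partition instead of recursing. Printing each branch with its rows is one way to convey the same information as the tree.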

Please help.

[Figure: root node "age?" with one branch per outcome of age (the labels middle_aged and senior are legible); each branch holds a table of its tuples with the columns income, student, credit rating, and class.]

The attribute age has the highest information gain and therefore becomes the splitting attribute at the root node of the decision tree. Branches are grown for each outcome of age. The tuples are shown partitioned accordingly.
