Answered step by step

Verified Expert Solution

Question

1 Approved Answer

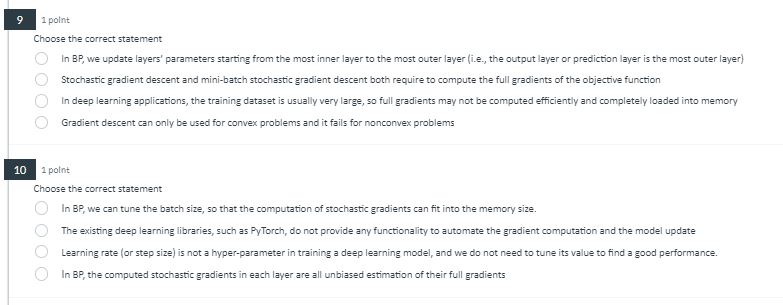

Choose the correct statement In BP , we update layers' parameters starting from the most inner layer to the most outer layer ( i .

Choose the correct statement

In BP we update layers' parameters starting from the most inner layer to the most outer layer ie the output layer or prediction layer is the most outer layer

Stochastic gradient descent and minibatch stochastic gradient descent both require to compute the full gradients of the objective function

In deep learning applications, the training dataset is usually very large, so full gradients may not be computed efficiently and completely loaded into memory

Gradient descent can only be used for convex problems and it fails for nonconvex problems

point

Choose the correct statement

In we can tune the batch size, so that the computation of stochastic gradients can fit into the memory size.

The existing deep learning libraries, such as PyTorch, do not provide any functionality to automate the gradient computation and the model update

Learning rate or step size is not a hyperparameter in training a deep learning model, and we do not need to tune its value to find a good performance.

In BP the computed stochastic gradients in each layer are all unbiased estimation of their full gradients

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started