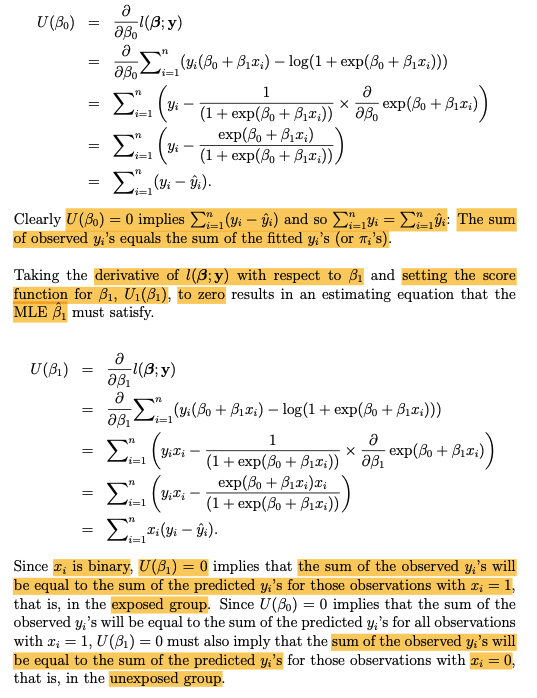

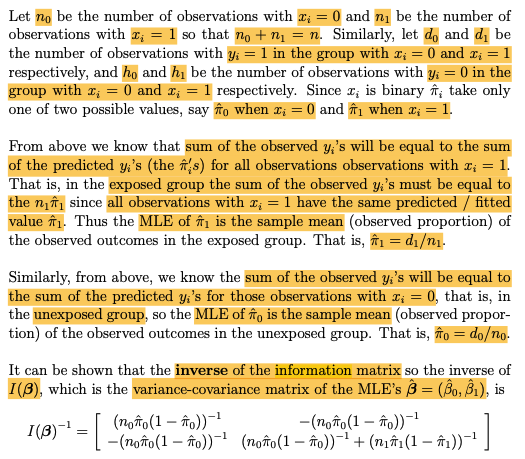

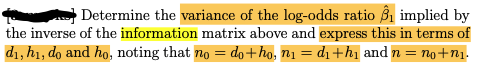

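Consider $i = 1, \dots, n$ independently sampled data points, each consisting of measurements of a binary (0/1) exposure variable $x_i$ and a binary outcome $y_i$. Let $\pi_i = E(y_i)$ be the expected value of $y_i$ (interpretable as the population mean of $y$ for the subset of the population with covariate pattern identical to that of observation $i$), $\bar{x} = n^{-1}\sum_{i=1}^{n} x_i$ and $\bar{y} = n^{-1}\sum_{i=1}^{n} y_i$.

A simple logistic regression model for $y_i$ given $x_i$ is

$$\operatorname{logit}(\pi_i) = \log\left(\frac{\pi_i}{1 - \pi_i}\right) = \beta_0 + \beta_1 x_i,$$

where $\beta = (\beta_0, \beta_1)$ is a vector of regression coefficients. From this we can form the likelihood function $L(\beta, y)$, the joint probability of the data vector $y = (y_1, y_2, \dots, y_n)$, as

$$L(\beta, y) = \prod_{i=1}^{n} \frac{\left[\exp(\beta_0 + \beta_1 x_i)\right]^{y_i}}{1 + \exp(\beta_0 + \beta_1 x_i)}.$$

Let $\hat\beta_0$ and $\hat\beta_1$ be the maximum likelihood estimates (MLEs) of $\beta_0$ and $\beta_1$ respectively. Let

$$\hat\pi_i = \frac{\exp(\hat\beta_0 + \hat\beta_1 x_i)}{1 + \exp(\hat\beta_0 + \hat\beta_1 x_i)}$$

be the MLE or fitted value of $\pi_i$ (equivalently, the fitted value of $y_i$, denoted $\hat y_i$). The log-likelihood function $\ell(\beta; y)$ is

$$\ell(\beta; y) = \sum_{i=1}^{n} \left[ y_i(\beta_0 + \beta_1 x_i) - \log\bigl(1 + \exp(\beta_0 + \beta_1 x_i)\bigr) \right].$$

Taking the derivative of $\ell(\beta; y)$ with respect to $\beta_0$ generates the score function for $\beta_0$, $U_0(\beta_0)$. Setting $U_0(\beta_0)$ to zero generates an estimating equation that the MLE $\hat\beta_0$ must satisfy.

$$
\begin{aligned}
U(\beta_0) &= \frac{\partial}{\partial\beta_0}\,\ell(\beta; y) \\
&= \frac{\partial}{\partial\beta_0} \sum_{i=1}^{n} \left[ y_i(\beta_0 + \beta_1 x_i) - \log\bigl(1 + \exp(\beta_0 + \beta_1 x_i)\bigr) \right] \\
&= \sum_{i=1}^{n} \left[ y_i - \frac{1}{1 + \exp(\beta_0 + \beta_1 x_i)} \times \frac{\partial}{\partial\beta_0} \exp(\beta_0 + \beta_1 x_i) \right] \\
&= \sum_{i=1}^{n} \left[ y_i - \frac{\exp(\beta_0 + \beta_1 x_i)}{1 + \exp(\beta_0 + \beta_1 x_i)} \right] \\
&= \sum_{i=1}^{n} (y_i - \hat y_i).
\end{aligned}
$$

Clearly $U(\beta_0) = 0$ implies $\sum_{i=1}^{n} (y_i - \hat y_i) = 0$ and so $\sum_{i=1}^{n} y_i = \sum_{i=1}^{n} \hat y_i$. The sum of the observed $y_i$'s equals the sum of the fitted $\hat y_i$'s (or $\hat\pi_i$'s).

Taking the derivative of $\ell(\beta; y)$ with respect to $\beta_1$ and setting the score function for $\beta_1$, $U_1(\beta_1)$, to zero results in an estimating equation that the MLE $\hat\beta_1$ must satisfy.

$$
\begin{aligned}
U(\beta_1) &= \frac{\partial}{\partial\beta_1}\,\ell(\beta; y) \\
&= \frac{\partial}{\partial\beta_1} \sum_{i=1}^{n} \left[ y_i(\beta_0 + \beta_1 x_i) - \log\bigl(1 + \exp(\beta_0 + \beta_1 x_i)\bigr) \right] \\
&= \sum_{i=1}^{n} \left[ y_i x_i - \frac{1}{1 + \exp(\beta_0 + \beta_1 x_i)} \times \frac{\partial}{\partial\beta_1} \exp(\beta_0 + \beta_1 x_i) \right] \\
&= \sum_{i=1}^{n} \left[ y_i x_i - \frac{\exp(\beta_0 + \beta_1 x_i)\, x_i}{1 + \exp(\beta_0 + \beta_1 x_i)} \right] \\
&= \sum_{i=1}^{n} x_i (y_i - \hat y_i).
\end{aligned}
$$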
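The two estimating equations can be checked numerically. The following is a minimal sketch, not part of the original notes: it fits the two-parameter model by Newton-Raphson using only the Python standard library, on a small made-up data set (10 unexposed and 10 exposed observations; the counts are purely illustrative). The function name `fit_logistic` and the fixed iteration count are assumptions chosen for the example.

```python
import math

def fit_logistic(x, y, iters=25):
    """Newton-Raphson for logit(pi_i) = b0 + b1 * x_i (two parameters)."""
    b0, b1 = 0.0, 0.0
    for _ in range(iters):
        pi = [1.0 / (1.0 + math.exp(-(b0 + b1 * xi))) for xi in x]
        # Score vector: U0 = sum(y_i - pi_i), U1 = sum(x_i * (y_i - pi_i))
        u0 = sum(yi - p for yi, p in zip(y, pi))
        u1 = sum(xi * (yi - p) for xi, yi, p in zip(x, y, pi))
        # Information matrix entries, with weights w_i = pi_i * (1 - pi_i)
        w = [p * (1.0 - p) for p in pi]
        i00 = sum(w)
        i01 = sum(xi * wi for xi, wi in zip(x, w))
        i11 = sum(xi * xi * wi for xi, wi in zip(x, w))
        det = i00 * i11 - i01 * i01
        # Newton step: beta <- beta + I^{-1} U (2x2 inverse written out)
        b0 += (i11 * u0 - i01 * u1) / det
        b1 += (-i01 * u0 + i00 * u1) / det
    return b0, b1

# Hypothetical data: 3 of 10 unexposed and 6 of 10 exposed have y = 1.
x = [0] * 10 + [1] * 10
y = [1] * 3 + [0] * 7 + [1] * 6 + [0] * 4

b0_hat, b1_hat = fit_logistic(x, y)
fitted = [1.0 / (1.0 + math.exp(-(b0_hat + b1_hat * xi))) for xi in x]

# U(beta_0) = 0: observed and fitted totals agree over all observations.
gap_all = sum(y) - sum(fitted)
# U(beta_1) = 0: observed and fitted totals agree in the exposed group.
gap_exposed = sum(xi * (yi - fi) for xi, yi, fi in zip(x, y, fitted))
print(gap_all, gap_exposed)
```

Both gaps should be zero up to floating-point error. The sketch assumes both outcomes occur in each exposure group; otherwise the MLE does not exist and the iteration diverges.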
Since $x_i$ is binary, $U(\beta_1) = 0$ implies that the sum of the observed $y_i$'s will be equal to the sum of the predicted $\hat y_i$'s for those observations with $x_i = 1$, that is, in the exposed group. Since $U(\beta_0) = 0$ implies that the sum of the observed $y_i$'s will be equal to the sum of the predicted $\hat y_i$'s over all observations, the two equations together also imply that the sum of the observed $y_i$'s will be equal to the sum of the predicted $\hat y_i$'s for those observations with $x_i = 0$, that is, in the unexposed group.

Let $n_0$ be the number of observations with $x_i = 0$ and $n_1$ be the number of observations with $x_i = 1$, so that $n_0 + n_1 = n$. Similarly, let $d_0$ and $d_1$ be the number of observations with $y_i = 1$ in the group with $x_i = 0$ and $x_i = 1$ respectively, and $h_0$ and $h_1$ be the number of observations with $y_i = 0$ in the group with $x_i = 0$ and $x_i = 1$ respectively.

Since $x_i$ is binary, $\hat\pi_i$ takes only one of two possible values, say $\hat\pi_0$ when $x_i = 0$ and $\hat\pi_1$ when $x_i = 1$. From above we know that the sum of the observed $y_i$'s will be equal to the sum of the predicted $\hat y_i$'s (the $\hat\pi_i$'s) for all observations with $x_i = 1$. That is, in the exposed group the sum of the observed $y_i$'s, $d_1$, must be equal to $n_1 \hat\pi_1$, since all observations with $x_i = 1$ have the same predicted (fitted) value $\hat\pi_1$. Thus the MLE of $\pi_1$ is the sample mean (observed proportion) of the observed outcomes in the exposed group. That is, $\hat\pi_1 = d_1 / n_1$.

Similarly, from above, we know the sum of the observed $y_i$'s will be equal to the sum of the predicted $\hat y_i$'s for those observations with $x_i = 0$, that is, in the unexposed group, so the MLE of $\pi_0$ is the sample mean (observed proportion) of the observed outcomes in the unexposed group. That is, $\hat\pi_0 = d_0 / n_0$.
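Because the fitted values are just the two group proportions, the coefficient estimates can be read off the 2×2 table of counts without any iteration. The sketch below illustrates this under the model's own definitions, $\operatorname{logit}(\hat\pi_0) = \hat\beta_0$ and $\operatorname{logit}(\hat\pi_1) = \hat\beta_0 + \hat\beta_1$. The function name `mle_from_counts` and the example counts are hypothetical, and all four counts are assumed to be non-zero.

```python
import math

def mle_from_counts(d0, h0, d1, h1):
    """Closed-form MLEs for the binary-exposure logistic model.

    d0, h0: counts of y = 1 and y = 0 in the unexposed group (x = 0)
    d1, h1: counts of y = 1 and y = 0 in the exposed group (x = 1)
    """
    n0, n1 = d0 + h0, d1 + h1
    pi0_hat = d0 / n0  # observed proportion, unexposed group
    pi1_hat = d1 / n1  # observed proportion, exposed group
    # logit(pi0_hat) = b0_hat; logit(pi1_hat) = b0_hat + b1_hat
    b0_hat = math.log(pi0_hat / (1.0 - pi0_hat))
    b1_hat = math.log(pi1_hat / (1.0 - pi1_hat)) - b0_hat
    return pi0_hat, pi1_hat, b0_hat, b1_hat

# Hypothetical counts, for illustration only.
pi0_hat, pi1_hat, b0_hat, b1_hat = mle_from_counts(d0=3, h0=7, d1=6, h1=4)
print(pi0_hat, pi1_hat, b0_hat, b1_hat)
```

Written this way, $\hat\beta_1$ is the log of the sample odds ratio $(d_1 h_0)/(h_1 d_0)$.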
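It can be shown that the inverse of the information matrix $I(\beta)$, which is the variance-covariance matrix of the MLEs $\hat\beta = (\hat\beta_0, \hat\beta_1)$, is

$$
I(\beta)^{-1} =
\begin{pmatrix}
\bigl(n_0 \hat\pi_0 (1 - \hat\pi_0)\bigr)^{-1} & -\bigl(n_0 \hat\pi_0 (1 - \hat\pi_0)\bigr)^{-1} \\
-\bigl(n_0 \hat\pi_0 (1 - \hat\pi_0)\bigr)^{-1} & \bigl(n_0 \hat\pi_0 (1 - \hat\pi_0)\bigr)^{-1} + \bigl(n_1 \hat\pi_1 (1 - \hat\pi_1)\bigr)^{-1}
\end{pmatrix}.
$$

Determine the variance of the log-odds ratio $\hat\beta_1$ implied by the inverse of the information matrix above and express this in terms of $d_1$, $h_1$, $d_0$ and $h_0$, noting that $n_0 = d_0 + h_0$, $n_1 = d_1 + h_1$ and $n = n_0 + n_1$.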
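One way to check a candidate answer numerically is to build the inverse information matrix from the counts and read off its lower-right entry. The sketch below does this for hypothetical counts and compares the result with the closed form $1/d_1 + 1/h_1 + 1/d_0 + 1/h_0$. That closed form is a standard result, included here only as a check and not as the notes' own stated solution. The function name `inverse_information` is an assumption made for the example.

```python
def inverse_information(d0, h0, d1, h1):
    """Inverse information matrix for (b0, b1), evaluated at the
    fitted proportions pi0_hat = d0/n0 and pi1_hat = d1/n1."""
    n0, n1 = d0 + h0, d1 + h1
    p0, p1 = d0 / n0, d1 / n1
    v0 = 1.0 / (n0 * p0 * (1.0 - p0))  # (n0 * pi0 * (1 - pi0))^-1
    v1 = 1.0 / (n1 * p1 * (1.0 - p1))  # (n1 * pi1 * (1 - pi1))^-1
    return [[v0, -v0],
            [-v0, v0 + v1]]

# Hypothetical counts, for illustration only.
d0, h0, d1, h1 = 3, 7, 6, 4
inv = inverse_information(d0, h0, d1, h1)

var_b1 = inv[1][1]  # variance of the log-odds ratio estimate
closed_form = 1 / d1 + 1 / h1 + 1 / d0 + 1 / h0
print(var_b1, closed_form)
```

The two printed values should agree up to floating-point error, because $n_0 \hat\pi_0 (1 - \hat\pi_0) = d_0 h_0 / n_0$, whose reciprocal is $1/d_0 + 1/h_0$, and likewise for the exposed group.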