Answered step by step

Verified Expert Solution

Question

1 Approved Answer

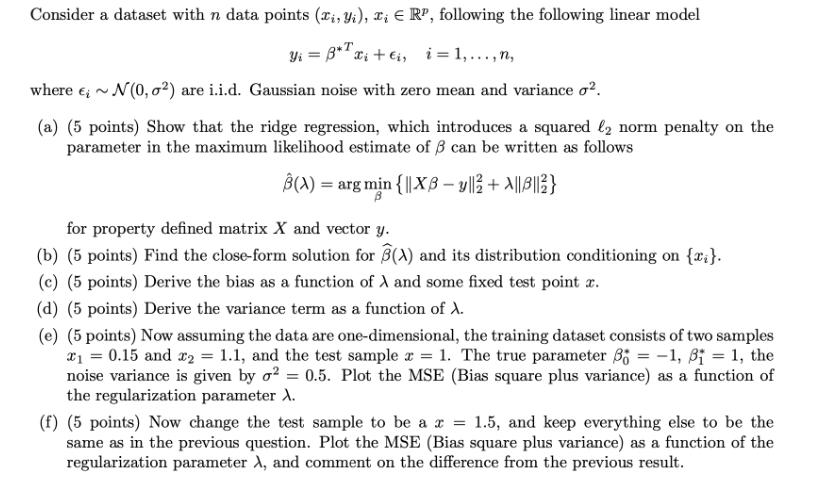

Consider a dataset with n data points (x, y), x, ERP, following the following linear model +t, i=1. where ; N(0,02) are i.i.d. Gaussian

Consider a dataset with n data points (x, y), x, ERP, following the following linear model +t, i=1. where ; N(0,02) are i.i.d. Gaussian noise with zero mean and variance o. (a) (5 points) Show that the ridge regression, which introduces a squared 2 norm penalty on the parameter in the maximum likelihood estimate of can be written as follows B(A) = arg min {XB-y|| + ||||} for property defined matrix X and vector y. (b) (5 points) Find the close-form solution for B(A) and its distribution conditioning on {x}. (c) (5 points) Derive the bias as a function of A and some fixed test point x. (d) (5 points) Derive the variance term as a function of A. (e) (5 points) Now assuming the data are one-dimensional, the training dataset consists of two samples x = 0.15 and x2 = 1.1, and the test sample x = 1. The true parameter 3 = -1, B = 1, the noise variance is given by 2 = 0.5. Plot the MSE (Bias square plus variance) as a function of the regularization parameter A. (f) (5 points) Now change the test sample to be a x = 1.5, and keep everything else to be the same as in the previous question. Plot the MSE (Bias square plus variance) as a function of the regularization parameter A, and comment on the difference from the previous result.

Step by Step Solution

★★★★★

3.40 Rating (144 Votes )

There are 3 Steps involved in it

Step: 1

a To show that ridge regression introduces a squared L2 norm penalty on the parameter vector we star...

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started