Question

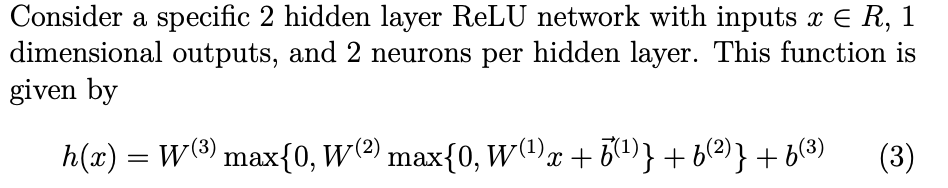

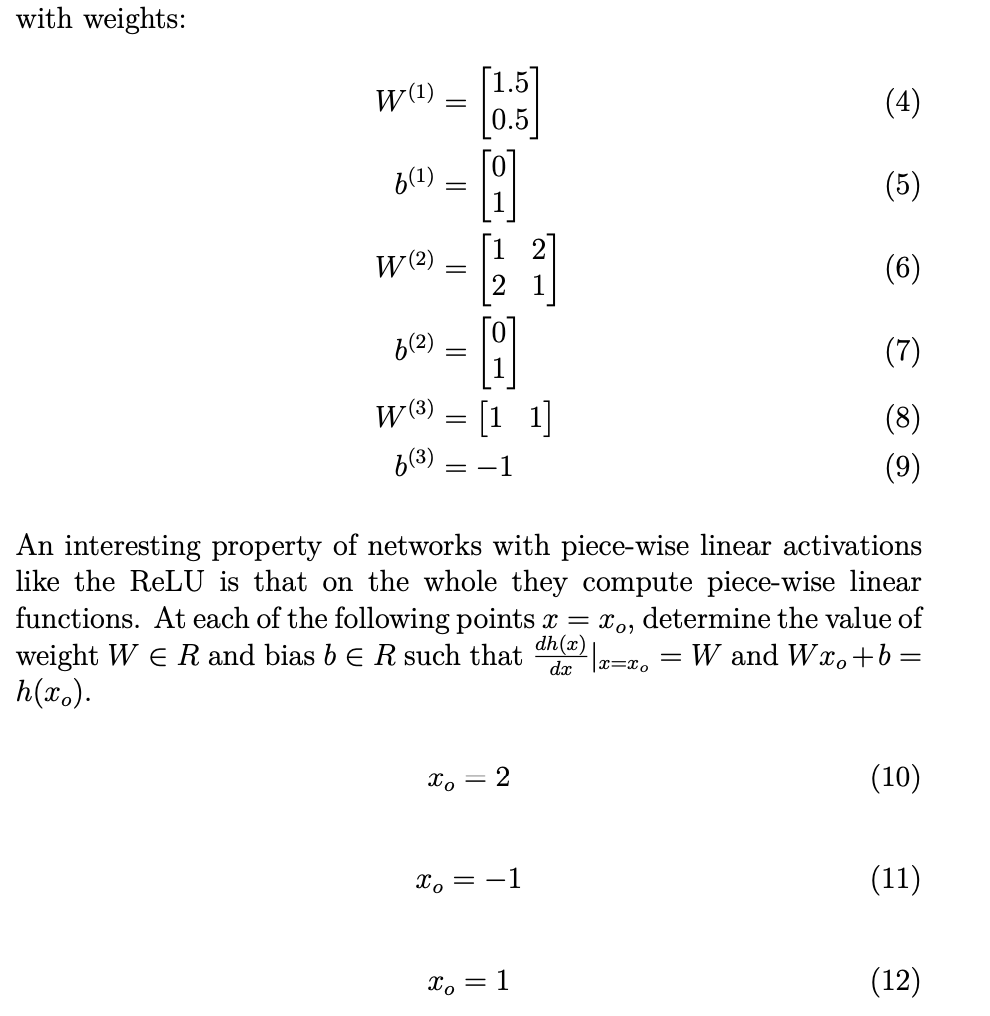

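Consider a specific 2-hidden-layer ReLU network with inputs $x \in \mathbb{R}$, 1-dimensional outputs, and 2 neurons per hidden layer. This function is given by

$$h(x) = W^{(3)} \max\{0,\; W^{(2)} \max\{0,\; W^{(1)} x + b^{(1)}\} + b^{(2)}\} + b^{(3)} \tag{3}$$

with weights $W^{(1)} \in \mathbb{R}^{2 \times 1}$, $b^{(1)} \in \mathbb{R}^{2}$, $W^{(2)} \in \mathbb{R}^{2 \times 2}$, $b^{(2)} \in \mathbb{R}^{2}$, $W^{(3)} \in \mathbb{R}^{1 \times 2}$ as given in (4) to (8) (the entries are garbled in this copy and read only "[1.5] 0.5 A 21 [11]") and

$$b^{(3)} = 1.$$

An interesting property of networks with piecewise-linear activations such as the ReLU is that, as a whole, they compute piecewise-linear functions. At each of the following points $x = x_0$, determine the weight $W \in \mathbb{R}$ and bias $b \in \mathbb{R}$ such that

$$W = \left.\frac{dh(x)}{dx}\right|_{x = x_0} \quad \text{and} \quad W x_0 + b = h(x_0).$$

$$x_0 = 2 \tag{10}$$

$$x_0 = -1 \tag{11}$$

$$x_0 = 1 \tag{12}$$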
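A minimal sketch of how such a local linear piece can be computed: near $x_0$ every ReLU is either "on" or "off", so it acts as multiplication by a fixed 0/1 diagonal matrix $D_1$ or $D_2$. Then $h(x) = W^{(3)} D_2 \big(W^{(2)} D_1 (W^{(1)} x + b^{(1)}) + b^{(2)}\big) + b^{(3)}$ on that region, which gives $W = W^{(3)} D_2 W^{(2)} D_1 W^{(1)}$ and $b = h(x_0) - W x_0$. Because the problem's weight values did not survive, the matrices below are hypothetical placeholders chosen only for illustration (only $b^{(3)} = 1$ comes from the problem), and the names `h` and `local_linear` are likewise assumptions. At a kink ($z = 0$ for some unit) $h$ is not differentiable; the sketch simply adopts the convention ReLU'(0) = 0 there.

```python
# Hypothetical placeholder weights: NOT the values from the problem statement.
# Only b3 = 1 is taken from the problem; replace the rest with (4)-(8).
W1 = [1.0, -1.0]                 # 2x1 matrix stored as a column of 2 entries
b1 = [0.0, 1.0]
W2 = [[1.0, 0.5], [2.0, -1.0]]   # 2x2 matrix
b2 = [0.0, -1.0]
W3 = [1.0, 1.0]                  # 1x2 row vector
b3 = 1.0


def relu(z):
    return max(0.0, z)


def h(x):
    """Forward pass h(x) = W3 relu(W2 relu(W1 x + b1) + b2) + b3."""
    a1 = [relu(w * x + b) for w, b in zip(W1, b1)]
    a2 = [relu(sum(W2[i][j] * a1[j] for j in range(2)) + b2[i]) for i in range(2)]
    return sum(w * a for w, a in zip(W3, a2)) + b3


def local_linear(x0):
    """Return (W, b) with W = dh/dx at x0 and W * x0 + b = h(x0)."""
    # Layer 1: pre-activations and ReLU gates (1.0 = active, 0.0 = inactive).
    z1 = [w * x0 + b for w, b in zip(W1, b1)]
    g1 = [1.0 if z > 0 else 0.0 for z in z1]        # ReLU'(0) taken as 0
    a1 = [g * z for g, z in zip(g1, z1)]            # same as relu(z1)
    # Layer 2: pre-activations and gates.
    z2 = [sum(W2[i][j] * a1[j] for j in range(2)) + b2[i] for i in range(2)]
    g2 = [1.0 if z > 0 else 0.0 for z in z2]
    # Chain rule with the activation pattern frozen: W = W3 D2 W2 D1 W1.
    d1 = [g * w for g, w in zip(g1, W1)]                                     # D1 W1
    d2 = [g2[i] * sum(W2[i][j] * d1[j] for j in range(2)) for i in range(2)]  # D2 W2 D1 W1
    W = sum(w * d for w, d in zip(W3, d2))
    # The intercept makes the line pass through (x0, h(x0)).
    b = h(x0) - W * x0
    return W, b


for x0 in (2.0, -1.0, 1.0):
    print(x0, local_linear(x0))
```

Working with the activation pattern rather than a numerical derivative gives the exact slope of the linear piece, and the intercept follows directly from the second condition $W x_0 + b = h(x_0)$.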
