
Question


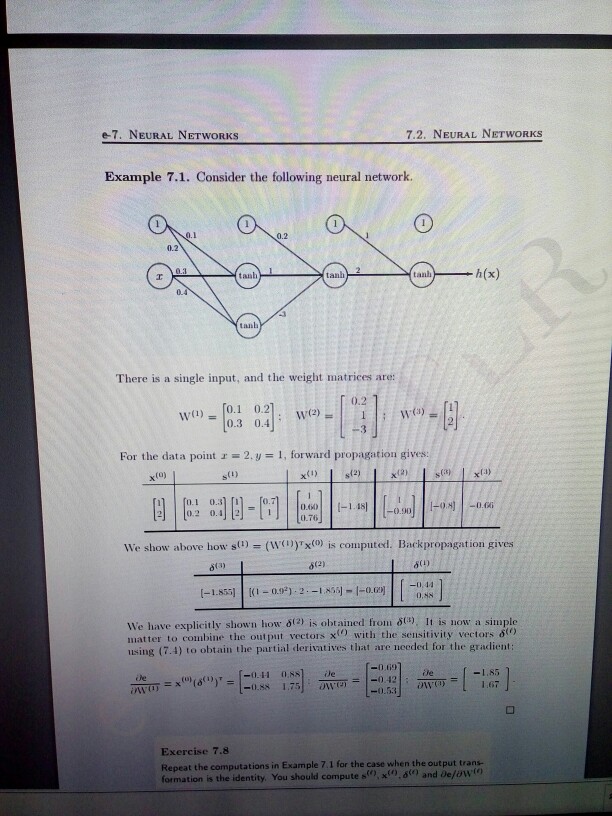

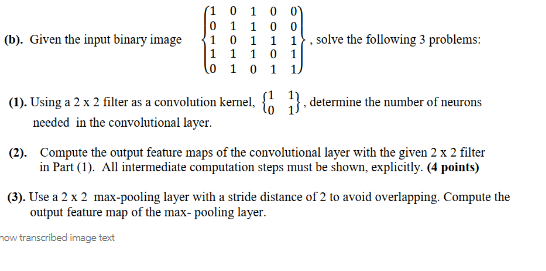

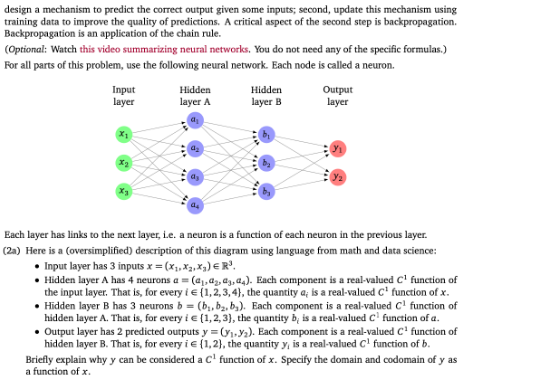

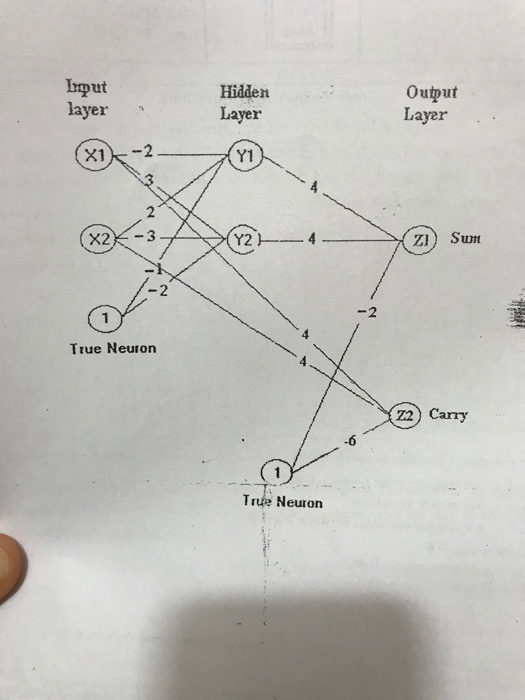

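Consider the following neural network with weights w for the edges and offsets b for the nodes.

Labels transcribed from the figure, in reading order: W = 0.4, W = 0.6, b = -0.3, Y3, W = 2.0, b = 0.0, b = 0.4, Y5, W = -0.7, W = -0.5, W = 1.0, Y4.

Assume that the outputs of nodes Y3, Y4, and Y5 are based on rectified linear functions: each node outputs the maximum of 0 and the weighted sum of its inputs plus its offset, for example

$$Y_5 = \max\bigl(0,\ w_{35} Y_3 + w_{45} Y_4 + b_5\bigr).$$

For the following problems, consider input values X1 = 1 and X2 = 1:

(a) What is the value of Y3 for this input? (2 points)
(b) What is the value of Y4 for this input? (2 points)
(c) What is the value of Y5 for this input? (3 points)

Assuming the desired output is 0.6 and the squared loss function, $\mathrm{loss}(Y_5, 0.6) = (Y_5 - 0.6)^2$, is used:

(d) What is the gradient of this loss for this example with respect to b [subscript illegible in the source]? (6 points)
(e) What is the gradient of this loss for this example with respect to $w_{13}$? (6 points)
(f) What is the gradient of this loss for this example with respect to $w_{24}$? (6 points)

e-7. NEURAL NETWORKS. 7.2. NEURAL NETWORKS

Example 7.1. Consider the following neural network.

[Figure: a network of tanh nodes with a single input x and output h(x).]

There is a single input, and the weight matrices are:

$$W^{(1)} = \begin{bmatrix} 0.1 & 0.2 \\ 0.3 & 0.4 \end{bmatrix}, \qquad W^{(2)} = \begin{bmatrix} 0.2 \\ 1 \\ -3 \end{bmatrix}, \qquad W^{(3)} = \begin{bmatrix} 1 \\ 2 \end{bmatrix}.$$

For the data point x = 2, y = 1, forward propagation gives:

$$x^{(0)} = \begin{bmatrix} 1 \\ 2 \end{bmatrix}, \quad s^{(1)} = \begin{bmatrix} 0.1 & 0.3 \\ 0.2 & 0.4 \end{bmatrix} \begin{bmatrix} 1 \\ 2 \end{bmatrix} = \begin{bmatrix} 0.7 \\ 1 \end{bmatrix}, \quad x^{(1)} = \begin{bmatrix} 1 \\ 0.60 \\ 0.76 \end{bmatrix}, \quad s^{(2)} = [-1.48], \quad x^{(2)} = \begin{bmatrix} 1 \\ -0.90 \end{bmatrix}, \quad s^{(3)} = [-0.80], \quad x^{(3)} = -0.66.$$

We show above how $s^{(1)} = (W^{(1)})^{\mathsf T} x^{(0)}$ is computed. Backpropagation gives

$$\delta^{(3)} = [-1.855], \qquad \delta^{(2)} = \bigl[(1 - 0.9^2) \cdot 2 \cdot (-1.855)\bigr] = [-0.69], \qquad \delta^{(1)} = \begin{bmatrix} -0.44 \\ 0.88 \end{bmatrix}.$$

We have explicitly shown how $\delta^{(2)}$ is obtained from $\delta^{(3)}$. It is now a simple matter to combine the output vectors $x^{(\ell)}$ with the sensitivity vectors $\delta^{(\ell)}$ using (7.4) to obtain the partial derivatives that are needed for the gradient:

$$\frac{\partial e}{\partial W^{(1)}} = x^{(0)} (\delta^{(1)})^{\mathsf T} = \begin{bmatrix} -0.44 & 0.88 \\ -0.88 & 1.75 \end{bmatrix}, \qquad \frac{\partial e}{\partial W^{(2)}} = \begin{bmatrix} -0.69 \\ -0.42 \\ -0.53 \end{bmatrix}, \qquad \frac{\partial e}{\partial W^{(3)}} = \begin{bmatrix} -1.85 \\ 1.67 \end{bmatrix}.$$

Exercise 7.8. Repeat the computations in Example 7.1 for the case when the output transformation is the identity. You should compute $s^{(\ell)}$, $x^{(\ell)}$, $\delta^{(\ell)}$ and $\partial e / \partial W^{(\ell)}$.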
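The numbers in Example 7.1 can be checked with a short script. The following is a minimal sketch in plain Python, not the textbook's code. It assumes that the first row of each weight matrix multiplies the constant bias coordinate 1, and that the error is $e = (h(x) - y)^2$, which is what the quoted value $\delta^{(3)} = -1.855$ implies. The function name `forward_backward` and its `output` argument are hypothetical names chosen for this illustration.

```python
import math

# Weight matrices of Example 7.1 (assumption: row 0 multiplies the bias coordinate 1).
W1 = [[0.1, 0.2],
      [0.3, 0.4]]              # maps x^(0) (2 entries) to s^(1) (2 entries)
W2 = [[0.2], [1.0], [-3.0]]    # maps x^(1) (3 entries) to s^(2) (1 entry)
W3 = [[1.0], [2.0]]            # maps x^(2) (2 entries) to s^(3) (1 entry)


def forward_backward(x, y, weights, output="tanh"):
    """Forward and backward pass for a scalar input x and target y.

    Returns (xs, ss, deltas, grads), where grads[l] is the outer product
    x^(l) (delta^(l+1))^T. Assumes squared error e = (h(x) - y)^2.
    """
    n_layers = len(weights)
    xs = [[1.0, x]]            # x^(0) with the bias coordinate prepended
    ss = []
    for layer, W in enumerate(weights, start=1):
        x_prev = xs[-1]
        # s = W^T x_prev
        s = [sum(W[i][j] * x_prev[i] for i in range(len(x_prev)))
             for j in range(len(W[0]))]
        ss.append(s)
        if layer < n_layers:
            xs.append([1.0] + [math.tanh(v) for v in s])   # hidden layer
        elif output == "tanh":
            xs.append([math.tanh(v) for v in s])           # tanh output
        else:
            xs.append(list(s))                             # identity output

    h = xs[-1][0]
    # Derivative of the output transformation at s^(L).
    theta_prime = 1.0 - h * h if output == "tanh" else 1.0
    deltas = [None] * n_layers
    deltas[-1] = [2.0 * (h - y) * theta_prime]

    # Propagate sensitivities backwards; k is the 1-based index of the layer
    # whose delta is computed, so weights[k] is W^(k+1) and xs[k] is x^(k).
    for k in range(n_layers - 1, 0, -1):
        W_next, x_k, d_next = weights[k], xs[k], deltas[k]
        deltas[k - 1] = [
            (1.0 - x_k[i] ** 2)
            * sum(W_next[i][j] * d_next[j] for j in range(len(d_next)))
            for i in range(1, len(x_k))    # skip the bias coordinate
        ]

    grads = [[[xs[l][i] * deltas[l][j] for j in range(len(deltas[l]))]
              for i in range(len(xs[l]))]
             for l in range(n_layers)]
    return xs, ss, deltas, grads


xs, ss, deltas, grads = forward_backward(2.0, 1.0, [W1, W2, W3])
print("deltas:", deltas)
print("grads :", grads)
```

Calling the same function with `output="identity"` gives the setting of Exercise 7.8; only the output transformation and its derivative change, while the hidden-layer computations stay the same.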
Given the input binary image (shown in the figure; the flattened transcription reads `1 1 0 1 1 1 11 10 0 1 0 1 1`), solve the following 3 problems:

(1) Using a 2 x 2 filter as a convolution kernel, (1 1), determine the number of neurons needed in the convolutional layer.
(2) Compute the output feature maps of the convolutional layer with the given 2 x 2 filter in Part (1). All intermediate computation steps must be shown explicitly. (4 points)
(3) Use a 2 x 2 max-pooling layer with a stride of 2 to avoid overlapping. Compute the output feature map of the max-pooling layer.

... design a mechanism to predict the correct output given some inputs; second, update this mechanism using training data to improve the quality of predictions. A critical aspect of the second step is backpropagation. Backpropagation is an application of the chain rule. (Optional: Watch this video summarizing neural networks. You do not need any of the specific formulas.)

For all parts of this problem, use the following neural network. Each node is called a neuron.

[Figure: network with an input layer, hidden layer A, hidden layer B, and an output layer.]

Each layer has links to the next layer, i.e. a neuron is a function of each neuron in the previous layer.

(2a) Here is an (oversimplified) description of this diagram using language from math and data science:

- Input layer has 3 inputs $x = (x_1, x_2, x_3) \in \mathbb{R}^3$.
- Hidden layer A has 4 neurons $a = (a_1, a_2, a_3, a_4)$. Each component is a real-valued $C^1$ function of the input layer. That is, for every $i \in \{1, 2, 3, 4\}$, the quantity $a_i$ is a real-valued $C^1$ function of x.
- Hidden layer B has 3 neurons $b = (b_1, b_2, b_3)$. Each component is a real-valued $C^1$ function of hidden layer A. That is, for every $i \in \{1, 2, 3\}$, the quantity $b_i$ is a real-valued $C^1$ function of a.
- Output layer has 2 predicted outputs $y = (y_1, y_2)$. Each component is a real-valued $C^1$ function of hidden layer B. That is, for every $i \in \{1, 2\}$, the quantity $y_i$ is a real-valued $C^1$ function of b.

Briefly explain why y can be considered a $C^1$ function of x.
Specify the domain and codomain of y as a function of x.

[Figure: network with an input layer (X1, X2), a hidden layer (Y1, Y2), and an output layer (Z1: Sum, Z2: Carry); nodes are labeled "True Neuron".]
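For the layered network in (2a), the composition of the three layer maps and the chain rule can be illustrated numerically. The sketch below is only an illustration under assumptions that are not in the problem statement: each layer is taken to be `tanh` of a linear map with random weights, and the helper names (`make_layer`, `matmul`, `numeric_jacobian`) are hypothetical. It shows that y takes a point of $\mathbb{R}^3$ to a point of $\mathbb{R}^2$ and that the product of the layer Jacobians (2 x 3 times 3 x 4 times 4 x 3) agrees with a finite-difference estimate of the Jacobian of y with respect to x.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Return (f, jac) for the assumed layer f(v) = tanh(W v) with random W."""
    W = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]

    def f(v):
        return [math.tanh(sum(w * vi for w, vi in zip(row, v))) for row in W]

    def jac(v):
        # d f_i / d v_j = (1 - f_i^2) * W[i][j]
        out = f(v)
        return [[(1.0 - o * o) * w for w in row] for o, row in zip(out, W)]

    return f, jac


def matmul(A, B):
    """Plain matrix product of two lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


rng = random.Random(0)
a_of_x, Da = make_layer(3, 4, rng)   # hidden layer A: R^3 -> R^4
b_of_a, Db = make_layer(4, 3, rng)   # hidden layer B: R^4 -> R^3
y_of_b, Dy = make_layer(3, 2, rng)   # output layer:   R^3 -> R^2


def y_of_x(x):
    """y as a function of x: the composition of the three layer maps."""
    return y_of_b(b_of_a(a_of_x(x)))


def jacobian_y_of_x(x):
    """Chain rule: Dy(b) * Db(a) * Da(x), a 2 x 3 matrix."""
    a = a_of_x(x)
    b = b_of_a(a)
    return matmul(matmul(Dy(b), Db(a)), Da(x))


def numeric_jacobian(f, x, h=1e-6):
    """Central finite-difference estimate of the Jacobian of f at x."""
    cols = []
    for j in range(len(x)):
        xp, xm = list(x), list(x)
        xp[j] += h
        xm[j] -= h
        cols.append([(p - m) / (2.0 * h) for p, m in zip(f(xp), f(xm))])
    # cols[j][i] holds d f_i / d x_j; transpose to rows indexed by output.
    return [[cols[j][i] for j in range(len(x))] for i in range(len(cols[0]))]


x0 = [0.5, -1.0, 2.0]
print("y(x0) has", len(y_of_x(x0)), "components")
print("chain-rule Jacobian:", jacobian_y_of_x(x0))
print("finite differences :", numeric_jacobian(y_of_x, x0))
```

The choice of `tanh` only serves to have a concrete continuously differentiable layer; any other $C^1$ layer functions would compose in the same way.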
