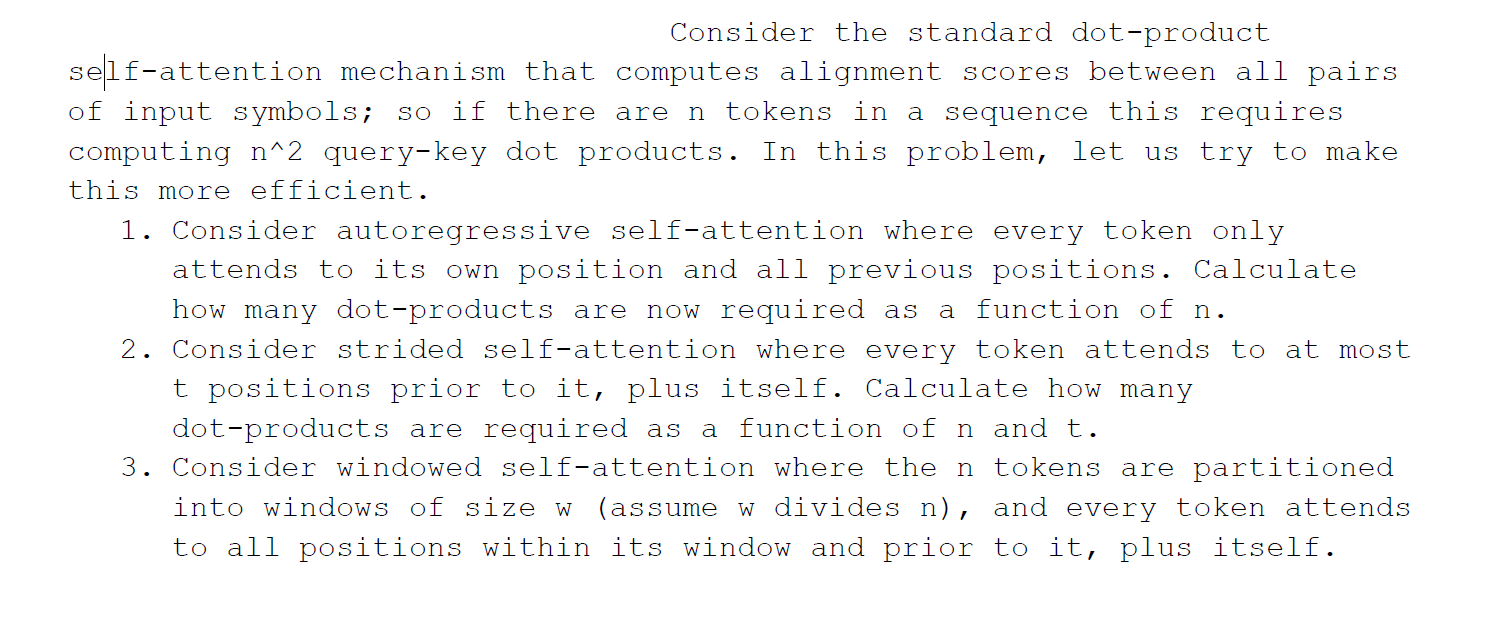

Consider the standard dot-product self-attention mechanism, which computes alignment scores between all pairs of input symbols: if there are n tokens in a sequence, this requires computing n^2 query-key dot products. In this problem, let us try to make this more efficient.

1. Consider autoregressive self-attention, where every token attends only to its own position and all previous positions. Calculate how many dot products are now required as a function of n.
2. Consider strided self-attention, where every token attends to at most t positions prior to it, plus itself. Calculate how many dot products are required as a function of n and t.
3. Consider windowed self-attention, where the n tokens are partitioned into windows of size w (assume w divides n), and every token attends to all positions within its window and prior to it, plus itself.
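A worked count for all three parts, offered as a sketch under stated assumptions: positions are indexed i = 1, ..., n; the strided formula assumes t ≤ n - 1 (for larger t every token already sees its whole prefix, which gives n(n+1)/2); and part 3 is read as asking for the count as a function of n and w, with each token attending causally inside its own window.

```latex
\begin{aligned}
\text{(1) autoregressive:}\quad & \sum_{i=1}^{n} i = \frac{n(n+1)}{2} \\
\text{(2) strided, } t \le n-1:\quad & \sum_{i=1}^{n} \bigl(1 + \min(i-1,\,t)\bigr)
  = n + \frac{t(t+1)}{2} + t\,(n-1-t) = \frac{(t+1)(2n-t)}{2} \\
\text{(3) windowed:}\quad & \frac{n}{w}\cdot\frac{w(w+1)}{2} = \frac{n(w+1)}{2}
\end{aligned}
```

For n = 10, part 1 needs 55 dot products instead of 100. For fixed t, part 2 equals (t+1)n - t(t+1)/2, which grows linearly in n; likewise part 3 grows linearly in n for fixed w.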

Step by Step Solution

Step: 1

Answer 1: Let n be the number of tokens in the sequence. Then the number of dot products required is n ...
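A minimal brute-force check for part 1, assuming 0-indexed positions and a causal mask in which query i may use key j only when j ≤ i. The function names are hypothetical helpers, not from any library. The sketch counts the allowed (query, key) pairs directly and compares them with n(n+1)/2.

```python
def count_autoregressive(n: int) -> int:
    """Brute-force count of query-key pairs under a causal mask (key j visible to query i iff j <= i)."""
    return sum(1 for i in range(n) for j in range(n) if j <= i)


def closed_form_autoregressive(n: int) -> int:
    # Query i (0-indexed) sees i + 1 keys, so the total is 1 + 2 + ... + n.
    return n * (n + 1) // 2


for n in (1, 2, 5, 10):
    assert count_autoregressive(n) == closed_form_autoregressive(n)
    print(f"n={n}: {count_autoregressive(n)} dot products (full attention: {n * n})")
```

Counting pairs one by one costs O(n^2) itself, so this is only meant for checking the formula on small n.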

Step: 2
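A similar sketch for part 2, assuming 0-indexed positions and reading "at most t positions prior" as: query i sees keys j with i - t ≤ j ≤ i, so the earliest tokens see fewer than t + 1 keys. The helper names are hypothetical. The closed form clamps t to n - 1, beyond which the limit no longer binds.

```python
def count_strided(n: int, t: int) -> int:
    """Brute-force count when query i sees itself and at most t earlier keys (i - t <= j <= i)."""
    return sum(1 for i in range(n) for j in range(n) if i - t <= j <= i)


def closed_form_strided(n: int, t: int) -> int:
    # For t >= n - 1 the limit never binds, so clamp t; the result is then n(n+1)/2.
    t = min(t, n - 1)
    # (t + 1)(2n - t) is always even, so the integer division is exact.
    return (t + 1) * (2 * n - t) // 2


for n in range(1, 9):
    for t in range(0, 10):
        assert count_strided(n, t) == closed_form_strided(n, t)

print(count_strided(4, 2), closed_form_strided(4, 2))  # 9 9
```

With t = 0 each token attends only to itself, giving n dot products; with t = n - 1 the count matches part 1.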

Step: 3
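A sketch for part 3, assuming 0-indexed positions, non-overlapping windows [0, w), [w, 2w), and so on, and causal attention inside each window (key j is visible to query i when both lie in the same window and j ≤ i). The function names are hypothetical.

```python
def count_windowed(n: int, w: int) -> int:
    """Brute-force count for non-overlapping windows of size w with causal attention inside each window."""
    if w <= 0 or n % w != 0:
        raise ValueError("this sketch assumes w > 0 and that w divides n")
    # Key j is visible to query i when both fall in the same window and j <= i.
    return sum(1 for i in range(n) for j in range(n) if i // w == j // w and j <= i)


def closed_form_windowed(n: int, w: int) -> int:
    # n / w windows, each costing 1 + 2 + ... + w = w(w + 1) / 2 dot products.
    return (n // w) * w * (w + 1) // 2


for w in range(1, 6):
    for k in range(1, 6):
        n = k * w
        assert count_windowed(n, w) == closed_form_windowed(n, w) == n * (w + 1) // 2

print(count_windowed(12, 3))  # 24
```

Under this reading each window costs w(w+1)/2 regardless of its position in the sequence, so the total n(w+1)/2 is linear in n for fixed w.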
