
Could somebody explain what I should do in each question of this exercise? In this question we will deal with a distributed optimization problem.

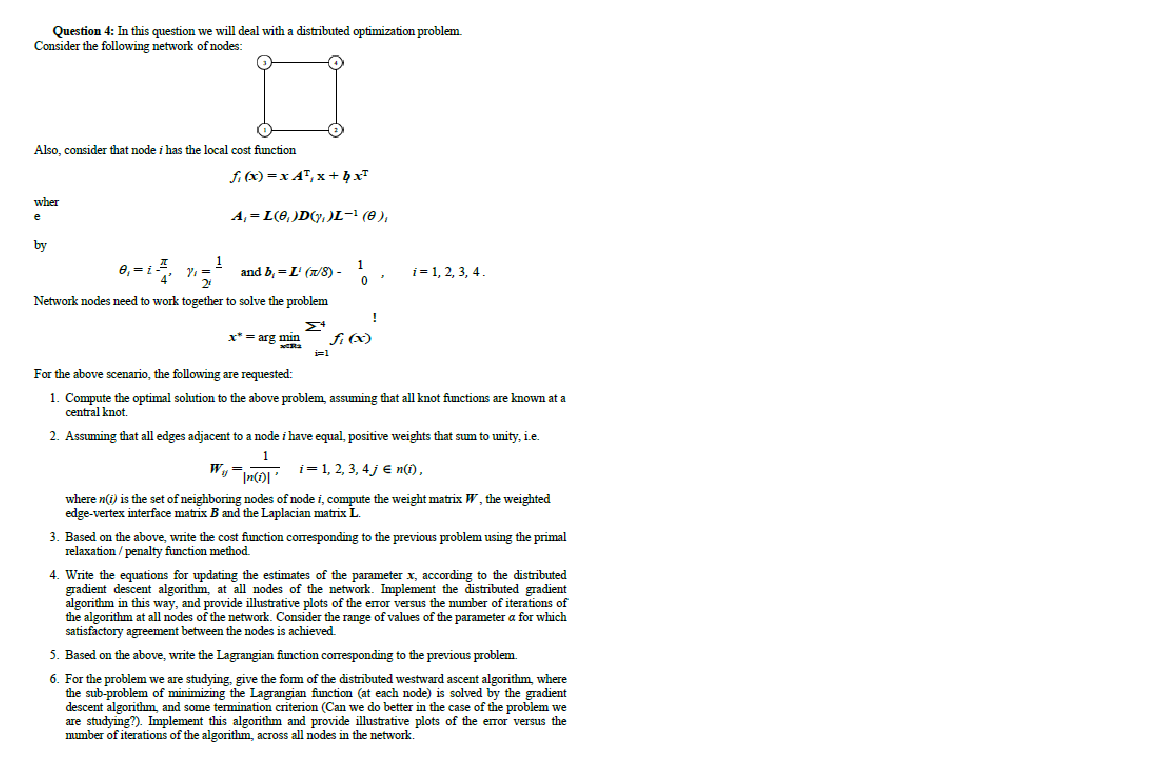

Consider the network of nodes shown in the attached image.

Also, consider that node $i$ has the local cost function $f_i(x)$, where …

The network nodes need to work together to solve the problem $\min_{x} \sum_{i} f_i(x)$.

For the above scenario, the following are requested:

Compute the optimal solution to the above problem, assuming that all node cost functions are known at a central node.
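
The original cost functions are not recoverable here, so the sketch below assumes hypothetical quadratic local costs $f_i(x) = \tfrac{1}{2} a_i (x - b_i)^2$ with $a_i > 0$ (the arrays `a` and `b` are made up). Under that assumption the central node sets $\sum_i a_i (x - b_i) = 0$, which gives the weighted mean $x^\star = \sum_i a_i b_i / \sum_i a_i$:

```python
import numpy as np

# Hypothetical local costs f_i(x) = 0.5 * a_i * (x - b_i)**2 with a_i > 0;
# the values below are made up because the original f_i are not available.
a = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
b = np.array([1.0, -1.0, 2.0, 0.0, 3.0])


def central_optimum(a, b):
    """Minimizer of sum_i 0.5*a_i*(x - b_i)^2, computed with all data at one node.

    Setting the derivative sum_i a_i*(x - b_i) to zero gives a weighted mean of b.
    """
    return float(np.dot(a, b) / np.sum(a))


x_star = central_optimum(a, b)
print(x_star)
```

With these made-up numbers, $x^\star = 20/15 = 4/3$; with the actual $f_i$ from the exercise, the same first-order condition $\sum_i f_i'(x) = 0$ applies.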

Assuming that all edges adjacent to node $i$ have equal, positive weights that sum to unity, i.e.,

$$w_{ij} = \frac{1}{|\mathcal{N}_i|}, \quad \forall\, i \text{ and } j \in \mathcal{N}_i,$$

where $\mathcal{N}_i$ is the set of neighboring nodes of node $i$, compute the weight matrix $W$, the weighted edge-vertex incidence matrix $B$, and the Laplacian matrix $L$.
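
A sketch of how the three matrices can be assembled, assuming a hypothetical 5-node ring in place of the graph in the image. On a regular graph like this one, $w_{ij} = 1/|\mathcal{N}_i|$ is symmetric, and defining row $e$ of $B$ for edge $(i, j)$ as $+\sqrt{w_{ij}}$ in column $i$ and $-\sqrt{w_{ij}}$ in column $j$ gives $L = B^\top B = I - W$. This sign and scaling convention for $B$ is an assumption; if the nodes in the image have different degrees, $W$ is no longer symmetric and this identity does not hold as written.

```python
import numpy as np

# Hypothetical topology: a 5-node ring (the actual graph is only in the image).
N = 5
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]


def neighbor_sets(n, edges):
    """Return N_i, the set of neighbors of each node, for an undirected graph."""
    nbrs = [set() for _ in range(n)]
    for i, j in edges:
        nbrs[i].add(j)
        nbrs[j].add(i)
    return nbrs


def weight_matrix(n, edges):
    """w_ij = 1/|N_i| for j in N_i and 0 otherwise, so every row sums to 1."""
    W = np.zeros((n, n))
    for i, nbrs in enumerate(neighbor_sets(n, edges)):
        for j in nbrs:
            W[i, j] = 1.0 / len(nbrs)
    return W


def incidence_matrix(W, edges):
    """Weighted edge-vertex incidence matrix, one row per edge (i, j).

    Assumed convention: +sqrt(w_ij) in column i and -sqrt(w_ij) in column j.
    """
    B = np.zeros((len(edges), W.shape[0]))
    for e, (i, j) in enumerate(edges):
        s = np.sqrt(W[i, j])
        B[e, i] = s
        B[e, j] = -s
    return B


W = weight_matrix(N, edges)
B = incidence_matrix(W, edges)
L = B.T @ B  # weighted Laplacian; equals I - W here because W is symmetric

print(W)
print(L)
```

Every row of `L` sums to zero, so the all-ones vector (perfect agreement between nodes) lies in its null space.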

Based on the above, write the cost function corresponding to the previous problem using the primal relaxation penalty function method.
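
One common way to write this penalized cost is sketched below. It assumes that node $i$ keeps a local copy $x_i$ of $x$, stacked as $\mathbf{x} = [x_1, \dots, x_N]^\top$, that $\alpha > 0$ is the penalty parameter, and that $L = B^\top B = I - W$ (which holds when $W$ is symmetric, e.g. on a regular graph). The consensus constraint $B\mathbf{x} = \mathbf{0}$, equivalent to $x_i = x_j$ on every edge of a connected graph, is relaxed into a quadratic penalty, and one gradient step of size $\alpha$ on the result is itself a distributed update:

$$
F_\alpha(\mathbf{x}) = \sum_{i=1}^{N} f_i(x_i) + \frac{1}{2\alpha}\,\lVert B\mathbf{x}\rVert_2^2
= \sum_{i=1}^{N} f_i(x_i) + \frac{1}{2\alpha}\,\mathbf{x}^\top L\,\mathbf{x}
$$

$$
\mathbf{x}^{k+1} = \mathbf{x}^k - \alpha \nabla F_\alpha(\mathbf{x}^k)
= \mathbf{x}^k - \alpha \nabla f(\mathbf{x}^k) - (I - W)\,\mathbf{x}^k
= W\mathbf{x}^k - \alpha \nabla f(\mathbf{x}^k)
$$

Here $\nabla f(\mathbf{x}) = [f_1'(x_1), \dots, f_N'(x_N)]^\top$. Because the penalty weight $1/(2\alpha)$ is finite, the minimizer of $F_\alpha$ only approximately satisfies $x_1 = \dots = x_N$, which is why the agreement between the nodes depends on $\alpha$.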

Write the equations for updating the estimates of the parameter $x$ according to the distributed gradient descent algorithm at all nodes of the network. Implement this distributed gradient descent algorithm and provide illustrative plots of the error versus the number of iterations at all nodes of the network. Investigate the range of values of the parameter $\alpha$ for which satisfactory agreement between the nodes is achieved.
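
A sketch of the distributed gradient descent (DGD) update, in which each node mixes its neighbors' estimates and takes a local gradient step: $x_i^{k+1} = \sum_{j \in \mathcal{N}_i} w_{ij}\, x_j^k - \alpha\, f_i'(x_i^k)$. It relies on hypothetical assumptions (a 5-node ring, quadratic costs $f_i(x) = \tfrac{1}{2} a_i (x - b_i)^2$ with made-up `a` and `b`, and a start at $x_i^0 = 0$); the real graph and costs from the exercise would replace them. The reported error is $|x_i^k - x^\star|$ against the centralized optimum.

```python
import numpy as np

# Hypothetical setup (not from the original exercise): 5-node ring and
# quadratic local costs f_i(x) = 0.5 * a_i * (x - b_i)**2.
N = 5
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
a = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
b = np.array([1.0, -1.0, 2.0, 0.0, 3.0])
x_star = np.dot(a, b) / a.sum()  # centralized optimum, used only to measure error


def weight_matrix(n, edges):
    """w_ij = 1/|N_i| for each neighbor j of node i (zero diagonal)."""
    deg = np.zeros(n)
    for i, j in edges:
        deg[i] += 1
        deg[j] += 1
    W = np.zeros((n, n))
    for i, j in edges:
        W[i, j] = 1.0 / deg[i]
        W[j, i] = 1.0 / deg[j]
    return W


def local_gradients(x):
    """Entry i is f_i'(x_i); node i needs only its own estimate."""
    return a * (x - b)


def dgd(W, alpha, iters):
    """Run x^{k+1} = W x^k - alpha * grad f(x^k) from x^0 = 0.

    Returns an (iters + 1, N) array holding |x_i^k - x*| in row k, column i.
    """
    x = np.zeros(W.shape[0])
    errors = [np.abs(x - x_star)]
    for _ in range(iters):
        x = W @ x - alpha * local_gradients(x)
        errors.append(np.abs(x - x_star))
    return np.array(errors)


W = weight_matrix(N, edges)
for alpha in (0.001, 0.01, 0.03):
    err = dgd(W, alpha, iters=5000)
    # For quadratic costs, the spectral radius of W - alpha*diag(a) decides stability.
    rho = np.max(np.abs(np.linalg.eigvalsh(W - alpha * np.diag(a))))
    print(f"alpha={alpha}: rho={rho:.4f}, final max error={err[-1].max():.2e}")
```

For the requested plots, each column `err[:, i]` is the error of node `i` versus the iteration index and can be passed to `matplotlib.pyplot.semilogy`. Under the quadratic assumption the iteration is linear, $\mathbf{x}^{k+1} = (W - \alpha A)\mathbf{x}^k + \alpha A\mathbf{b}$ with $A = \operatorname{diag}(a_i)$, so it is stable only while `rho` stays below 1. Within that range, the steady-state error of constant-step DGD is $O(\alpha)$, so a smaller $\alpha$ gives closer agreement with $x^\star$ at the price of slower convergence.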

Based on the above, write the Lagrangian function corresponding to the previous problem.
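
A sketch, assuming the same stacked local copies $\mathbf{x} = [x_1, \dots, x_N]^\top$ and writing the consensus constraint as $B\mathbf{x} = \mathbf{0}$ with one multiplier $\lambda_e$ per edge $e$ of the edge set $\mathcal{E}$ (the constraint could equally be written with $L$, which changes the multipliers to one per node):

$$
\mathcal{L}(\mathbf{x}, \boldsymbol{\lambda}) = \sum_{i=1}^{N} f_i(x_i) + \boldsymbol{\lambda}^\top B\,\mathbf{x}
= \sum_{i=1}^{N} \Big( f_i(x_i) + x_i\,[B^\top \boldsymbol{\lambda}]_i \Big),
\qquad \boldsymbol{\lambda} \in \mathbb{R}^{|\mathcal{E}|}.
$$

Because the second form is a sum of per-node terms, minimizing $\mathcal{L}$ over $\mathbf{x}$ for a fixed $\boldsymbol{\lambda}$ splits into $N$ independent problems, and node $i$ only needs the multipliers of the edges incident to it to form $[B^\top \boldsymbol{\lambda}]_i$.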

For the problem we are studying, give the form of the distributed dual ascent algorithm, where the subproblem of minimizing the Lagrangian function at each node is solved by the gradient descent algorithm with some termination criterion. Can we do better in the case of the problem we are studying? Implement this algorithm and provide illustrative plots of the error versus the number of iterations of the algorithm across all nodes in the network.
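
A sketch of distributed dual ascent, again under hypothetical assumptions (5-node ring, quadratic $f_i(x) = \tfrac{1}{2} a_i (x - b_i)^2$ with made-up `a` and `b`, one multiplier per edge, dual step $\beta = 0.5$). Each iteration alternates a local primal step and an edge-wise dual step:

$$x_i^{k+1} = \arg\min_{x_i} \Big( f_i(x_i) + x_i\,[B^\top \boldsymbol{\lambda}^k]_i \Big), \qquad \boldsymbol{\lambda}^{k+1} = \boldsymbol{\lambda}^k + \beta\, B\,\mathbf{x}^{k+1}.$$

In `local_argmin_gd` the primal step is approximated by gradient descent (step 0.1, stopping once every local gradient is below `1e-8`; these values are arbitrary choices). On the "can we do better?" question: if the costs really are quadratic, as assumed here, the local subproblem has the closed-form solution $x_i = b_i - [B^\top \boldsymbol{\lambda}^k]_i / a_i$, so the inner loop can be dropped entirely, which is what `local_argmin_exact` does.

```python
import numpy as np

# Hypothetical setup (not from the original exercise): 5-node ring and
# quadratic local costs f_i(x) = 0.5 * a_i * (x - b_i)**2.
N = 5
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
a = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
b = np.array([1.0, -1.0, 2.0, 0.0, 3.0])
x_star = np.dot(a, b) / a.sum()

# Weighted incidence matrix with w_ij = 1/|N_i| (symmetric on this regular graph).
deg = np.zeros(N)
for i, j in edges:
    deg[i] += 1
    deg[j] += 1
B = np.zeros((len(edges), N))
for e, (i, j) in enumerate(edges):
    B[e, i] = np.sqrt(1.0 / deg[i])
    B[e, j] = -np.sqrt(1.0 / deg[i])


def local_argmin_gd(mu, x0, step=0.1, tol=1e-8, max_iter=1000):
    """Node i runs gradient descent on f_i(x_i) + mu_i * x_i, warm-started at x0.

    Vectorized only to simulate all nodes at once; node i uses only mu_i.
    Termination criterion: every local gradient satisfies |f_i'(x_i) + mu_i| < tol.
    """
    x = x0.copy()
    for _ in range(max_iter):
        g = a * (x - b) + mu
        if np.max(np.abs(g)) < tol:
            break
        x -= step * g
    return x


def local_argmin_exact(mu, x0=None):
    """Quadratic f_i only: solve a_i * (x_i - b_i) + mu_i = 0 in closed form."""
    return b - mu / a


def dual_ascent(local_solver, beta=0.5, iters=300):
    """Dual ascent with one multiplier per edge; returns |x_i^k - x*| per iteration."""
    lam = np.zeros(len(edges))
    x = np.zeros(N)
    errors = [np.abs(x - x_star)]
    for _ in range(iters):
        mu = B.T @ lam              # node i combines the multipliers of its incident edges
        x = local_solver(mu, x)     # primal step: minimize the Lagrangian at every node
        lam = lam + beta * (B @ x)  # dual step: move along the constraint residual B x
        errors.append(np.abs(x - x_star))
    return np.array(errors)


err_gd = dual_ascent(local_argmin_gd)
err_exact = dual_ascent(local_argmin_exact)
print(f"inner GD: {err_gd[-1].max():.2e}, closed form: {err_exact[-1].max():.2e}")
```

Both variants drive every node to $x^\star$ here; the closed-form version simply skips the inner loop. As with DGD, the columns of `err_gd` or `err_exact` can be plotted against the iteration index (for example with `matplotlib.pyplot.semilogy`) to produce the requested per-node error curves.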

Please address each question individually.