Answered step by step

Verified Expert Solution

Question

1 Approved Answer

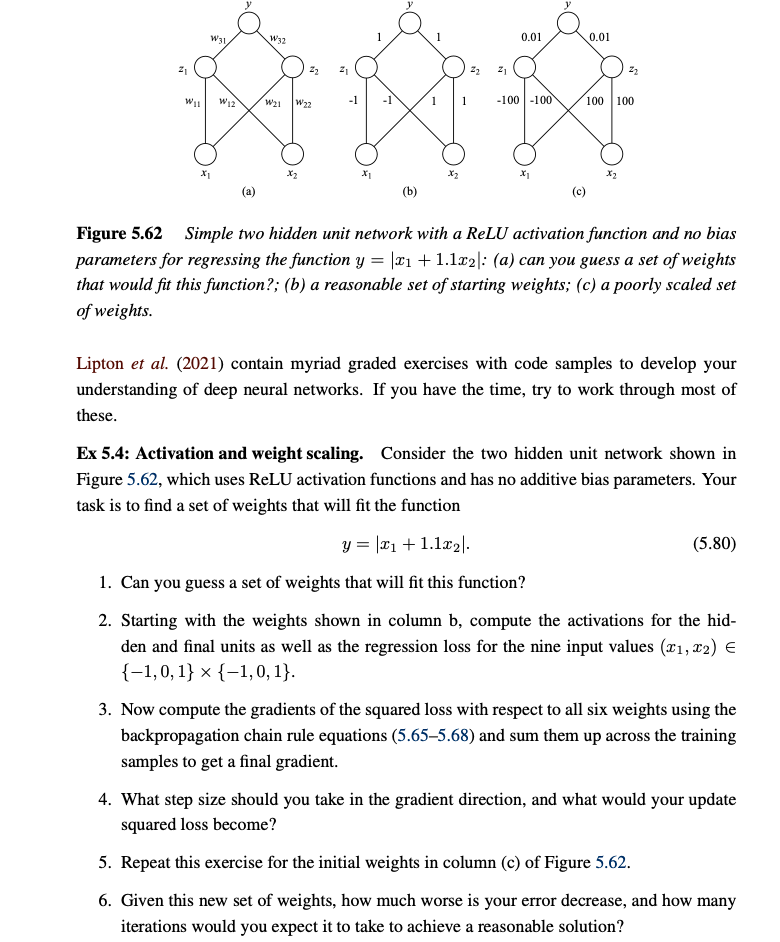

Ex 5 . 4 : Activation and weight scaling. Consider the two hidden unit network shown in Figure 5 . 6 2 , which uses

Ex : Activation and weight scaling. Consider the two hidden unit network shown in

Figure which uses ReLU activation functions and has no additive bias parameters. Your

task is to find a set of weights that will fit the function

Can you guess a set of weights that will fit this function?

Starting with the weights shown in column compute the activations for the hid

den and final units as well as the regression loss for the nine input values

Now compute the gradients of the squared loss with respect to all six weights using the

backpropagation chain rule equations and sum them up across the training

samples to get a final gradient.

What step size should you take in the gradient direction, and what would your update

squared loss become?

Repeat this exercise for the initial weights in column c of Figure

Given this new set of weights, how much worse is your error decrease, and how many

iterations would you expect it to take to achieve a reasonable solution?

Figure Function optimization: a the contour plot of with

the function being minimized at ; b ideal gradient descent optimization that quicklyFigure Simple two hidden unit network with a ReLU activation function and no bias

parameters for regressing the function : a can you guess a set of weights

that would fit this function?; b a reasonable set of starting weights; c a poorly scaled set

of weights.

Lipton et al contain myriad graded exercises with code samples to develop your

understanding of deep neural networks. If you have the time, try to work through most of

these.

Ex : Activation and weight scaling. Consider the two hidden unit network shown in

Figure which uses ReLU activation functions and has no additive bias parameters. Your

task is to find a set of weights that will fit the function

Can you guess a set of weights that will fit this function?

Starting with the weights shown in column compute the activations for the hid

den and final units as well as the regression loss for the nine input values

Now compute the gradients of the squared loss with respect to all six weights using the

backpropagation chain rule equations and sum them up across the training

samples to get a final gradient.

What step size should you take in the gradient direction, and what would your update

squared loss become?

Repeat this exercise for the initial weights in column c of Figure

Given this new set of weights, how much worse is your error decrease, and how many

iterations would you expect it to take to achieve a reasonable solution?

converges towards the minimum at

Would batch normalization help in this case?

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started