Question:

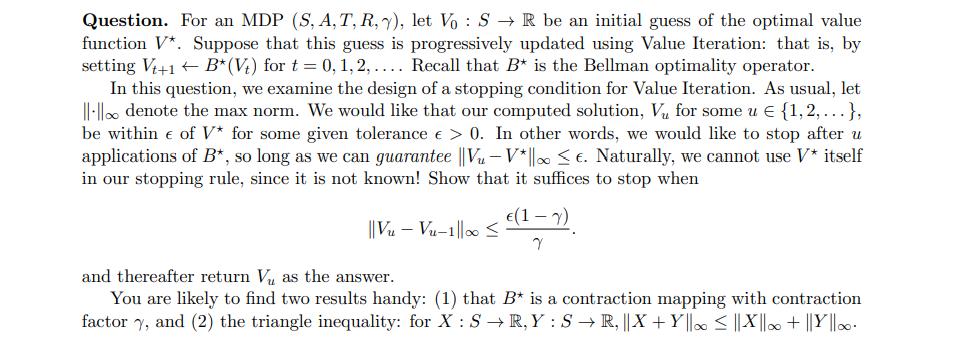

For an MDP $(S, A, T, R, \gamma)$, let $V^0: S \to \mathbb{R}$ be an initial guess of the optimal value function $V^*$. Suppose that this guess is progressively updated using Value Iteration, that is, by setting $V^{t+1} \leftarrow B^*(V^t)$ for $t = 0, 1, 2, \ldots$. Recall that $B^*$ is the Bellman optimality operator.

In this question, we examine the design of a stopping condition for Value Iteration. As usual, let $\|\cdot\|_\infty$ denote the max norm. We would like our computed solution, $V^u$ for some $u \in \{1, 2, \ldots\}$, to be within $\epsilon$ of $V^*$ for some given tolerance $\epsilon > 0$. In other words, we would like to stop after $u$ applications of $B^*$, so long as we can guarantee $\|V^u - V^*\|_\infty \le \epsilon$. Naturally, we cannot use $V^*$ itself in our stopping rule, since it is not known!

Show that it suffices to stop when

$$\|V^u - V^{u-1}\|_\infty \le \frac{\epsilon(1-\gamma)}{\gamma}$$

and thereafter return $V^u$ as the answer. You are likely to find two results handy: (1) $B^*$ is a contraction mapping with contraction factor $\gamma$, and (2) the triangle inequality: for $X: S \to \mathbb{R}$ and $Y: S \to \mathbb{R}$, $\|X + Y\|_\infty \le \|X\|_\infty + \|Y\|_\infty$.
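
A short derivation of the stopping rule might run as follows. It uses the two hinted results together with the standard fixed-point property $V^* = B^*(V^*)$ (the Bellman optimality equation), and it assumes $0 < \gamma < 1$ so that the threshold above is well-defined:

$$
\begin{aligned}
\|V^u - V^*\|_\infty
  &= \|B^*(V^{u-1}) - B^*(V^*)\|_\infty && \text{since } V^u = B^*(V^{u-1}) \text{ and } V^* = B^*(V^*) \\
  &\le \gamma\,\|V^{u-1} - V^*\|_\infty && \text{contraction with factor } \gamma \\
  &= \gamma\,\|(V^{u-1} - V^u) + (V^u - V^*)\|_\infty \\
  &\le \gamma\,\|V^u - V^{u-1}\|_\infty + \gamma\,\|V^u - V^*\|_\infty && \text{triangle inequality}
\end{aligned}
$$

Moving $\gamma\|V^u - V^*\|_\infty$ to the left-hand side and dividing by $1 - \gamma > 0$, then applying the stopping condition, gives

$$
\|V^u - V^*\|_\infty \le \frac{\gamma}{1-\gamma}\,\|V^u - V^{u-1}\|_\infty \le \frac{\gamma}{1-\gamma} \cdot \frac{\epsilon(1-\gamma)}{\gamma} = \epsilon,
$$

so returning $V^u$ as soon as the condition holds guarantees the required tolerance.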

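
As a sketch (not part of the original question), the stopping rule can be dropped into a tabular Value Iteration loop. The MDP encoding, with `T[s][a]` a list of `(next_state, probability)` pairs and `R[s][a]` an expected immediate reward, and the function names `bellman_optimality` and `value_iteration` are illustrative assumptions. The loop applies $B^*$ until the max-norm change between successive iterates is at most $\epsilon(1-\gamma)/\gamma$, assuming $0 < \gamma < 1$.

```python
from typing import List, Optional, Tuple

# Hypothetical tabular MDP encoding (an assumption for illustration):
# T[s][a] is a list of (next_state, probability) pairs,
# R[s][a] is the expected immediate reward for taking action a in state s.
Transitions = List[List[List[Tuple[int, float]]]]
Rewards = List[List[float]]


def bellman_optimality(V: List[float], T: Transitions, R: Rewards,
                       gamma: float) -> List[float]:
    """Apply B* once: (B* V)(s) = max_a [R(s,a) + gamma * sum_s' T(s,a,s') V(s')]."""
    return [
        max(R[s][a] + gamma * sum(p * V[s2] for s2, p in T[s][a])
            for a in range(len(T[s])))
        for s in range(len(T))
    ]


def value_iteration(T: Transitions, R: Rewards, gamma: float, eps: float,
                    V0: Optional[List[float]] = None) -> Tuple[List[float], int]:
    """Iterate V^{t+1} = B*(V^t) until ||V^u - V^{u-1}||_inf <= eps*(1-gamma)/gamma.

    Returns (V^u, u). Assumes 0 < gamma < 1 and eps > 0.
    """
    if not (0.0 < gamma < 1.0) or eps <= 0.0:
        raise ValueError("need 0 < gamma < 1 and eps > 0")
    threshold = eps * (1.0 - gamma) / gamma
    V_prev = list(V0) if V0 is not None else [0.0] * len(T)  # V^0
    u = 0
    while True:
        V = bellman_optimality(V_prev, T, R, gamma)  # V^u = B*(V^{u-1})
        u += 1
        # Max-norm distance between successive iterates.
        if max(abs(x - y) for x, y in zip(V, V_prev)) <= threshold:
            return V, u  # guaranteed ||V^u - V*||_inf <= eps
        V_prev = V


if __name__ == "__main__":
    # Toy 2-state MDP: in state 0, action 0 stays (reward 1) and action 1
    # moves to state 1 (reward 0); state 1 has one self-loop action (reward 2).
    T = [[[(0, 1.0)], [(1, 1.0)]], [[(1, 1.0)]]]
    R = [[1.0, 0.0], [2.0]]
    V, u = value_iteration(T, R, gamma=0.9, eps=0.01)
    print(u, V)
```

For this toy MDP with $\gamma = 0.9$, $V^*(1) = 2/(1-0.9) = 20$ and $V^*(0) = \max(1 + 0.9\,V^*(0),\ 0.9 \cdot 20) = 18$, so the returned $V^u$ should lie within $0.01$ of $(18, 20)$ in the max norm, as the bound predicts.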