Question


For your convenience, I pasted the code from the first chunk here:

===============================================

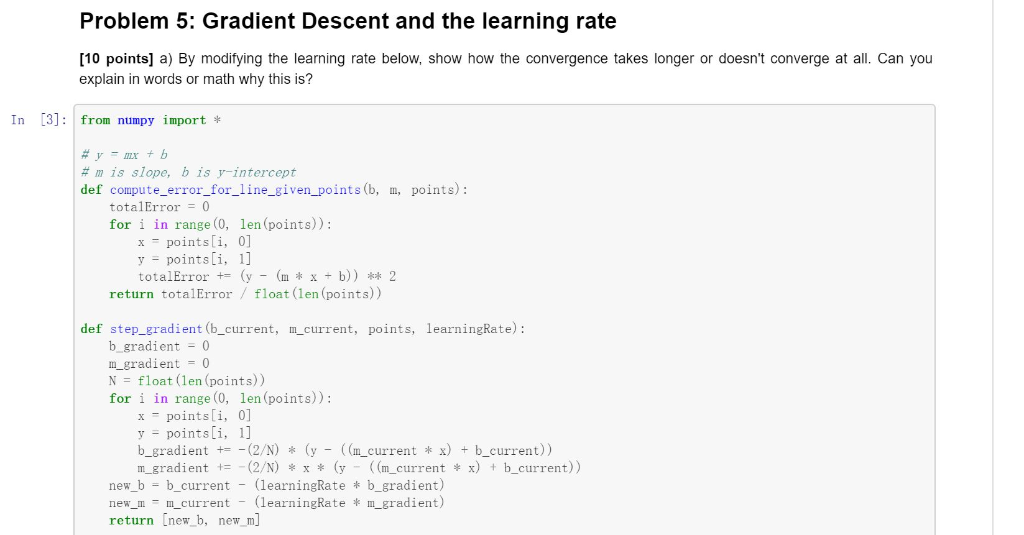

    from numpy import *
    import matplotlib.pyplot as plt

    # y = mx + b
    # m is slope, b is y-intercept
    def compute_error_for_line_given_points(b, m, points):
        totalError = 0
        for i in range(0, len(points)):
            x = points[i, 0]
            y = points[i, 1]
            totalError += (y - (m * x + b)) ** 2
        return totalError / float(len(points))

    def step_gradient(b_current, m_current, points, learningRate):
        b_gradient = 0
        m_gradient = 0
        N = float(len(points))
        # Accumulate the partial derivatives of the mean squared error w.r.t. b and m
        for i in range(0, len(points)):
            x = points[i, 0]
            y = points[i, 1]
            b_gradient += -(2/N) * (y - ((m_current * x) + b_current))
            m_gradient += -(2/N) * x * (y - ((m_current * x) + b_current))
        # Take one step against the gradient, scaled by the learning rate
        new_b = b_current - (learningRate * b_gradient)
        new_m = m_current - (learningRate * m_gradient)
        return [new_b, new_m]

    def gradient_descent_runner(points, starting_b, starting_m, learning_rate, num_iterations):
        b = starting_b
        m = starting_m
        for i in range(num_iterations):
            b, m = step_gradient(b, m, array(points), learning_rate)
        return [b, m]
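The two accumulators in `step_gradient` are the partial derivatives of the mean squared error returned by `compute_error_for_line_given_points`. Writing that error as $E(b, m)$ over the $N$ points $(x_i, y_i)$, with learning rate $\eta$ (this notation is mine, not the notebook's):

$$
E(b,m) = \frac{1}{N}\sum_{i=1}^{N}\bigl(y_i - (m x_i + b)\bigr)^2
$$

$$
\frac{\partial E}{\partial b} = -\frac{2}{N}\sum_{i=1}^{N}\bigl(y_i - (m x_i + b)\bigr),\qquad
\frac{\partial E}{\partial m} = -\frac{2}{N}\sum_{i=1}^{N} x_i\bigl(y_i - (m x_i + b)\bigr)
$$

$$
b \leftarrow b - \eta\,\frac{\partial E}{\partial b},\qquad m \leftarrow m - \eta\,\frac{\partial E}{\partial m}
$$

Because $E$ is quadratic in $\theta = (b, m)^\top$, its Hessian $H$ is constant, which bears directly on the learning-rate question in Problem 5. A sketch of the standard argument, assuming the data have at least two distinct $x_i$ so that $H$ is positive definite, with $\theta^\ast$ the least-squares minimizer:

$$
H = \frac{2}{N}\sum_{i=1}^{N}\begin{pmatrix}1 & x_i\\ x_i & x_i^2\end{pmatrix},\qquad
\theta_{t+1}-\theta^\ast = (I-\eta H)\,(\theta_t-\theta^\ast)
$$

Each eigen-component of $\theta_t-\theta^\ast$ is multiplied by $1-\eta\lambda$ per step, so the iteration converges if and only if $|1-\eta\lambda|<1$ for every eigenvalue $\lambda$ of $H$:

$$
0 < \eta < \frac{2}{\lambda_{\max}(H)}
$$

A rate far below this bound leaves $1-\eta\lambda_{\min}$ close to 1, so the error shrinks slowly; a rate above it makes the component along the top eigenvector grow by $|1-\eta\lambda_{\max}|>1$ every step, so $b$ and $m$ overshoot with growing amplitude and the error blows up. Since $\lambda_{\max} \ge 2\cdot\frac{1}{N}\sum_i x_i^2$, data with large $x$ values force a small learning rate.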

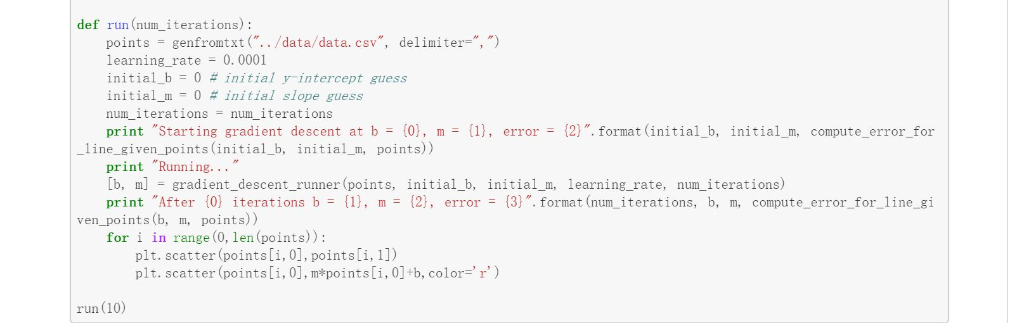

    def run(num_iterations):
        points = genfromtxt("../data/data.csv", delimiter=",")
        learning_rate = 0.0001
        initial_b = 0  # initial y-intercept guess
        initial_m = 0  # initial slope guess
        print("Starting gradient descent at b = {0}, m = {1}, error = {2}".format(
            initial_b, initial_m,
            compute_error_for_line_given_points(initial_b, initial_m, points)))
        print("Running...")
        [b, m] = gradient_descent_runner(points, initial_b, initial_m,
                                         learning_rate, num_iterations)
        print("After {0} iterations b = {1}, m = {2}, error = {3}".format(
            num_iterations, b, m,
            compute_error_for_line_given_points(b, m, points)))
        # Plot the data points and the fitted line's predictions (red)
        for i in range(0, len(points)):
            plt.scatter(points[i, 0], points[i, 1])
            plt.scatter(points[i, 0], m * points[i, 0] + b, color='r')

    run(10)
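To experiment with the learning rate without the per-point Python loops, here is a minimal NumPy sketch. The helper names (`mse`, `step`, `max_stable_learning_rate`, `error_after`) are hypothetical, and the synthetic data are only a stand-in for `../data/data.csv`, whose contents are not shown here. `step` applies the same update as `step_gradient`, and `max_stable_learning_rate` computes $2/\lambda_{\max}$ of the error's Hessian, the usual convergence bound for gradient descent on a quadratic error.

```python
import numpy as np

# Hypothetical helpers; they mirror compute_error_for_line_given_points and
# step_gradient from the notebook, vectorized with NumPy.


def mse(b, m, points):
    """Mean squared error of the line y = m*x + b over an (N, 2) array."""
    x, y = points[:, 0], points[:, 1]
    return float(np.mean((y - (m * x + b)) ** 2))


def step(b, m, points, learning_rate):
    """One gradient-descent step; same update rule as step_gradient."""
    x, y = points[:, 0], points[:, 1]
    residual = y - (m * x + b)
    b_grad = -2.0 * np.mean(residual)        # dE/db
    m_grad = -2.0 * np.mean(x * residual)    # dE/dm
    return b - learning_rate * b_grad, m - learning_rate * m_grad


def max_stable_learning_rate(points):
    """Return 2 / lambda_max, where lambda_max is the largest eigenvalue
    of the (constant) Hessian of the MSE with respect to (b, m)."""
    x = points[:, 0]
    n = len(x)
    hessian = (2.0 / n) * np.array([[n, x.sum()],
                                    [x.sum(), (x ** 2).sum()]])
    return 2.0 / np.linalg.eigvalsh(hessian).max()


def error_after(points, learning_rate, num_iterations):
    """Run gradient descent from b = m = 0 and return the final MSE."""
    b, m = 0.0, 0.0
    for _ in range(num_iterations):
        b, m = step(b, m, points, learning_rate)
    return mse(b, m, points)


# Synthetic stand-in for ../data/data.csv (an assumption, not the real data).
rng = np.random.default_rng(0)
x = rng.uniform(20, 70, size=100)
y = 1.5 * x + 30 + rng.normal(0, 10, size=100)
points = np.column_stack([x, y])

eta_max = max_stable_learning_rate(points)
start_error = mse(0.0, 0.0, points)
print(f"largest stable learning rate ~ {eta_max:.2e}")
for factor in (0.1, 0.9, 1.1):
    lr = factor * eta_max
    print(f"lr = {lr:.2e}: error after 1000 iterations = "
          f"{error_after(points, lr, 1000):.4g} (start {start_error:.4g})")
```

With `factor` below 1 the error should fall (further for 0.9 than for 0.1 after the same number of iterations), while at 1.1 it should grow without bound. To try the notebook's data instead, replace the synthetic `points` with `genfromtxt("../data/data.csv", delimiter=",")`.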

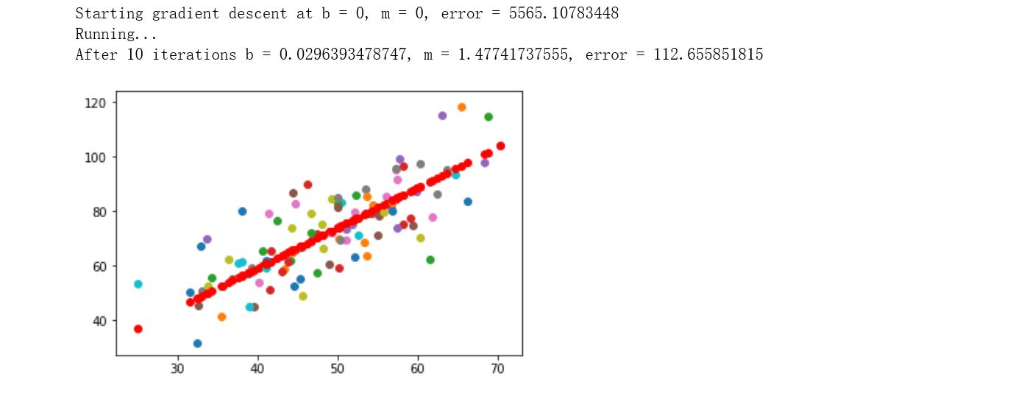

Problem 5: Gradient Descent and the learning rate [10 points]

a) By modifying the learning rate below, show how gradient descent takes longer to converge or doesn't converge at all. Can you explain in words or math why this is?

In [3]: the code from the first chunk, pasted above.

In [13]:

    # Use this to visually discuss convergence rate based on learning rate
    #for num in range(0,10):
    #    run(num)
    #    plt.show()

b) [10 points] Plot the error as a function of the number of iterations for various learning rates. Choose the rates so that the plot tells a story.

In [1]:

    # Code here
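For part b), one possible sketch: record the error before the first step and after every step, then draw one curve per learning rate on a log scale. It assumes NumPy and Matplotlib, uses a hypothetical `error_history` helper and synthetic data in place of `../data/data.csv`, and picks the rates as fractions of the largest learning rate for which gradient descent on this quadratic error converges ($2/\lambda_{\max}$ of its Hessian), so the curves cover slow, fast, near-limit, and diverging behavior.

```python
import matplotlib
matplotlib.use("Agg")  # write to a file; omit in a notebook using %matplotlib inline
import matplotlib.pyplot as plt
import numpy as np


def error_history(points, learning_rate, num_iterations):
    """Hypothetical helper: MSE at the start and after each gradient step,
    starting from b = m = 0."""
    x, y = points[:, 0], points[:, 1]
    b, m = 0.0, 0.0
    history = []
    for _ in range(num_iterations + 1):
        residual = y - (m * x + b)
        history.append(float(np.mean(residual ** 2)))
        # Same update as step_gradient: gradients of the MSE w.r.t. b and m.
        b -= learning_rate * (-2.0 * np.mean(residual))
        m -= learning_rate * (-2.0 * np.mean(x * residual))
    return history


# Synthetic stand-in for ../data/data.csv (an assumption); for the real data use
# points = np.genfromtxt("../data/data.csv", delimiter=",")
rng = np.random.default_rng(1)
x = rng.uniform(20, 70, size=100)
points = np.column_stack([x, 1.5 * x + 30 + rng.normal(0, 10, size=100)])

# Largest stable rate: 2 / (largest eigenvalue of the MSE Hessian).
n = len(x)
hessian = (2.0 / n) * np.array([[n, x.sum()], [x.sum(), (x ** 2).sum()]])
eta_max = 2.0 / np.linalg.eigvalsh(hessian).max()

# Rates relative to eta_max: very slow, fast, near the limit, diverging.
rates = [0.01 * eta_max, 0.5 * eta_max, 0.95 * eta_max, 1.05 * eta_max]
num_iterations = 100
histories = {lr: error_history(points, lr, num_iterations) for lr in rates}

fig, ax = plt.subplots()
for lr, hist in histories.items():
    ax.plot(range(num_iterations + 1), hist, label=f"lr = {lr:.1e}")
ax.set_yscale("log")
ax.set_xlabel("iteration")
ax.set_ylabel("mean squared error")
ax.legend()
fig.savefig("error_vs_iterations.png")
```

On the log scale the 1% curve should fall slowly, the 50% and 95% curves should drop sharply and then level off, and the 105% curve should climb steadily. Because the error is quadratic in $(b, m)$, it decreases monotonically for every rate below the limit even when the parameters overshoot, so the near-limit curve still slopes downward.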
