From these pages, give a list of the strategies that are used to reduce motion-to-photons latency.

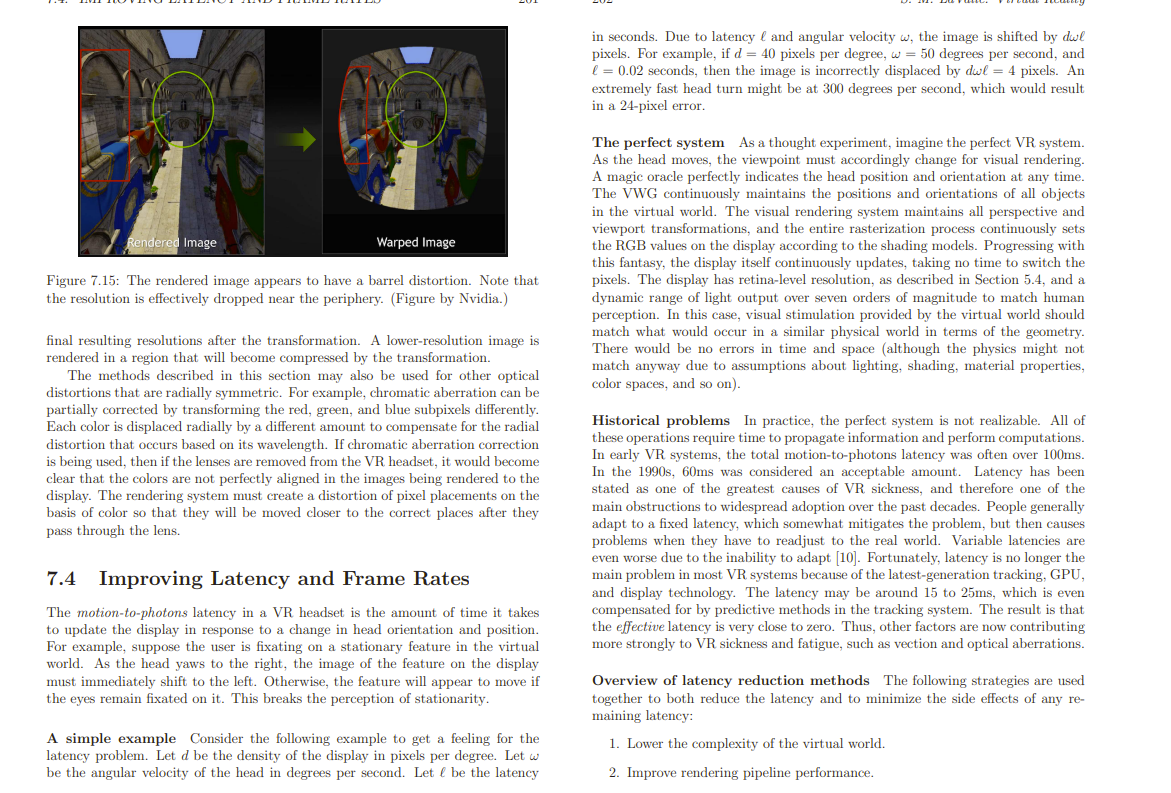

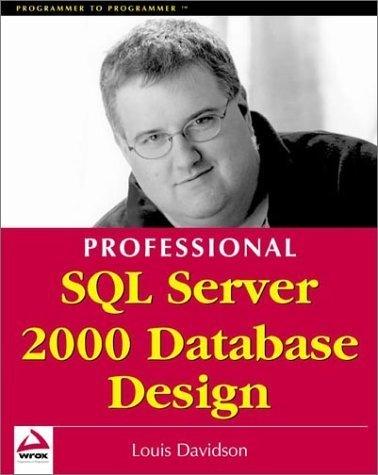

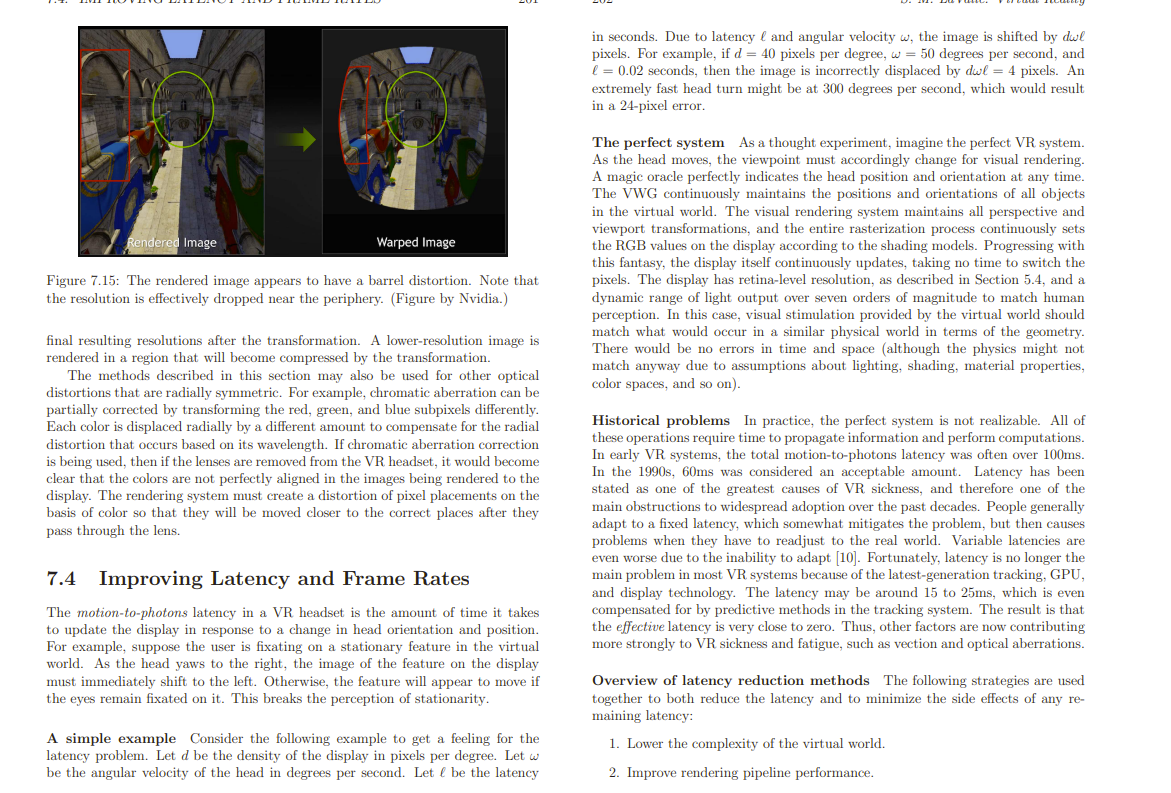

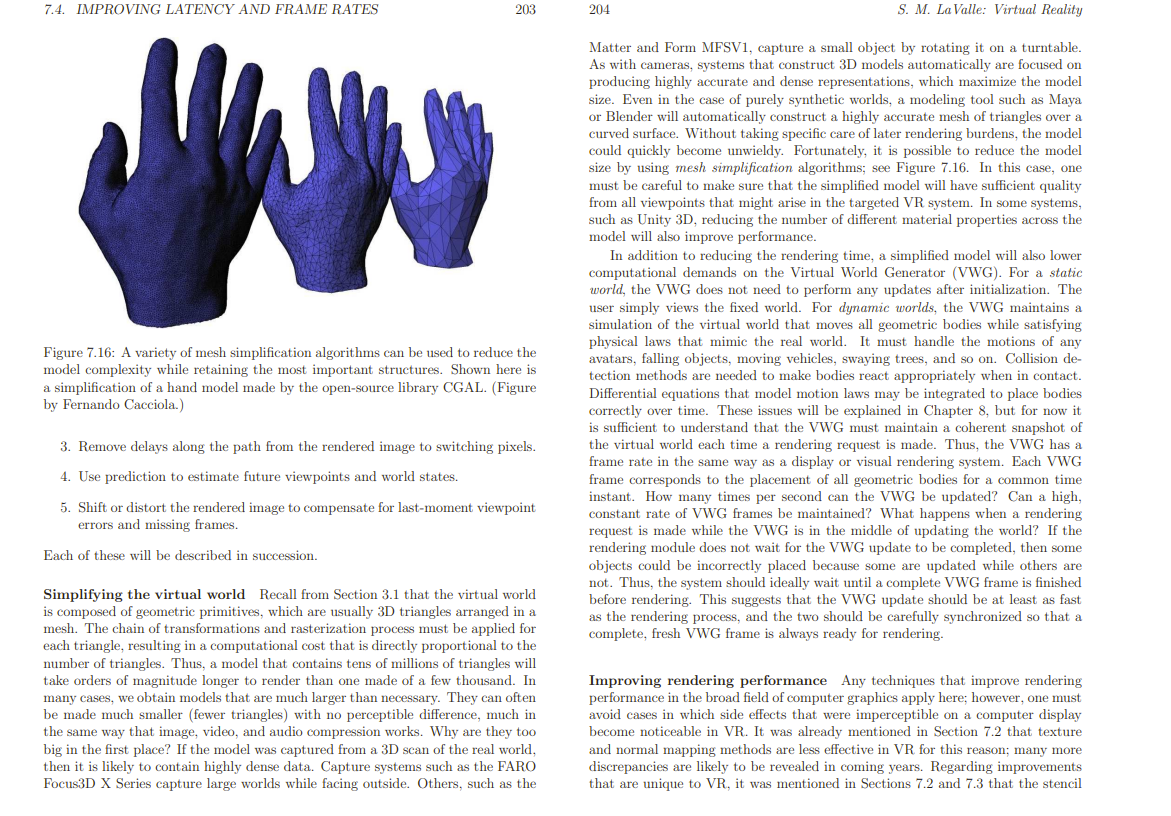

(Pages from S. M. LaValle: Virtual Reality.)

Figure 7.15: The rendered image appears to have a barrel distortion. Note that the resolution is effectively dropped near the periphery. (Figure by Nvidia.)

final resulting resolutions after the transformation. A lower-resolution image is rendered in a region that will become compressed by the transformation.

The methods described in this section may also be used for other optical distortions that are radially symmetric. For example, chromatic aberration can be partially corrected by transforming the red, green, and blue subpixels differently. Each color is displaced radially by a different amount to compensate for the radial distortion that occurs based on its wavelength. If chromatic aberration correction is being used, then if the lenses are removed from the VR headset, it would become clear that the colors are not perfectly aligned in the images being rendered to the display. The rendering system must create a distortion of pixel placements on the basis of color so that they will be moved closer to the correct places after they pass through the lens.

7.4 Improving Latency and Frame Rates

The motion-to-photons latency in a VR headset is the amount of time it takes to update the display in response to a change in head orientation and position. For example, suppose the user is fixating on a stationary feature in the virtual world. As the head yaws to the right, the image of the feature on the display must immediately shift to the left. Otherwise, the feature will appear to move if the eyes remain fixated on it. This breaks the perception of stationarity.

A simple example. Consider the following example to get a feeling for the latency problem. Let d be the density of the display in pixels per degree. Let ω be the angular velocity of the head in degrees per second. Let ℓ be the latency in seconds. Due to latency ℓ and angular velocity ω, the image is shifted by dωℓ pixels. For example, if d = 40 pixels per degree, ω = 50 degrees per second, and ℓ = 0.02 seconds, then the image is incorrectly displaced by dωℓ = 40 pixels. An extremely fast head turn might be at 300 degrees per second, which would result in a 240-pixel error.

The perfect system. As a thought experiment, imagine the perfect VR system. As the head moves, the viewpoint must accordingly change for visual rendering. A magic oracle perfectly indicates the head position and orientation at any time. The VWG continuously maintains the positions and orientations of all objects in the virtual world. The visual rendering system maintains all perspective and viewport transformations, and the entire rasterization process continuously sets the RGB values on the display according to the shading models. Progressing with this fantasy, the display itself continuously updates, taking no time to switch the pixels. The display has retina-level resolution, as described in Section 5.4, and a dynamic range of light output over seven orders of magnitude to match human perception. In this case, visual stimulation provided by the virtual world should match what would occur in a similar physical world in terms of the geometry. There would be no errors in time and space (although the physics might not match anyway due to assumptions about lighting, shading, material properties, and so on).

Historical problems. In practice, the perfect system is not realizable. All of these operations require time to propagate information and perform computations. In early VR systems, the total motion-to-photons latency was often over 100 ms. In the 1990s, 60 ms was considered an acceptable amount. Latency has been stated as one of the greatest causes of VR sickness, and therefore one of the main obstructions to widespread adoption over the past decades. People generally adapt to a fixed latency, which somewhat mitigates the problem, but then causes problems when they have to readjust to the real world. Variable latencies are even worse due to the inability to adapt [10]. Fortunately, latency is no longer the main problem in most VR systems because of the latest-generation tracking, GPU, [...] compensated for by predictive methods in the tracking system. The result is that the effective latency is very close to zero. Thus, other factors are now contributing more strongly to VR sickness and fatigue, such as vection and optical aberrations.

Overview of latency reduction methods. The following strategies are used together to both reduce the latency and to minimize the side effects of any remaining latency:

1. Lower the complexity of the virtual world.
2. Improve rendering pipeline performance.
3. Remove delays along the path from the rendered image to switching pixels.
4. Use prediction to estimate future viewpoints and world states.
5. Shift or distort the rendered image to compensate for last-moment viewpoint errors and missing frames.

Each of these will be described in succession.

Figure 7.16: A variety of mesh simplification algorithms can be used to reduce the model complexity while retaining the most important structures. Shown here is a simplification of a hand model made by the open-source library CGAL. (Figure by Fernando Cacciola.)

Simplifying the virtual world. Recall from Section 3.1 that the virtual world is composed of geometric primitives, which are usually 3D triangles arranged in a mesh. The chain of transformations and rasterization process must be applied for each triangle, resulting in a computational cost that is directly proportional to the number of triangles. Thus, a model that contains tens of millions of triangles will take orders of magnitude longer to render than one made of a few thousand. In many cases, we obtain models that are much larger than necessary. They can often be made much smaller (fewer triangles) with no perceptible difference, much in the same way that image, video, and audio compression works. Why are they too big in the first place? If the model was captured from a 3D scan of the real world, then it is likely to contain highly dense data. Capture systems such as the FARO Focus3D X Series capture large worlds while facing outside. Others, such as the Matter and Form MFSV1, capture a small object by rotating it on a turntable. As with cameras, systems that construct 3D models automatically are focused on producing highly accurate and dense representations, which maximize the model size. Even in the case of purely synthetic worlds, a modeling tool such as Maya or Blender will automatically construct a highly accurate mesh of triangles over a curved surface. Without taking specific care of later rendering burdens, the model could quickly become unwieldy. Fortunately, it is possible to reduce the model size by using mesh simplification algorithms; see Figure 7.16. In this case, one must be careful to make sure that the simplified model will have sufficient quality from all viewpoints that might arise in the targeted VR system. In some systems, such as Unity 3D, reducing the number of different material properties across the model will also improve performance.

In addition to reducing the rendering time, a simplified model will also lower computational demands on the Virtual World Generator (VWG). For a static world, the VWG does not need to perform any updates after initialization. The user simply views the fixed world. For dynamic worlds, the VWG maintains a simulation of the virtual world that moves all geometric bodies while satisfying physical laws that mimic the real world. It must handle the motions of any avatars, falling objects, moving vehicles, swaying trees, and so on. Collision detection methods are needed to make bodies react appropriately when in contact. Differential equations that model motion laws may be integrated to place bodies correctly over time. These issues will be explained in Chapter 8, but for now it is sufficient to understand that the VWG must maintain a coherent snapshot of the virtual world each time a rendering request is made. Thus, the VWG has a frame rate in the same way as a display or visual rendering system. Each VWG frame corresponds to the placement of all geometric bodies for a common time instant. How many times per second can the VWG be updated? Can a high, constant rate of VWG frames be maintained? What happens when a rendering request is made while the VWG is in the middle of updating the world? If the rendering module does not wait for the VWG update to be completed, then some objects could be incorrectly placed because some are updated while others are not. Thus, the system should ideally wait until a complete VWG frame is finished before rendering. This suggests that the VWG update should be at least as fast as the rendering process, and the two should be carefully synchronized so that a fresh VWG frame is always ready for rendering.

Improving rendering performance. Any techniques that improve rendering performance in the broad field of computer graphics apply here; however, one must avoid cases in which side effects that were imperceptible on a computer display become noticeable in VR. It was already mentioned in Section 7.2 that texture and normal mapping methods are less effective in VR for this reason; many more discrepancies are likely to be revealed in coming years. Regarding improvements that are unique to VR, it was mentioned in Sections 7.2 and 7.3 that the stencil
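
As a rough check of the simple example in the pasted text, the pixel shift caused by latency is the product of display density (pixels per degree), head angular velocity (degrees per second), and latency (seconds). The sketch below assumes exactly that formula; the function name `pixel_error` and its parameter names are made up for illustration and do not come from the book.

```python
def pixel_error(density_ppd: float, angular_velocity_dps: float, latency_s: float) -> float:
    """Return the image displacement in pixels caused by latency.

    Hypothetical helper: multiplies display density (pixels/degree),
    head angular velocity (degrees/second), and latency (seconds).
    """
    return density_ppd * angular_velocity_dps * latency_s


if __name__ == "__main__":
    # Values from the simple example: 40 px/deg, 0.02 s latency.
    moderate_turn = pixel_error(40, 50, 0.02)   # 50 deg/s head turn
    fast_turn = pixel_error(40, 300, 0.02)      # 300 deg/s head turn
    print(f"moderate turn: {moderate_turn:.1f} px")
    print(f"fast turn:     {fast_turn:.1f} px")
```

With the values from the example (40 pixels per degree, 0.02 seconds), the product works out to about 40 pixels at 50 degrees per second and about 240 pixels at 300 degrees per second, which shows why even a 20 ms latency is visible during fast head turns.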