Question

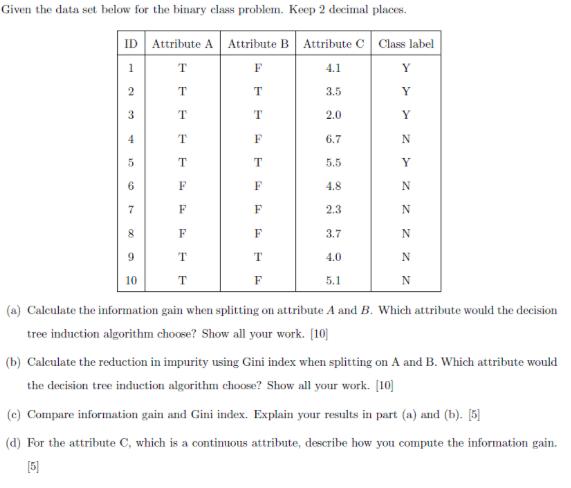

Consider the data set below for a binary classification problem. Keep 2 decimal places.

ID Attribute A Attribute B Attribute C Class label 1 T F 2 T T T T T T F F 3 4 5 6 7 9 10 F T F T F F F T F 3.5 2.0 6.7 5.5 4.8 2.3 3.7 4.0 5.1 Y Y Y N Y N ZZZZ N N N N

(a) Calculate the information gain when splitting on attributes A and B. Which attribute would the decision tree induction algorithm choose? Show all your work. [10]

(b) Calculate the reduction in impurity using the Gini index when splitting on A and B. Which attribute would the decision tree induction algorithm choose? Show all your work. [10]

(c) Compare information gain and the Gini index. Explain your results in parts (a) and (b). [5]

(d) Attribute C is a continuous attribute. Describe how you would compute the information gain for it. [5]

Step by Step Solution

There are 3 steps involved in the solution

Step: 1

(a) To calculate the information gain, we first need the entropy of the class labels and the weighted entropy after splitting on each attribute. Entropy of class labels: there are 6 positive (Y) ...
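The calculation described in this step can be sketched in Python as follows. This is a minimal illustration, not the expert's worked answer: the function names `entropy` and `information_gain` are hypothetical helpers, and the toy attribute and label lists are invented for the example because the question's table is not reproduced here. Entropy is taken as H = -Σ p_i log2(p_i) over the class proportions, and the gain is the parent entropy minus the size-weighted entropy of the branches.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(values, labels):
    """Parent entropy minus the size-weighted entropy of each branch."""
    total = len(labels)
    # Group the class labels by the attribute value of each record.
    branches = {}
    for value, label in zip(values, labels):
        branches.setdefault(value, []).append(label)
    weighted = sum(len(b) / total * entropy(b) for b in branches.values())
    return entropy(labels) - weighted


# Hypothetical toy data, NOT the table from the question.
toy_attribute = ["T", "T", "T", "F", "F", "F"]
toy_labels = ["Y", "Y", "N", "N", "N", "N"]

gain = round(information_gain(toy_attribute, toy_labels), 2)
print(gain)  # 0.46
```

To compare two attributes, one would call `information_gain` once per attribute column with the same label list and pick the attribute with the larger gain.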

Step: 2

Step: 3
