I will rate helpful. Please assist.

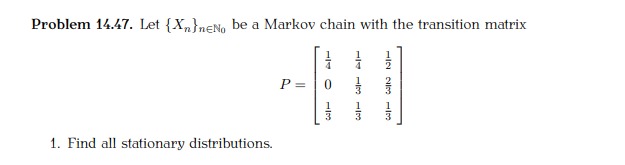

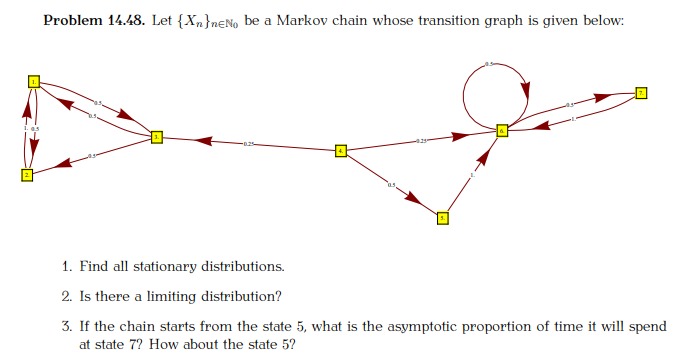

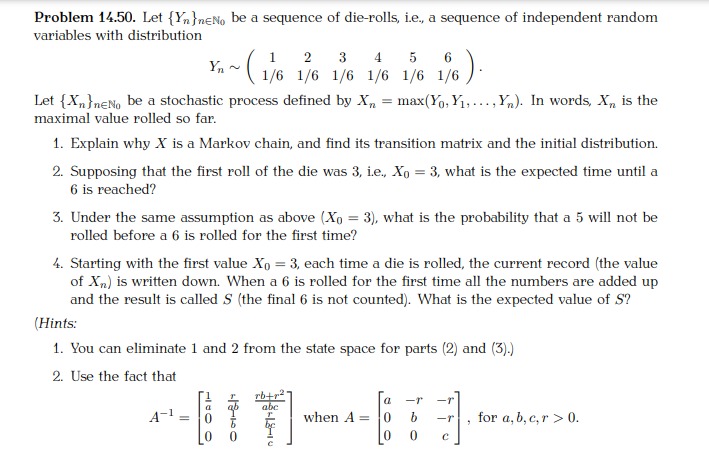

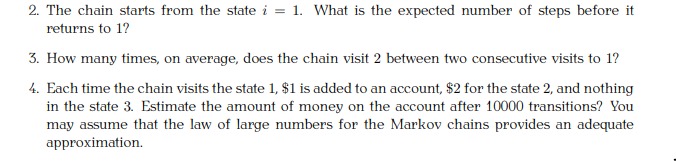

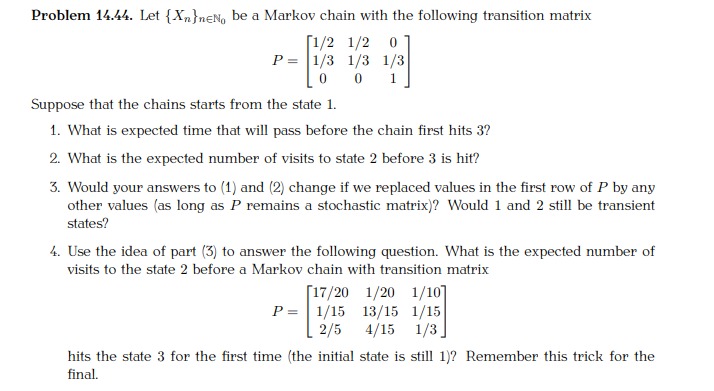

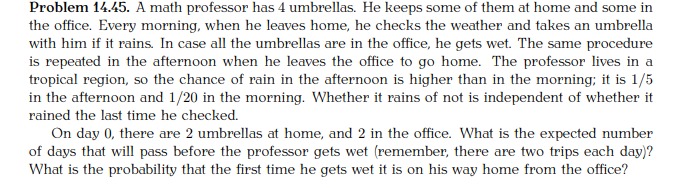

Problem 14.47. Let $\{X_n\}_{n\in\mathbb{N}_0}$ be a Markov chain with the transition matrix $P$ given in the attached image.

1. Find all stationary distributions.
2. The chain starts from the state $i = 1$. What is the expected number of steps before it returns to 1?
3. How many times, on average, does the chain visit 2 between two consecutive visits to 1?
4. Each time the chain visits the state 1, \$1 is added to an account, \$2 for the state 2, and nothing in the state 3. Estimate the amount of money on the account after the number of transitions given in the attached image. You may assume that the law of large numbers for Markov chains provides an adequate approximation.

Problem H.431. Let $\{X_n\}_{n\in\mathbb{N}_0}$ be a Markov chain whose transition graph is given below (see the attached image).

1. Find all stationary distributions.
2. Is there a limiting distribution?
3. If the chain starts from state 5, what is the asymptotic proportion of time it will spend at state 1? How about state 5?

Problem 14.511. Let $\{Y_n\}_{n\in\mathbb{N}_0}$ be a sequence of die rolls, i.e., a sequence of independent random variables with distribution

$$Y_n \sim \begin{pmatrix} 1 & 2 & 3 & 4 & 5 & 6 \\ 1/6 & 1/6 & 1/6 & 1/6 & 1/6 & 1/6 \end{pmatrix}.$$

Let $\{X_n\}_{n\in\mathbb{N}_0}$ be a stochastic process defined by $X_n = \dots$ (the definition is in the attached image).

Problem 14.44. Let $\{X_n\}_{n\in\mathbb{N}_0}$ be a Markov chain with the following transition matrix

$$P = \begin{bmatrix} 1/2 & 1/2 & 0 \\ 1/3 & 1/3 & 1/3 \\ 0 & 0 & 1 \end{bmatrix}.$$

Suppose that the chain starts from the state 1.

1. What is the expected time that will pass before the chain first hits 3?
2. What is the expected number of visits to state 2 before 3 is hit?
3. Would your answers to (1) and (2) change if we replaced values in the first row of $P$ by any other values (as long as $P$ remains a stochastic matrix)? Would 1 and 2 still be transient states?
4. Use the idea of part (3) to answer the following question. What is the expected number of visits to the state 2 before a Markov chain with transition matrix

$$P = \begin{bmatrix} 17/20 & 1/20 & 1/10 \\ 1/15 & 13/15 & 1/15 \\ 2/5 & 4/15 & 1/3 \end{bmatrix}$$

hits the state 3 for the first time (the initial state is still 1)? Remember this trick for the final.

Problem 14.45. A math professor has 4 umbrellas.
He keeps some of them at home and some in the office. Every morning, when he leaves home, he checks the weather and takes an umbrella with him if it rains. In case all the umbrellas are in the office, he gets wet. The same procedure is repeated in the afternoon when he leaves the office to go home. The professor lives in a tropical region, so the chance of rain in the afternoon is higher than in the morning; it is 1/5 in the afternoon and 1/20 in the morning. Whether it rains or not is independent of whether it rained the last time he checked. On day 0, there are 2 umbrellas at home, and 2 in the office. What is the expected number of days that will pass before the professor gets wet (remember, there are two trips each day)? What is the probability that the first time he gets wet it is on his way home from the office?
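
For Problem 14.44 above, one possible way to check hand calculations is the fundamental matrix $N = (I - Q)^{-1}$ of an absorbing chain, where $Q$ holds the transition probabilities among the transient states 1 and 2. The sketch below is only an illustration, not the textbook's method: the helper name `fundamental_matrix` is made up for this example, and it uses exact fractions from the Python standard library. Entry `N[i][j]` is the expected number of visits to transient state `j` when starting from transient state `i`, counting the visit at time 0 when `i == j`. For part 4 it assumes that visits before the first hit of state 3 depend only on the rows of states 1 and 2, so state 3 can be treated as absorbing.

```python
from fractions import Fraction as F


def fundamental_matrix(Q):
    """Return N = (I - Q)^(-1) for a square list-of-lists Q (Gauss-Jordan, exact)."""
    n = len(Q)
    # Build the augmented matrix [I - Q | I].
    A = [
        [F(int(i == j)) - F(Q[i][j]) for j in range(n)]
        + [F(int(i == j)) for j in range(n)]
        for i in range(n)
    ]
    for col in range(n):
        # Find a row with a nonzero pivot and swap it into place.
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        # Scale the pivot row so the pivot equals 1.
        pv = A[col][col]
        A[col] = [x / pv for x in A[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [x - factor * y for x, y in zip(A[r], A[col])]
    # The right half of the augmented matrix is now the inverse.
    return [row[n:] for row in A]


# Problem 14.44, parts 1 and 2: transient states 1 and 2 (indices 0 and 1).
Q = [[F(1, 2), F(1, 2)],
     [F(1, 3), F(1, 3)]]
N = fundamental_matrix(Q)
expected_steps_to_hit_3 = sum(N[0])   # total time spent in transient states
expected_visits_to_2 = N[0][1]        # visits to state 2, starting from 1

# Part 4 (assumption: state 3 treated as absorbing, rows 1 and 2 kept as given).
Q2 = [[F(17, 20), F(1, 20)],
      [F(1, 15), F(13, 15)]]
N2 = fundamental_matrix(Q2)
expected_visits_to_2_part4 = N2[0][1]

print(expected_steps_to_hit_3, expected_visits_to_2, expected_visits_to_2_part4)
```

Exact fractions are used instead of floating point so the results can be compared directly with a hand computation.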