
Question


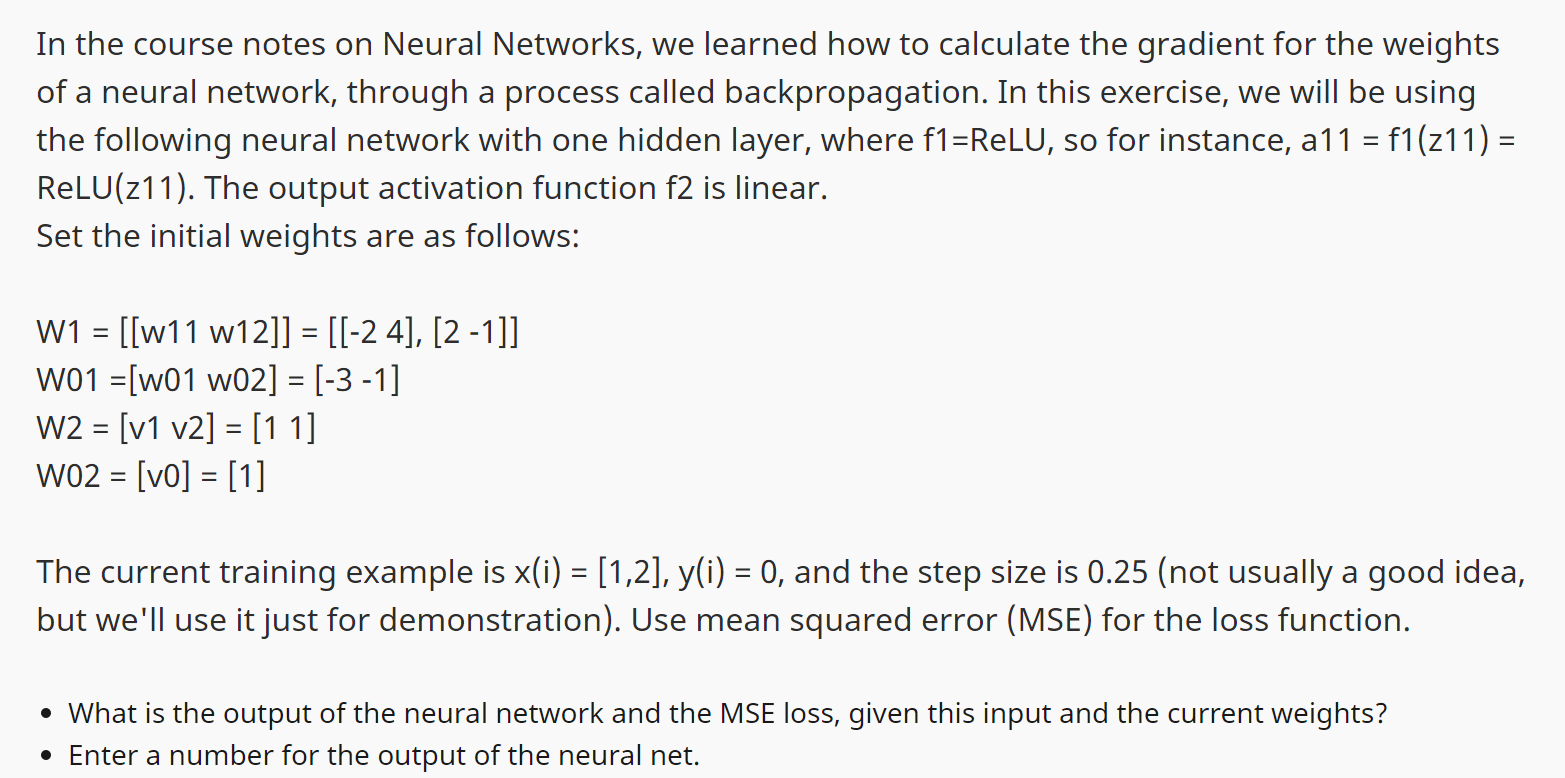

In the course notes on Neural Networks, we learned how to calculate the gradient for the weights of a neural network through a process called backpropagation. In this exercise, we will be using the following neural network with one hidden layer, where f1 = ReLU, so for instance, a11 = f1(z11) = ReLU(z11). The output activation function f2 is linear. The initial weights are as follows: W1 = [[w11 w12], [w21 w22]] = [[-2 4], [2 -1]], W01 = [w01 w02] = [-3 -1], W2 = [v1 v2] = [1 1], W02 = [v0] = [1].

The current training example is x(i) = [1, 2], y(i) = 0, and the step size is 0.25 (not usually a good idea, but we'll use it just for demonstration). Use mean squared error (MSE) for the loss function.

What is the output of the neural network and the MSE loss, given this input and the current weights? Enter a number for the output of the neural net.

Step by Step Solution

There are 3 Steps involved in it

Step: 1

SOLUTION: Compute the input to the hidden layer neurons: z1 = w11 x1 + w12 x2 + w01 ...
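The forward pass this step begins can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the expert's hidden solution: it assumes row j of W1 holds the weights into hidden unit j (matching the form z1 = w11 x1 + w12 x2 + w01 used in this step), reads W2 as [1 1], and takes the loss for the single example to be (output - y)^2. The function names `relu`, `forward`, and `squared_error` are hypothetical helpers introduced here.

```python
def relu(z):
    """ReLU activation: max(0, z)."""
    return max(0.0, z)


def forward(W1, b1, W2, b2, x):
    """One-hidden-layer forward pass with a linear output unit.

    Assumption: row j of W1 holds the weights into hidden unit j,
    i.e. z_j = W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j].
    """
    z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    a = [relu(zj) for zj in z]                       # hidden activations
    out = sum(v * aj for v, aj in zip(W2, a)) + b2   # linear output f2
    return z, a, out


def squared_error(y_hat, y):
    """Squared error for a single example (assumed form of the MSE here)."""
    return (y_hat - y) ** 2


# Weights and training example from the question
# (W2 = [1, 1] is an assumed reading of the garbled "[11]").
W1 = [[-2.0, 4.0], [2.0, -1.0]]
b1 = [-3.0, -1.0]
W2 = [1.0, 1.0]
b2 = 1.0
x = [1.0, 2.0]
y = 0.0

z, a, y_hat = forward(W1, b1, W2, b2, x)
loss = squared_error(y_hat, y)
print("z =", z, "a =", a, "output =", y_hat, "loss =", loss)
```

If the course notes instead define the pre-activation with the transposed weight matrix (each column of W1 feeding one hidden unit), transpose `W1` before calling `forward`; the resulting output and loss will differ, so check the convention against the network diagram.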


Step: 2

Step: 3
