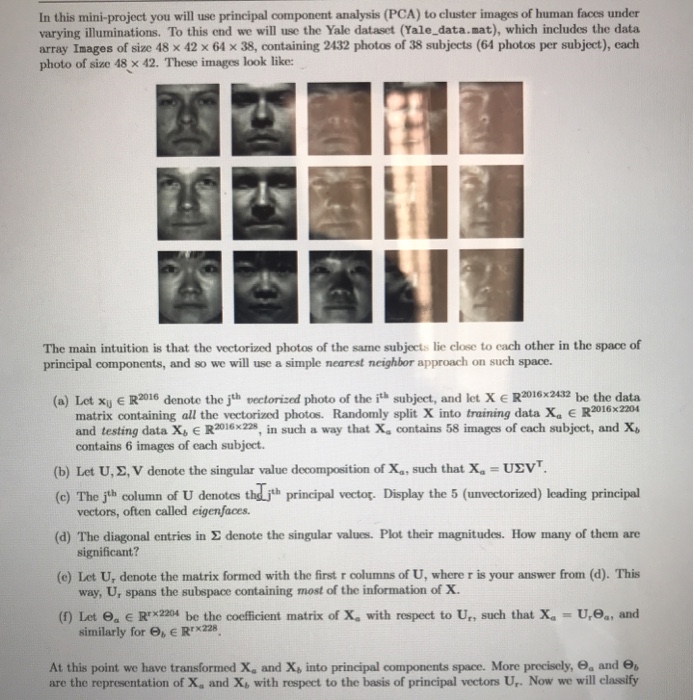

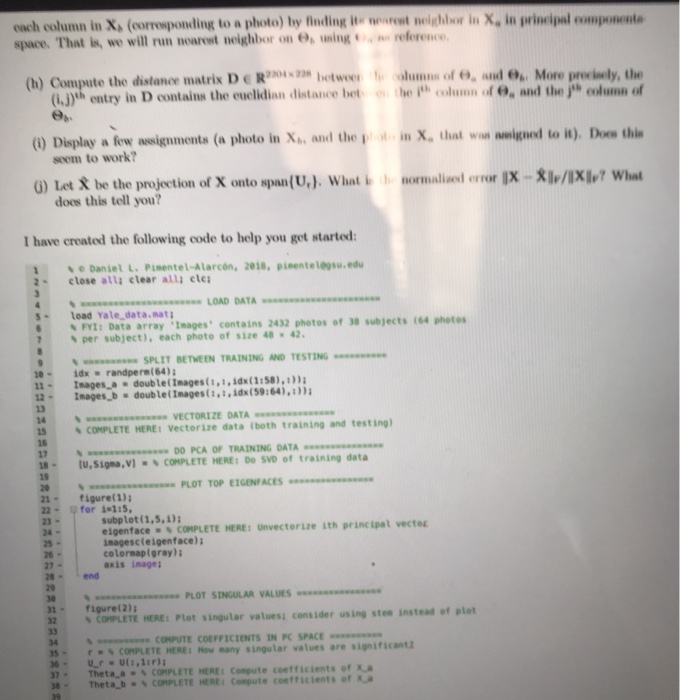

In this mini-project you will use principal component analysis (PCA) to cluster images of human faces under varying illuminations. To this end we will use the Yale dataset (Yale_data.mat), which includes the data array Images of size 48x 42 64 38, containing 2432 photos of 38 subjects (64 photos per subject), each photo of size 48 x 42. These images look like: The main intuition is that the veetorized photos of the same subjects lie close to each other in the space of principal components, and so we will use a simple nearest neighbor approach on such space. (a) let xu E R2016 denote the juh tectorized photo of the ith subject, and let X e R2016 x2432 be the data matrix containing all the vectorized photos. Randomly split X into training data X, e R2016x2204 and testing data Xb E R2016228 in such a way that ??contains 58 images of each subject, and ?. contains 6 images of each subject (b) Let U, ?,V denote the singular value decomposition of X., such that X?-U?VT (c) The jth column of U denotes theith principal vector. Display the 5 (unvectorized) leading principal (d) The diagonal entries in denote the singular values. Plot their magnitudes. How many of them are (e) Let U, denote the matrix formed with the first r columns of U, where r is your answer from (d). This (f) Let ?. E Rrx2204 be the coefficient matrix of X. with respect to Un such that Xa-Urea, and vectors, often called eigenfaces significant? way, Ur spans the subspace containing most of the information of x. similarly for 6, E Rr228. At this point we have transformed ?, and X. into principal components space. More precisely, e, and 6, are the representation of X. and X with respect to the basis of principal vectors Ur-Now we will classify In this mini-project you will use principal component analysis (PCA) to cluster images of human faces under varying illuminations. To this end we will use the Yale dataset (Yale_data.mat), which includes the data array Images of size 48x 42 64 38, containing 2432 photos of 38 subjects (64 photos per subject), each photo of size 48 x 42. These images look like: The main intuition is that the veetorized photos of the same subjects lie close to each other in the space of principal components, and so we will use a simple nearest neighbor approach on such space. (a) let xu E R2016 denote the juh tectorized photo of the ith subject, and let X e R2016 x2432 be the data matrix containing all the vectorized photos. Randomly split X into training data X, e R2016x2204 and testing data Xb E R2016228 in such a way that ??contains 58 images of each subject, and ?. contains 6 images of each subject (b) Let U, ?,V denote the singular value decomposition of X., such that X?-U?VT (c) The jth column of U denotes theith principal vector. Display the 5 (unvectorized) leading principal (d) The diagonal entries in denote the singular values. Plot their magnitudes. How many of them are (e) Let U, denote the matrix formed with the first r columns of U, where r is your answer from (d). This (f) Let ?. E Rrx2204 be the coefficient matrix of X. with respect to Un such that Xa-Urea, and vectors, often called eigenfaces significant? way, Ur spans the subspace containing most of the information of x. similarly for 6, E Rr228. At this point we have transformed ?, and X. into principal components space. More precisely, e, and 6, are the representation of X. and X with respect to the basis of principal vectors Ur-Now we will classify