Question:

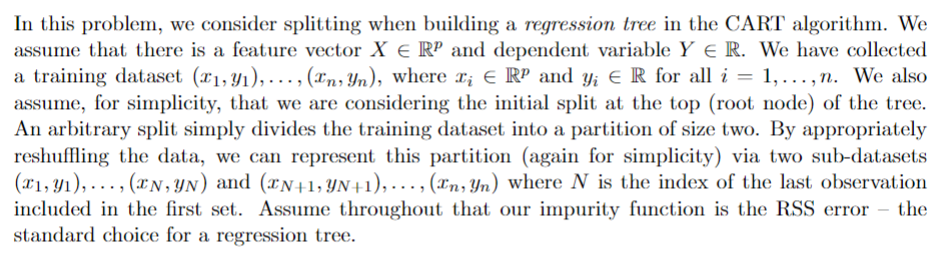

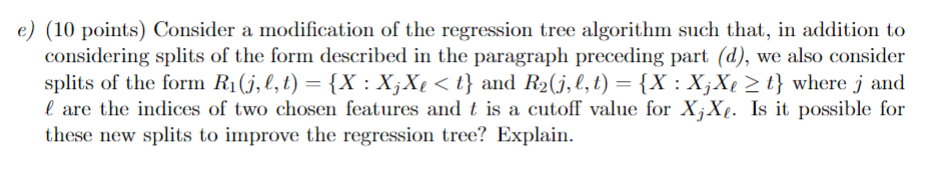

In this problem, we consider splitting when building a regression tree in the CART algorithm. We assume that there is a feature vector $X \in \mathbb{R}^p$ and dependent variable $Y \in \mathbb{R}$. We have collected a training dataset $(x_1, y_1), \ldots, (x_n, y_n)$, where $x_i \in \mathbb{R}^p$ and $y_i \in \mathbb{R}$ for all $i = 1, \ldots, n$. We also assume, for simplicity, that we are considering the initial split at the top (root node) of the tree. An arbitrary split simply divides the training dataset into a partition of size two. By appropriately reshuffling the data, we can represent this partition (again for simplicity) via two sub-datasets $(x_1, y_1), \ldots, (x_N, y_N)$ and $(x_{N+1}, y_{N+1}), \ldots, (x_n, y_n)$, where $N$ is the index of the last observation included in the first set. Assume throughout that our impurity function is the RSS error, the standard choice for a regression tree.

(e) (10 points) Consider a modification of the regression tree algorithm such that, in addition to considering splits of the form described in the paragraph preceding part (d), we also consider splits of the form $R_1(j, \ell, t) = \{X : X_j X_\ell < t\}$ and $R_2(j, \ell, t) = \{X : X_j X_\ell \ge t\}$, where $j$ and $\ell$ are the indices of two chosen features and $t$ is a cutoff value for $X_j X_\ell$. Is it possible for these new splits to improve the regression tree? Explain.
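
For reference, the RSS impurity of a partition written this way can be expressed as below. The symbols $\bar{y}_1$, $\bar{y}_2$ and the name $\mathrm{RSS}(N)$ are notation assumed here for illustration; they do not appear in the problem statement.

$$
\mathrm{RSS}(N) = \sum_{i=1}^{N} (y_i - \bar{y}_1)^2 + \sum_{i=N+1}^{n} (y_i - \bar{y}_2)^2,
\qquad
\bar{y}_1 = \frac{1}{N} \sum_{i=1}^{N} y_i,
\quad
\bar{y}_2 = \frac{1}{n - N} \sum_{i=N+1}^{n} y_i .
$$

A split is preferred when it makes this quantity smaller, so enlarging the set of candidate splits can never increase the best achievable RSS at a node.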

Step by Step Solution

In the context of regression trees and the CART (Classification and Regression Trees) algorithm, the primary goal is to find optimal splits that minimize ...
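
One way to explore part (e) numerically is the minimal sketch below. It is not taken from the original solution. It assumes the new splits threshold the product of two features, and it uses made-up noise-free data in which the response depends only on the sign of that product. The helper names `split_rss` and `best_threshold_rss` are hypothetical.

```python
import numpy as np


def split_rss(y_left, y_right):
    """RSS of a two-way partition: squared deviations from each side's mean."""
    rss = 0.0
    for part in (y_left, y_right):
        if part.size:  # an empty side contributes nothing
            rss += float(np.sum((part - part.mean()) ** 2))
    return rss


def best_threshold_rss(z, y):
    """Smallest RSS over all splits {z < t} / {z >= t} of a single score z."""
    order = np.argsort(z)
    z_sorted, y_sorted = z[order], y[order]
    best = split_rss(y_sorted, y_sorted[:0])  # baseline: no split at all
    for k in range(1, len(y_sorted)):
        if z_sorted[k] == z_sorted[k - 1]:
            continue  # no threshold separates tied values
        best = min(best, split_rss(y_sorted[:k], y_sorted[k:]))
    return best


# Synthetic data (an assumption for illustration): y depends only on the
# sign of x_1 * x_2, a pattern no single axis-aligned cut can isolate.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
y = np.where(X[:, 0] * X[:, 1] < 0, 0.0, 1.0)

# Best root split using one feature at a time (the standard CART candidates).
axis_rss = min(best_threshold_rss(X[:, j], y) for j in range(X.shape[1]))

# Best root split on the product feature (the additional candidates in part (e)).
product_rss = best_threshold_rss(X[:, 0] * X[:, 1], y)

print("best axis-aligned RSS:", axis_rss)
print("best product-split RSS:", product_rss)
```

On this data the product split at $t = 0$ separates the two response values exactly, while every single-feature split leaves both values on at least one side. This only illustrates the training RSS at the root. It does not show that the modified tree generalizes better, and the larger candidate set also costs more to search.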
