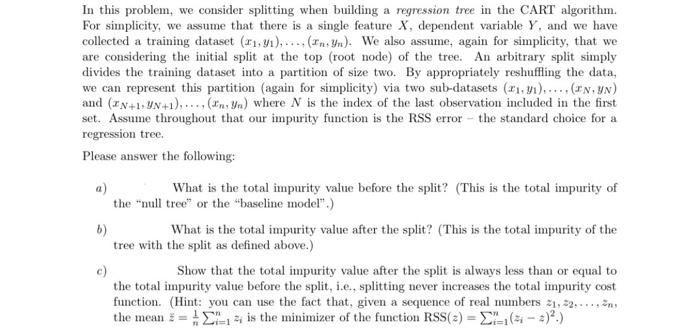

In this problem, we consider splitting when building a regression tree in the CART algorithm. For simplicity, we assume that there is a single feature X, dependent variable Y, and we have collected a training dataset (x1,y1),,(xn,yn). We also assume, again for simplicity, that we are considering the initial split at the top (root node) of the tree. An arbitrary split simply divides the training dataset into a partition of size two. By appropriately reshuffling the data, we can represent this partition (again for simplicity) via two sub-datasets (x1,y1),,(xN,yN) and (xN+1,yN+1),,(xn,yn) where N is the index of the last observation included in the first set. Assume throughout that our impurity function is the RSS error - the standard choice for a regression tree. Please answer the following: a) What is the total impurity value before the split? (This is the total impurity of the "null tree" or the "baseline model".) b) What is the total impurity value after the split? (This is the total impurity of the tree with the split as defined above.) c) Show that the total impurity value after the split is always less than or equal to the total impurity value before the split, i.e, splitting never increases the total impurity cost function. (Hint: you can use the fact that, given a sequence of real numbers z1,z2,,zn, the mean z=n1i=1nzi is the minimizer of the function RSS(z)=i=1n(ziz)2.) In this problem, we consider splitting when building a regression tree in the CART algorithm. For simplicity, we assume that there is a single feature X, dependent variable Y, and we have collected a training dataset (x1,y1),,(xn,yn). We also assume, again for simplicity, that we are considering the initial split at the top (root node) of the tree. An arbitrary split simply divides the training dataset into a partition of size two. By appropriately reshuffling the data, we can represent this partition (again for simplicity) via two sub-datasets (x1,y1),,(xN,yN) and (xN+1,yN+1),,(xn,yn) where N is the index of the last observation included in the first set. Assume throughout that our impurity function is the RSS error - the standard choice for a regression tree. Please answer the following: a) What is the total impurity value before the split? (This is the total impurity of the "null tree" or the "baseline model".) b) What is the total impurity value after the split? (This is the total impurity of the tree with the split as defined above.) c) Show that the total impurity value after the split is always less than or equal to the total impurity value before the split, i.e, splitting never increases the total impurity cost function. (Hint: you can use the fact that, given a sequence of real numbers z1,z2,,zn, the mean z=n1i=1nzi is the minimizer of the function RSS(z)=i=1n(ziz)2.)