Question

Just need the answer to double-check mine. This was already posted and given an explanation, but they didn't give the answer, so it's of no help.

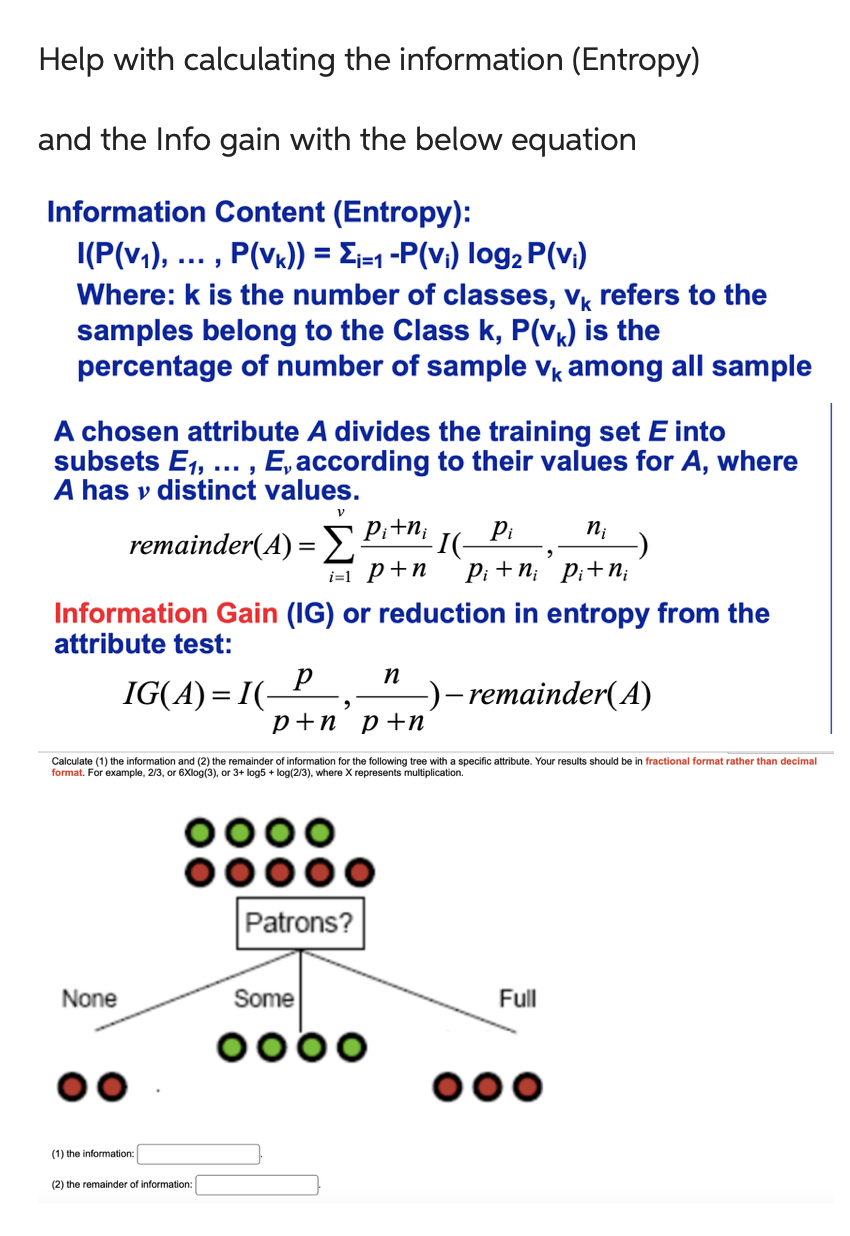

Help with calculating the information (entropy) and the information gain with the equations below.

Information content (entropy):

$$I\big(P(v_1), \ldots, P(v_k)\big) = \sum_{i=1}^{k} -P(v_i)\,\log_2 P(v_i)$$

where $k$ is the number of classes, $v_k$ refers to the samples that belong to class $k$, and $P(v_k)$ is the fraction of samples $v_k$ among all samples.

A chosen attribute $A$ divides the training set $E$ into subsets $E_1, \ldots, E_v$ according to their values for $A$, where $A$ has $v$ distinct values:

$$\mathrm{remainder}(A) = \sum_{i=1}^{v} \frac{p_i + n_i}{p + n}\, I\!\left(\frac{p_i}{p_i + n_i}, \frac{n_i}{p_i + n_i}\right)$$

Information gain (IG), or the reduction in entropy from the attribute test:

$$IG(A) = I\!\left(\frac{p}{p + n}, \frac{n}{p + n}\right) - \mathrm{remainder}(A)$$

Calculate (1) the information and (2) the remainder of information for the following tree with a specific attribute. Your results should be in fractional format rather than decimal format. For example, 2/3, or 6×log(3), or 3+log5+log(2/3), where × represents multiplication.

(1) the information:

(2) the remainder of information:
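For checking a hand calculation, the three formulas above can be sketched in Python. This is only a minimal sketch: the function names are made up for illustration, and the counts below are hypothetical placeholders, because the actual tree is given only in the image. It also assumes that `p` and `n` are the counts of the two classes at the root, and that `p_i` and `n_i` are the counts in each subset.

```python
from math import log2


def entropy(counts):
    """I(P(v_1), ..., P(v_k)) computed from raw class counts."""
    total = sum(counts)
    # Classes with zero samples contribute nothing (0 * log2(0) is taken as 0).
    return -sum((c / total) * log2(c / total) for c in counts if c > 0)


def remainder(subsets):
    """Weighted entropy after the split; subsets is a list of (p_i, n_i) pairs."""
    total = sum(p_i + n_i for p_i, n_i in subsets)
    return sum((p_i + n_i) / total * entropy((p_i, n_i)) for p_i, n_i in subsets)


def information_gain(p, n, subsets):
    """IG(A) = I(p/(p+n), n/(p+n)) - remainder(A)."""
    return entropy((p, n)) - remainder(subsets)


# Hypothetical counts, NOT the ones from the image: replace with your tree's values.
p, n = 6, 6
subsets = [(0, 2), (4, 0), (2, 4)]

print("information:", entropy((p, n)))
print("remainder:  ", remainder(subsets))
print("gain:       ", information_gain(p, n, subsets))
```

The script prints decimals, so it is only useful for confirming that a fractional answer evaluates to the same number. With the placeholder counts above, the root is split evenly between the two classes, so the information is exactly 1 bit.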
