Question: Kindly solve. Consider the Linear Regression Model: 1. For any $X = x$, let $Y = x\beta + U$, where $\beta \in \mathbb{R}^k$. 2. …

![f(u; θ) is a distribution on ℝ : E_θ[U] = 0,](https://s3.amazonaws.com/si.experts.images/answers/2024/06/6675b347452bd_6396675b3471c67c.jpg)

![Var_θ[U] = σ², σ > 0}. 4. Sampling model: {Y_i}, i = 1, ..., n, is an](https://s3.amazonaws.com/si.experts.images/answers/2024/06/6675b347d18d9_6396675b3479ba9d.jpg)


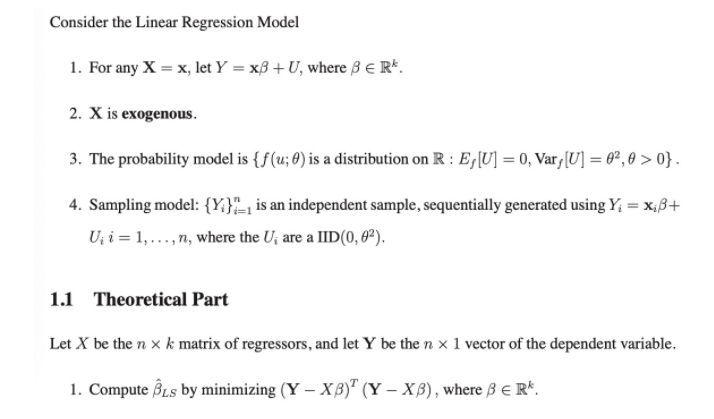

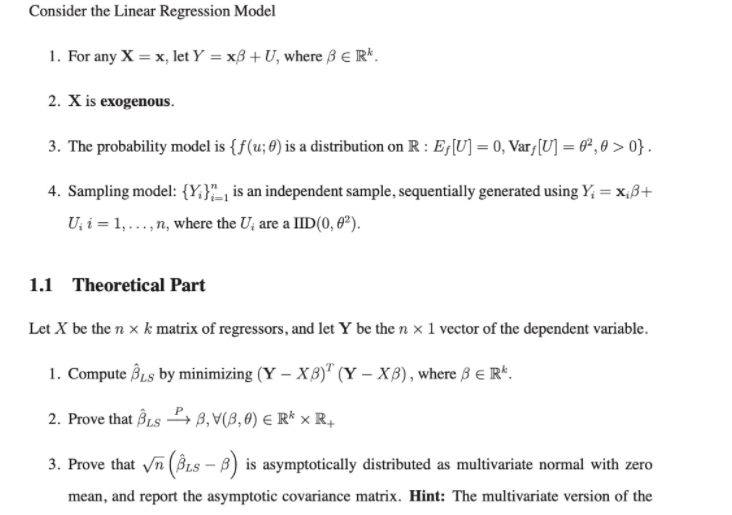

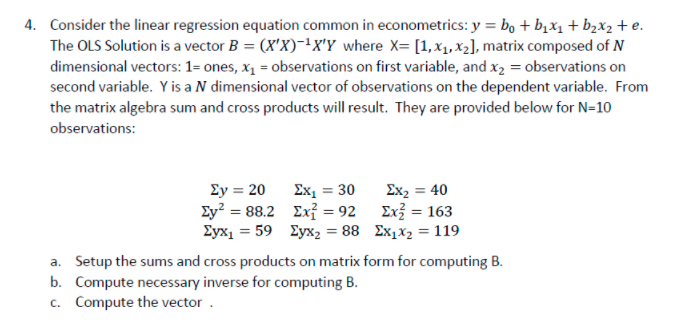

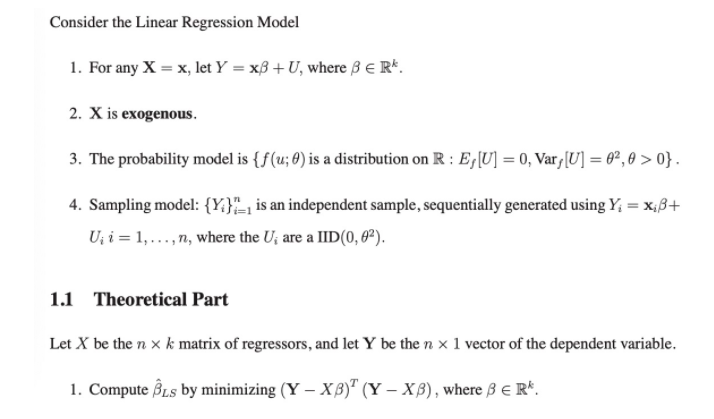

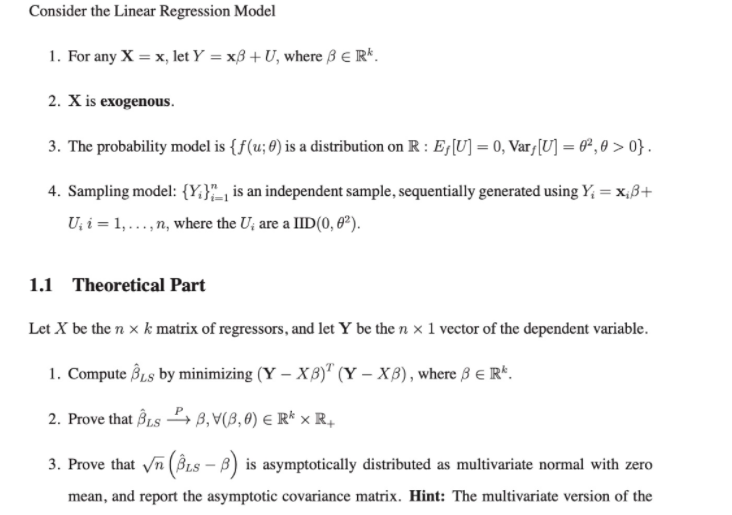

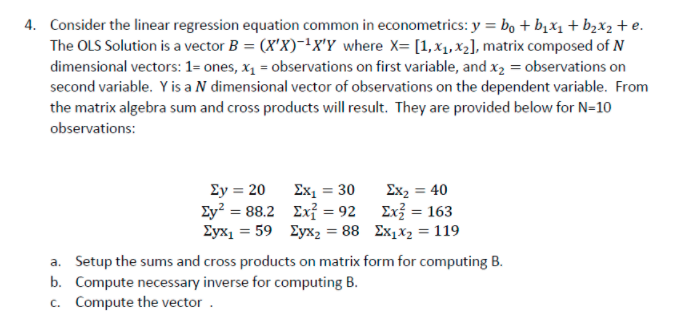

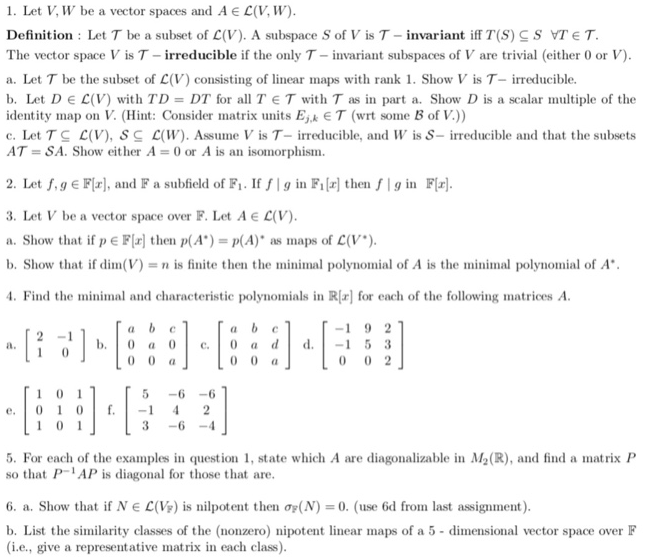

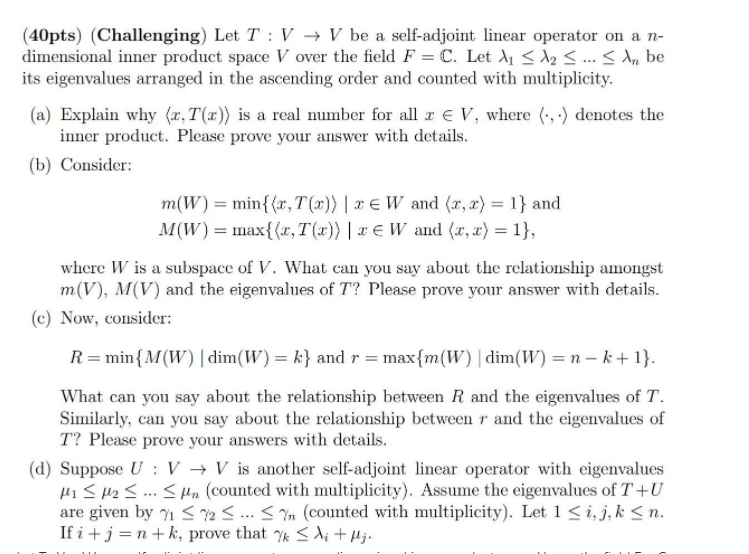

Consider the Linear Regression Model

1. For any $X = x$, let $Y = x\beta + U$, where $\beta \in \mathbb{R}^k$.
2. $X$ is exogenous.
3. The probability model is $\{ f(u; \theta) \text{ is a distribution on } \mathbb{R} : E_\theta[U] = 0,\ \mathrm{Var}_\theta[U] = \sigma^2,\ \sigma > 0 \}$.
4. Sampling model: $\{Y_i\}_{i=1}^{n}$ is an independent sample, sequentially generated using $Y_i = x_i\beta + U_i$, $i = 1, \ldots, n$, where the $U_i$ are IID$(0, \sigma^2)$.

1.1 Theoretical Part

Let $X$ be the $n \times k$ matrix of regressors, and let $Y$ be the $n \times 1$ vector of the dependent variable.

1. Compute $\hat{\beta}_{LS}$ by minimizing $(Y - X\beta)^\top (Y - X\beta)$, where $\beta \in \mathbb{R}^k$.
2. Prove that $\hat{\beta}_{LS} \xrightarrow{p} \beta$, $\forall (\beta, \sigma) \in \mathbb{R}^k \times \mathbb{R}_+$.
3. Prove that $\sqrt{n}\,(\hat{\beta}_{LS} - \beta)$ is asymptotically distributed as multivariate normal with zero mean, and report the asymptotic covariance matrix. Hint: The multivariate version of the …

4\. Consider the linear regression equation common in econometrics: $y = b_0 + b_1 x_1 + b_2 x_2 + e$. The OLS solution is the vector $B = (X^\top X)^{-1} X^\top Y$, where $X = [\mathbf{1}, x_1, x_2]$ is a matrix composed of $N$-dimensional vectors: $\mathbf{1}$ = ones, $x_1$ = observations on the first variable, and $x_2$ = observations on the second variable. $Y$ is an $N$-dimensional vector of observations on the dependent variable. The matrix algebra produces sums and cross products. They are provided below for $N = 10$ observations:

$\sum y = 20$, $\sum x_1 = 30$, $\sum x_2 = 40$, $\sum y^2 = 88.2$, $\sum x_1^2 = 92$, $\sum x_2^2 = 163$, $\sum y x_1 = 59$, $\sum y x_2 = 88$, $\sum x_1 x_2 = 119$

a. Set up the sums and cross products in matrix form for computing $B$.
b. Compute the inverse needed for computing $B$.
c. Compute the vector $B$.

1\. Let $V, W$ be vector spaces and $A \in \mathcal{L}(V, W)$.

Definition: Let $\mathcal{T}$ be a subset of $\mathcal{L}(V)$. A subspace $S$ of $V$ is $\mathcal{T}$-invariant iff $T(S) \subseteq S$ for all $T \in \mathcal{T}$. The vector space $V$ is $\mathcal{T}$-irreducible if the only $\mathcal{T}$-invariant subspaces of $V$ are trivial (either $0$ or $V$).

a. Let $\mathcal{T}$ be the subset of $\mathcal{L}(V)$ consisting of linear maps with rank 1. Show $V$ is $\mathcal{T}$-irreducible.
b. Let $D \in \mathcal{L}(V)$ with $TD = DT$ for all $T \in \mathcal{T}$, with $\mathcal{T}$ as in part a. Show $D$ is a scalar multiple of the identity map on $V$. (Hint: Consider matrix units $E_{ij} \in \mathcal{T}$ with respect to some basis $\mathcal{B}$ of $V$.)
c. Let $\mathcal{T} \subseteq \mathcal{L}(V)$, $\mathcal{S} \subseteq \mathcal{L}(W)$. Assume $V$ is $\mathcal{T}$-irreducible, $W$ is $\mathcal{S}$-irreducible, and that the subsets satisfy $A\mathcal{T} = \mathcal{S}A$. Show either $A = 0$ or $A$ is an isomorphism.

2\. Let $f, g \in F[x]$, and $F$ a subfield of $F_1$. If $f \mid g$ in $F_1[x]$ then $f \mid g$ in $F[x]$.

3\. Let $V$ be a vector space over $F$. Let $A \in \mathcal{L}(V)$.

a. Show that if $p \in F[x]$ then $p(A^*) = p(A)^*$ as maps of $\mathcal{L}(V^*)$.
b. Show that if $\dim(V) = n$ is finite then the minimal polynomial of $A$ is the minimal polynomial of $A^*$.

4\. Find the minimal and characteristic polynomials in $\mathbb{R}[x]$ for each of the following matrices $A$.

5\. For each of the examples in question 1, state which $A$ are diagonalizable in $M_n(\mathbb{R})$, and find a matrix $P$ so that $P^{-1}AP$ is diagonal for those that are.

6\. a. Show that if $N \in \mathcal{L}(V_F)$ is nilpotent then $\sigma_p(N) = 0$. (Use 6d from last assignment.)
b. List the similarity classes of the (nonzero) nilpotent linear maps of a 5-dimensional vector space over $F$ (i.e., give a representative matrix in each class).

(40pts) (Challenging) Let $T : V \to V$ be a self-adjoint linear operator on an $n$-dimensional inner product space $V$ over the field $F = \mathbb{C}$. Let $\lambda_1 \le \lambda_2 \le \cdots \le \lambda_n$ be its eigenvalues arranged in ascending order and counted with multiplicity.

(a) Explain why $\langle x, T(x) \rangle$ is a real number for all $x \in V$, where $\langle \cdot, \cdot \rangle$ denotes the inner product. Please prove your answer with details.
(b) Consider $m(W) = \min\{ \langle x, T(x) \rangle \mid x \in W \text{ and } \langle x, x \rangle = 1 \}$ and $M(W) = \max\{ \langle x, T(x) \rangle \mid x \in W \text{ and } \langle x, x \rangle = 1 \}$, where $W$ is a subspace of $V$. What can you say about the relationship amongst $m(V)$, $M(V)$ and the eigenvalues of $T$? Please prove your answer with details.
(c) Now, consider $R = \min\{ M(W) \mid \dim(W) = k \}$ and $r = \max\{ m(W) \mid \dim(W) = n - k + 1 \}$. What can you say about the relationship between $R$ and the eigenvalues of $T$? Similarly, what can you say about the relationship between $r$ and the eigenvalues of $T$? Please prove your answers with details.
(d) Suppose $U : V \to V$ is another self-adjoint linear operator with eigenvalues $\mu_1 \le \mu_2 \le \cdots \le \mu_n$ (counted with multiplicity). Assume the eigenvalues of $T + U$ are given by …
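For the numerical OLS exercise with N = 10 observations, parts a to c can be checked with a short script. This is only a sketch: it reads the two garbled sums in the source as Σx1² = 92 and Σx2² = 163 (an assumption), and the helper name `invert` is illustrative, not something the question specifies. Exact fractions are used so the inverse is free of rounding error.

```python
from fractions import Fraction


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with exact fractions."""
    n = len(matrix)
    # Augment the matrix with the identity: [A | I].
    aug = [
        [Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    for col in range(n):
        # Swap in a row with a nonzero pivot (fails if the matrix is singular).
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so the pivot equals 1.
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate the pivot column from every other row.
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    # The right half now holds the inverse.
    return [row[n:] for row in aug]


# Sums and cross products from the question (the two squared sums are an assumed reading).
N = 10
sum_y, sum_x1, sum_x2 = 20, 30, 40
sum_x1x1, sum_x2x2, sum_x1x2 = 92, 163, 119
sum_yx1, sum_yx2 = 59, 88

# Part a: X'X and X'Y for X = [1, x1, x2].
xtx = [
    [N, sum_x1, sum_x2],
    [sum_x1, sum_x1x1, sum_x1x2],
    [sum_x2, sum_x1x2, sum_x2x2],
]
xty = [sum_y, sum_yx1, sum_yx2]

# Part b: (X'X)^{-1}.
xtx_inv = invert(xtx)

# Part c: B = (X'X)^{-1} X'Y.
b = [sum(inv_ij * v for inv_ij, v in zip(row, xty)) for row in xtx_inv]
print([str(v) for v in b])  # b0, b1, b2 = -13, 1, 3
```

Under that reading of the sums, the coefficients satisfy all three normal equations exactly, for example 10(-13) + 30(1) + 40(3) = 20.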

Step by Step Solution
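
A sketch of the theoretical part of the regression question, not the site's own answer. It assumes that $X$ has full column rank $k$, that $\frac{1}{n} X^\top X \xrightarrow{p} Q$ with $Q$ positive definite, and that a central limit theorem applies to $n^{-1/2} X^\top U$. None of these assumptions is stated explicitly in the question, and the symbol $Q$ is introduced here only for illustration.

```latex
% Part 1: least squares estimator (assumes X'X is invertible).
\begin{aligned}
S(\beta) &= (Y - X\beta)^\top (Y - X\beta)
          = Y^\top Y - 2\beta^\top X^\top Y + \beta^\top X^\top X \beta, \\
\frac{\partial S}{\partial \beta} &= -2 X^\top Y + 2 X^\top X \beta = 0
  \;\Longrightarrow\; X^\top X \hat{\beta}_{LS} = X^\top Y, \\
\hat{\beta}_{LS} &= (X^\top X)^{-1} X^\top Y, \qquad
\frac{\partial^2 S}{\partial \beta \, \partial \beta^\top} = 2 X^\top X \succ 0 .
\end{aligned}

% Parts 2 and 3: substitute Y = X\beta + U (assumes plim X'X/n = Q, Q positive definite).
\begin{aligned}
\hat{\beta}_{LS} - \beta
  &= \Big(\tfrac{1}{n} X^\top X\Big)^{-1} \tfrac{1}{n} X^\top U
  \;\xrightarrow{p}\; Q^{-1} \cdot 0 = 0, \\
\sqrt{n}\,(\hat{\beta}_{LS} - \beta)
  &= \Big(\tfrac{1}{n} X^\top X\Big)^{-1} \tfrac{1}{\sqrt{n}} X^\top U
  \;\xrightarrow{d}\; N\big(0,\; \sigma^2 Q^{-1}\big).
\end{aligned}
```

The second-order condition shows the stationary point is a minimum. The asymptotic covariance $\sigma^2 Q^{-1}$ follows because $n^{-1/2} X^\top U$ has limiting variance $\sigma^2 Q$ when the $U_i$ are IID$(0, \sigma^2)$ and $X$ is exogenous, so $Q^{-1} (\sigma^2 Q) Q^{-1} = \sigma^2 Q^{-1}$.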
