
Question

1 Approved Answer

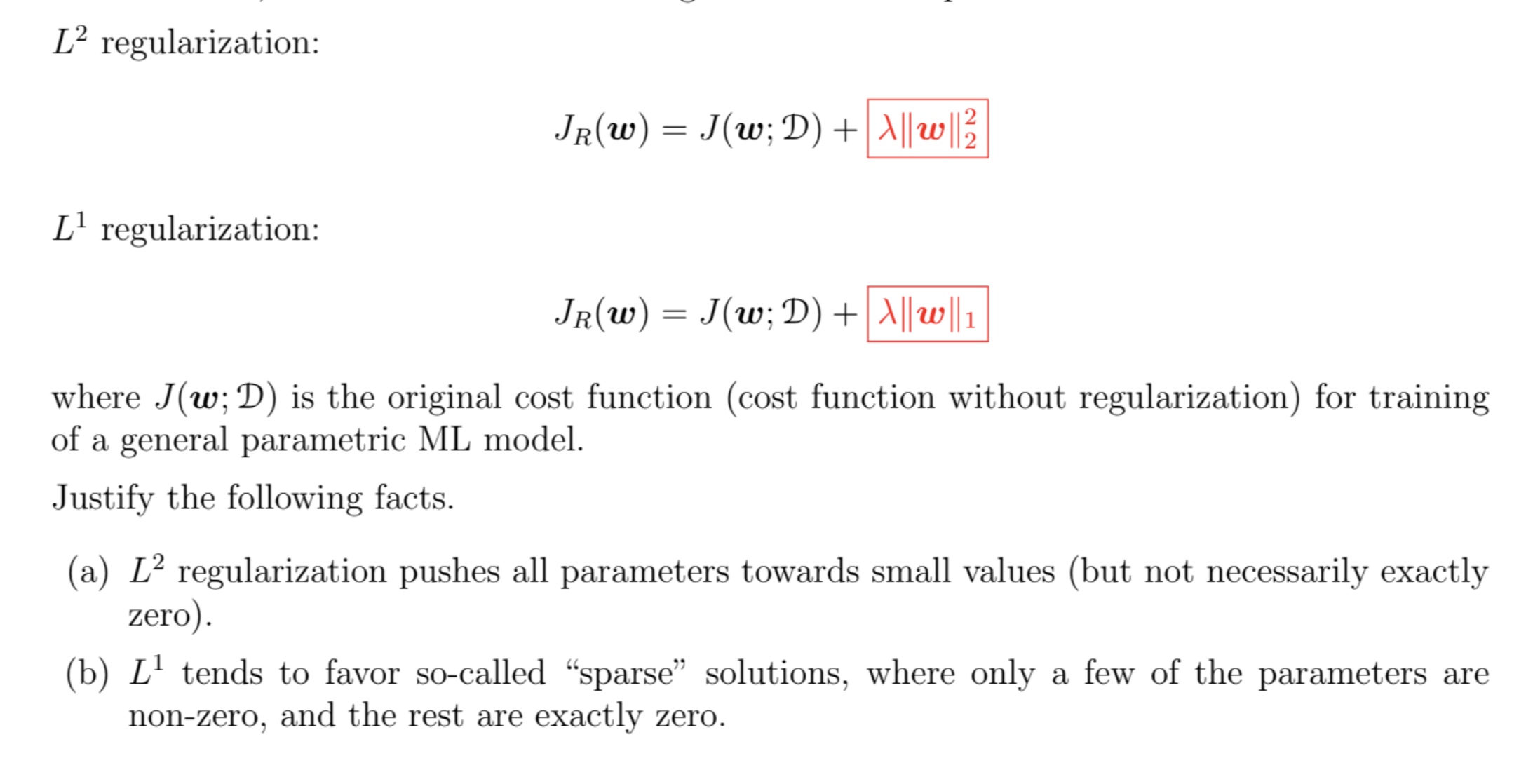

L2 regularization: $J_R(w) = J(w; D) + \|w\|_2^2$

L1 regularization: $J_R(w) = J(w; D) + \|w\|_1$

where $J(w; D)$ is the original cost function (without regularization) for training a general parametric ML model.
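Comparing how each penalty term pulls on a single parameter $w_i$ helps with both parts below. The gradient of the squared L2 norm and the subdifferential of the L1 norm (the standard generalization of the gradient to the non-differentiable point $w_i = 0$) are:

```latex
\nabla_w \|w\|_2^2 = 2w,
\qquad
\partial \|w\|_1 = \Big\{ g \;:\; g_i = \operatorname{sign}(w_i) \text{ if } w_i \neq 0,\;\; g_i \in [-1, 1] \text{ if } w_i = 0 \Big\}
```

Near zero the L2 pull $2w_i$ fades away, so with L2 the point $w_i = 0$ is optimal only if $\partial J(w; D)/\partial w_i = 0$ exactly there. The L1 pull keeps magnitude 1 for every nonzero $w_i$, and $w_i = 0$ satisfies the optimality condition for that coordinate whenever $|\partial J(w; D)/\partial w_i| \le 1$ at that point (assuming $J$ is differentiable), which is how exact zeros arise.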

Justify the following facts.

(a) L2 regularization pushes all parameters towards small values, but not necessarily to exactly zero.

(b) L1 regularization tends to favor so-called "sparse" solutions, where only a few of the parameters are nonzero and the rest are exactly zero.
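A minimal numerical sketch of facts (a) and (b), under a hypothetical assumption not made in the question: the cost is separable and quadratic, $J(w; D) = \frac{a}{2}\sum_i (w_i - c_i)^2$, where $c$ is the unregularized optimum and $a > 0$ is its curvature. Under that assumption each coordinate has a closed-form minimizer (uniform shrinkage for L2, soft-thresholding at $1/a$ for L1), and a brute-force grid search double-checks both. The names `l2_minimizer`, `l1_minimizer`, `a` and `c` are illustrative only.

```python
import numpy as np

# Hypothetical setup (not from the question): separable quadratic cost
#   J(w; D) = (a/2) * sum_i (w_i - c_i)^2,  with penalty weight 1 as in J_R.

def l2_minimizer(c, a):
    # Gradient a(w - c) + 2w = 0 gives w = a c / (a + 2): every coordinate
    # is scaled down, but it is zero only if c_i was already zero.
    return a * c / (a + 2.0)

def l1_minimizer(c, a):
    # Subgradient condition 0 in a(w - c) + d|w| gives soft-thresholding:
    # coordinates with |c_i| <= 1/a become exactly zero.
    return np.sign(c) * np.maximum(np.abs(c) - 1.0 / a, 0.0)

a = 2.0
c = np.array([3.0, 0.8, -0.3, 0.05, -2.0])

w_l2 = l2_minimizer(c, a)
w_l1 = l1_minimizer(c, a)

# Brute-force check of the closed forms, one coordinate at a time.
grid = np.linspace(-5.0, 5.0, 200001)
for ci, w2, w1 in zip(c, w_l2, w_l1):
    l2_vals = 0.5 * a * (grid - ci) ** 2 + grid ** 2       # L2-penalized objective
    l1_vals = 0.5 * a * (grid - ci) ** 2 + np.abs(grid)    # L1-penalized objective
    assert abs(grid[np.argmin(l2_vals)] - w2) < 1e-3
    assert abs(grid[np.argmin(l1_vals)] - w1) < 1e-3

print("L2 solution:", w_l2, "nonzeros:", np.count_nonzero(w_l2))
print("L1 solution:", w_l1, "nonzeros:", np.count_nonzero(w_l1))
```

With a = 2 the L2 solution is exactly half of c in every coordinate, so all five entries stay nonzero, while the L1 solution subtracts 1/a = 0.5 from each magnitude and sets the two coordinates with |c_i| <= 0.5 (namely -0.3 and 0.05) to exactly zero.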
