MP2.2. Approximate the Derivatives // python

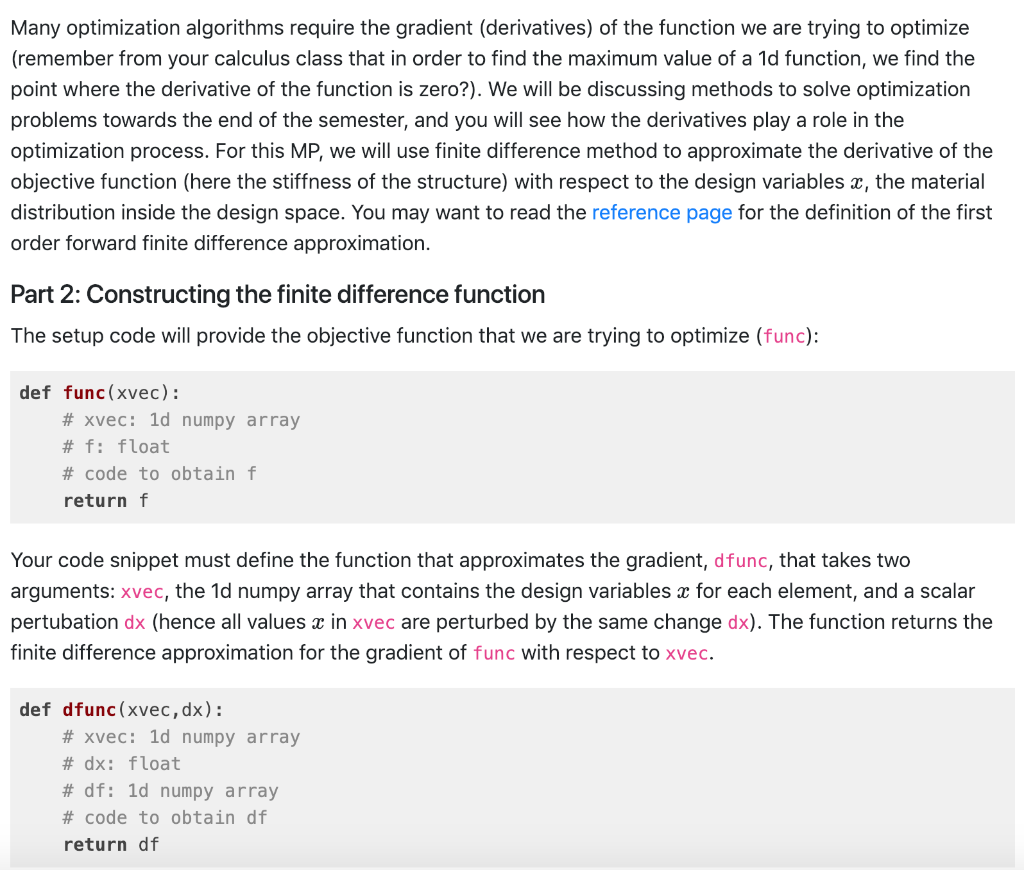

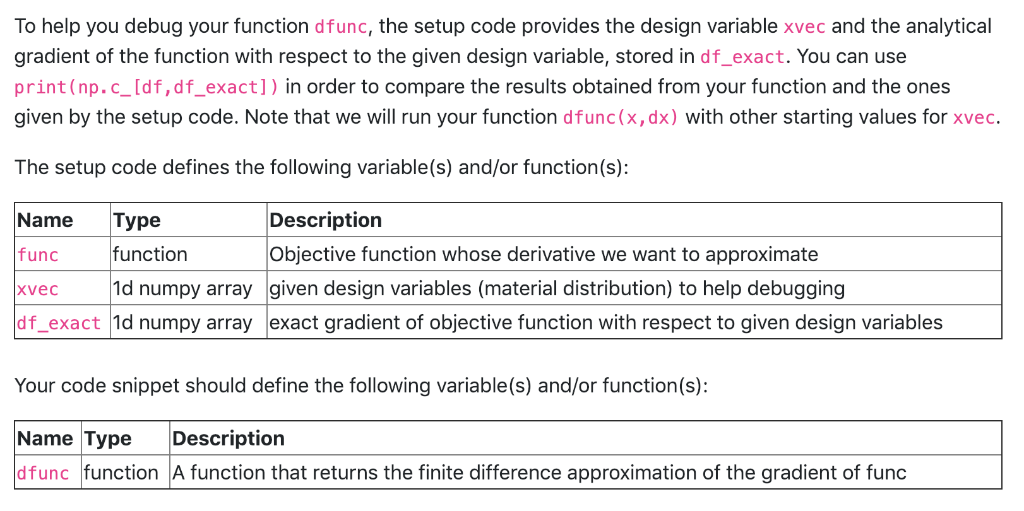

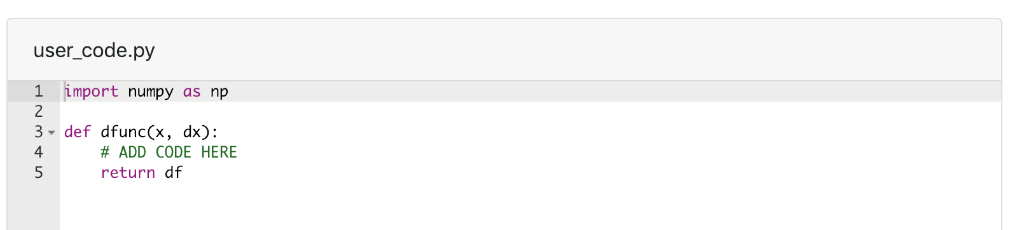

Many optimization algorithms require the gradient (derivatives) of the function being optimized. (Recall from calculus that to find the maximum of a 1D function, we look for the point where its derivative is zero.) We will discuss methods for solving optimization problems toward the end of the semester, and you will see how derivatives play a role in the optimization process.

For this MP, we will use the finite difference method to approximate the derivative of the objective function (here, the stiffness of the structure) with respect to the design variables x, the material distribution inside the design space. You may want to read the reference page for the definition of the first-order forward finite difference approximation.

Part 2: Constructing the finite difference function

The setup code will provide the objective function that we are trying to optimize (`func`):

    def func(xvec):
        # xvec: 1d numpy array
        # f: float
        # code to obtain f
        return f

Your code snippet must define the function `dfunc`, which approximates the gradient. It takes two arguments: `xvec`, the 1d numpy array that contains the design variable x for each element, and a scalar perturbation `dx` (the same step `dx` is used to perturb every entry of `xvec`). The function returns the finite difference approximation of the gradient of `func` with respect to `xvec`.

    def dfunc(xvec, dx):
        # xvec: 1d numpy array
        # dx: float
        # df: 1d numpy array
        # code to obtain df
        return df

To help you debug `dfunc`, the setup code provides the design variables `xvec` and the analytical gradient of the function with respect to those design variables, stored in `df_exact`. You can use `print(np.c_[df, df_exact])` to compare the results from your function, side by side, with the ones given by the setup code.

Note that we will run your function `dfunc(x, dx)` with other starting values for `xvec`.
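For convenience, the first-order forward finite difference referred to above is usually written as follows. This is the standard textbook form, not copied from the course reference page, so check it against that page; the symbols $\mathbf{e}_i$ (the $i$-th unit vector) and $n$ (the number of design variables) are notation introduced here.

$$
\frac{\partial f}{\partial x_i}(\mathbf{x}) \approx \frac{f(\mathbf{x} + \Delta x\,\mathbf{e}_i) - f(\mathbf{x})}{\Delta x}, \qquad i = 1, \dots, n
$$

Each component of the gradient needs one extra evaluation of $f$, with only the $i$-th entry of $\mathbf{x}$ shifted by $\Delta x$. The error of this approximation shrinks proportionally to $\Delta x$ until floating-point rounding starts to dominate.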
The setup code defines the following variable(s) and/or function(s):

| Name | Type | Description |
| --- | --- | --- |
| func | function | Objective function whose derivative we want to approximate |
| xvec | 1d numpy array | Given design variables (material distribution) to help debugging |
| df_exact | 1d numpy array | Exact gradient of the objective function with respect to the given design variables |

Your code snippet should define the following variable(s) and/or function(s):

| Name | Type | Description |
| --- | --- | --- |
| dfunc | function | A function that returns the finite difference approximation of the gradient of func |

user_code.py

    import numpy as np

    def dfunc(x, dx):
        # ADD CODE HERE
        return df
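As a way to try the idea locally before submitting, here is a minimal sketch of a forward-difference gradient checked against a known answer. It is only one possible approach. The `func` below is a hypothetical stand-in (a simple sum of squares), since the real objective comes from the setup code, and the `xvec`, `dx`, and `df_exact` values are made up for illustration.

```python
import numpy as np


def func(xvec):
    # Hypothetical stand-in objective (NOT the course's stiffness function).
    return float(np.sum(xvec**2))


def dfunc(xvec, dx):
    # Forward difference: perturb one entry at a time by the same step dx.
    xvec = np.asarray(xvec, dtype=float)
    f0 = func(xvec)               # unperturbed value, evaluated once
    df = np.zeros_like(xvec)
    for i in range(xvec.size):
        xp = xvec.copy()          # copy so the caller's array is untouched
        xp[i] += dx
        df[i] = (func(xp) - f0) / dx
    return df


# Illustrative values; the setup code supplies its own xvec and df_exact.
xvec = np.array([1.0, 2.0, 3.0])
df_exact = 2.0 * xvec             # analytical gradient of the stand-in func
df = dfunc(xvec, 1e-6)

# Side-by-side comparison: column 0 is the approximation, column 1 is exact.
print(np.c_[df, df_exact])
```

Copying `xvec` inside the loop matters: modifying the array in place would leave earlier perturbations in later evaluations and would also change the caller's data.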