
Multi-Arm Bandit Problem:

Background

In digital advertising, Click-Through Rate (CTR) is a critical metric that measures the effectiveness of an advertisement. It is calculated as the ratio of the number of users who click on an ad to the number of users who view the ad. A higher CTR indicates more successful engagement with the audience, which can lead to increased conversions and revenue. From time to time, advertisers experiment with various elements/targeting of an ad to optimise the ROI.
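The ratio described above can be written as a small helper. This is only an illustrative sketch: the function name `click_through_rate` and the choice to return 0.0 for an ad that was never shown are assumptions, not part of the assignment.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return clicks / impressions; 0.0 if the ad was never shown (assumed convention)."""
    if clicks < 0 or impressions < 0 or clicks > impressions:
        raise ValueError("need 0 <= clicks <= impressions")
    if impressions == 0:
        return 0.0
    return clicks / impressions


# 30 clicks out of 1000 views
print(click_through_rate(30, 1000))
```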

Scenario

Imagine an innovative digital advertising agency, AdMasters Inc., that specializes in maximizing click-through rates (CTR) for their clients' advertisements. One of their clients has identified four key tunable elements in their ads: Age, City, Gender, and Mobile Operating System (OS). These elements significantly influence user engagement and conversion rates. The client is keen to optimize their CTR while minimizing resource expenditure.

Objective

Optimize the CTR of digital ads by employing Multi-Arm Bandit algorithms. The system should dynamically and efficiently allocate ad displays to maximize overall CTR.

Dataset

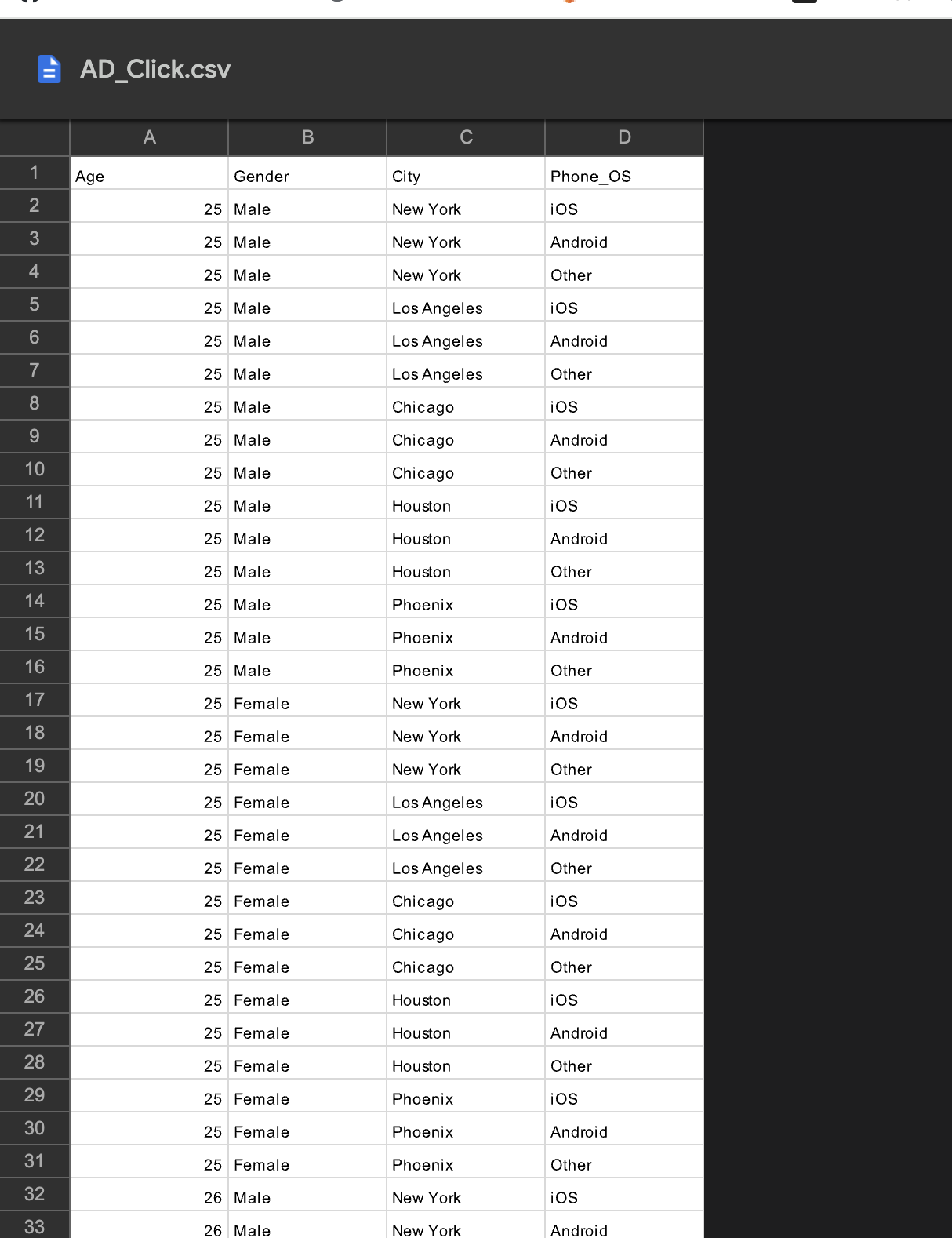

The dataset for Ads contains the following unique features/characteristics:

Age Range: :

City: Possible Values: 'New York', 'Los Angeles', 'Chicago', 'Houston', 'Phoenix'

Gender: Possible Values: 'Male', 'Female'

OS: Possible Values: 'iOS', 'Android', 'Other'

A screenshot of the dataset (ADClick.csv) is attached for reference.

Requirements and Deliverables:

Implement the Multi-Arm Bandit Problem for the scenario given above, using all of the policy methods listed below.

Initialize constants

# Constants

epsilon

# Initialize value function and policy

Load Dataset

# Python Code for Dataset loading and print dataset statistics

#write your python code below this line

Design a CTR Environment M

# Code for Dataset loading and print dataset statistics along with reward function

#write your python code below this line

class CTREnvironment:
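One possible shape for the `CTREnvironment` stub is sketched below. Because the contents of ADClick.csv are not reproduced here, this version does not read the dataset; it assumes each arm is an ad variant with a fixed, hidden click probability. The constructor argument `true_ctrs`, the `seed` parameter, and the `step` method are all hypothetical names chosen for illustration.

```python
import random


class CTREnvironment:
    """Toy stand-in: each arm is an ad variant with a hidden click probability."""

    def __init__(self, true_ctrs, seed=0):
        # true_ctrs are assumed, illustrative values, not taken from ADClick.csv
        self.true_ctrs = list(true_ctrs)
        self.n_arms = len(self.true_ctrs)
        self.rng = random.Random(seed)

    def step(self, arm):
        """Show ad `arm` once; the reward is 1 for a click and 0 otherwise."""
        if not 0 <= arm < self.n_arms:
            raise IndexError("unknown arm")
        return 1 if self.rng.random() < self.true_ctrs[arm] else 0
```

A dataset-backed version would replace the fixed probabilities with rewards drawn from the rows of the CSV, once its columns are known.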

Using Random Policy M

Print all the iterations with the random policy selected for the given ad (Mandatory)

# run the environment with an agent that is guided by a random policy

#write your code below this line
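A random policy ignores everything it has observed and picks an arm uniformly at each iteration. The sketch below assumes Bernoulli click rewards with hypothetical per-arm probabilities (`true_ctrs`); the function name `run_random_policy` and the `verbose` flag are illustrative choices.

```python
import random


def run_random_policy(true_ctrs, n_rounds=1000, seed=0, verbose=False):
    """Pick a uniformly random arm every round; return (pulls, clicks) per arm."""
    rng = random.Random(seed)
    n_arms = len(true_ctrs)
    pulls = [0] * n_arms
    clicks = [0] * n_arms
    for t in range(n_rounds):
        arm = rng.randrange(n_arms)  # no learning: uniform choice
        reward = 1 if rng.random() < true_ctrs[arm] else 0
        pulls[arm] += 1
        clicks[arm] += reward
        if verbose:
            print(f"iteration {t}: arm={arm} reward={reward}")
    return pulls, clicks


# Hypothetical click probabilities for four ad variants
pulls, clicks = run_random_policy([0.02, 0.05, 0.10, 0.04], n_rounds=2000)
print("overall CTR:", sum(clicks) / sum(pulls))
```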

Using Greedy Policy M

Print all the iterations with the greedy policy selected for the given ad (Mandatory)

# run the environment with an agent that is guided by a greedy policy

#write your code below this line
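A greedy policy always plays the arm with the highest estimated CTR so far. The sketch below is one common variant, assuming each arm is tried once before exploitation begins and that rewards are Bernoulli clicks with hypothetical probabilities `true_ctrs`; the names are illustrative, not the assignment's.

```python
import random


def run_greedy(true_ctrs, n_rounds=1000, seed=0, verbose=False):
    """Try each arm once, then always exploit the best estimate so far."""
    rng = random.Random(seed)
    n_arms = len(true_ctrs)
    pulls = [0] * n_arms
    values = [0.0] * n_arms  # running estimate of each arm's CTR
    total_clicks = 0
    for t in range(n_rounds):
        if t < n_arms:
            arm = t  # initial pass over all arms (assumed warm-up)
        else:
            arm = max(range(n_arms), key=lambda a: values[a])
        reward = 1 if rng.random() < true_ctrs[arm] else 0
        pulls[arm] += 1
        values[arm] += (reward - values[arm]) / pulls[arm]  # incremental mean
        total_clicks += reward
        if verbose:
            print(f"iteration {t}: arm={arm} reward={reward}")
    return pulls, values, total_clicks
```

Because it never explores after the warm-up, this policy can lock onto a poor arm if that arm happened to get an early click.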

Using Epsilon-Greedy Policy M

Print all the iterations with the epsilon-greedy policy selected for the given ad (Mandatory)

# run the environment with an agent that is guided by an epsilon-greedy policy

#write your code below this line
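Epsilon-greedy explores a random arm with probability epsilon and otherwise exploits the current best estimate. The sketch below assumes Bernoulli click rewards with hypothetical probabilities; `run_epsilon_greedy` and its default `epsilon=0.1` are illustrative, since the assignment's constant value is not shown.

```python
import random


def run_epsilon_greedy(true_ctrs, epsilon=0.1, n_rounds=1000, seed=0, verbose=False):
    """With probability epsilon explore uniformly; otherwise exploit."""
    rng = random.Random(seed)
    n_arms = len(true_ctrs)
    pulls = [0] * n_arms
    values = [0.0] * n_arms  # running estimate of each arm's CTR
    total_clicks = 0
    for t in range(n_rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit
        reward = 1 if rng.random() < true_ctrs[arm] else 0
        pulls[arm] += 1
        values[arm] += (reward - values[arm]) / pulls[arm]
        total_clicks += reward
        if verbose:
            print(f"iteration {t}: arm={arm} reward={reward}")
    return pulls, values, total_clicks
```

Setting `epsilon=0.0` reduces this to a purely greedy policy with no warm-up, and `epsilon=1.0` reduces it to the random policy.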

Using UCB M

Print all the iterations with the UCB policy selected for the given ad (Mandatory)

# run the environment with an agent that is guided by UCB

#write your code below this line
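The assignment does not say which UCB variant to use. The sketch below assumes UCB1, where each arm's score is its estimated CTR plus an exploration bonus of sqrt(c * ln(t) / n), with c = 2 and n the number of times the arm has been shown. The reward model (Bernoulli clicks with hypothetical probabilities) and all names are illustrative.

```python
import math
import random


def run_ucb1(true_ctrs, n_rounds=1000, c=2.0, seed=0, verbose=False):
    """UCB1: play each arm once, then pick the highest upper confidence bound."""
    rng = random.Random(seed)
    n_arms = len(true_ctrs)
    pulls = [0] * n_arms
    values = [0.0] * n_arms  # running estimate of each arm's CTR
    total_clicks = 0
    for t in range(1, n_rounds + 1):
        if t <= n_arms:
            arm = t - 1  # every arm needs one pull before its bonus is defined
        else:
            arm = max(
                range(n_arms),
                key=lambda a: values[a] + math.sqrt(c * math.log(t) / pulls[a]),
            )
        reward = 1 if rng.random() < true_ctrs[arm] else 0
        pulls[arm] += 1
        values[arm] += (reward - values[arm]) / pulls[arm]
        total_clicks += reward
        if verbose:
            print(f"iteration {t}: arm={arm} reward={reward}")
    return pulls, values, total_clicks
```

The bonus shrinks as an arm is shown more often, so rarely shown arms are revisited occasionally without a separate exploration parameter.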

Plot CTR distribution for all the approaches as a separate graph M

#write your code below this line
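Before plotting, it can help to turn each policy's per-iteration rewards into a running CTR series, one series per approach. The helper below is a hypothetical sketch that uses only the standard library; the resulting list can then be passed to whatever plotting library the notebook uses.

```python
def cumulative_ctr(rewards):
    """Return the running CTR after each iteration for a 0/1 reward sequence."""
    series = []
    clicks = 0
    for shown, reward in enumerate(rewards, start=1):
        clicks += reward
        series.append(clicks / shown)
    return series


# Example with a short, made-up reward sequence
print(cumulative_ctr([1, 0, 1, 1]))
```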

Changing Exploration Percentage M

How does changing the exploration percentage (EXPLOREPERCENTAGE) affect the performance of the algorithm? Test with several different values and discuss the results.

#Implement with any MAB algorithm

#Try with different EXPLOREPERCENTAGE

#Different value of alpha
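One way to run this experiment is to treat the exploration percentage as the epsilon of an epsilon-greedy agent and compare the overall CTR across settings. That interpretation is an assumption, as are the three percentages and the click probabilities below; the values suggested in the original prompt are not recoverable here.

```python
import random


def epsilon_greedy_ctr(true_ctrs, explore_percentage, n_rounds=5000, seed=0):
    """Run epsilon-greedy once and return the overall CTR achieved."""
    rng = random.Random(seed)
    n_arms = len(true_ctrs)
    pulls = [0] * n_arms
    values = [0.0] * n_arms
    clicks = 0
    for _ in range(n_rounds):
        if rng.random() < explore_percentage:
            arm = rng.randrange(n_arms)  # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit
        reward = 1 if rng.random() < true_ctrs[arm] else 0
        pulls[arm] += 1
        values[arm] += (reward - values[arm]) / pulls[arm]
        clicks += reward
    return clicks / n_rounds


HYPOTHETICAL_CTRS = [0.02, 0.05, 0.10, 0.04]  # illustrative, not from ADClick.csv
for pct in (0.01, 0.10, 0.30):  # illustrative exploration percentages
    print(f"explore={pct:.2f} overall CTR={epsilon_greedy_ctr(HYPOTHETICAL_CTRS, pct):.4f}")
```

Results from a single seed are noisy, so averaging over several seeds gives a fairer comparison between settings.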

Conclusion M

Conclude your assignment in words by discussing the best approach for maximizing the CTR using random, greedy, epsilon-greedy and UCB.
