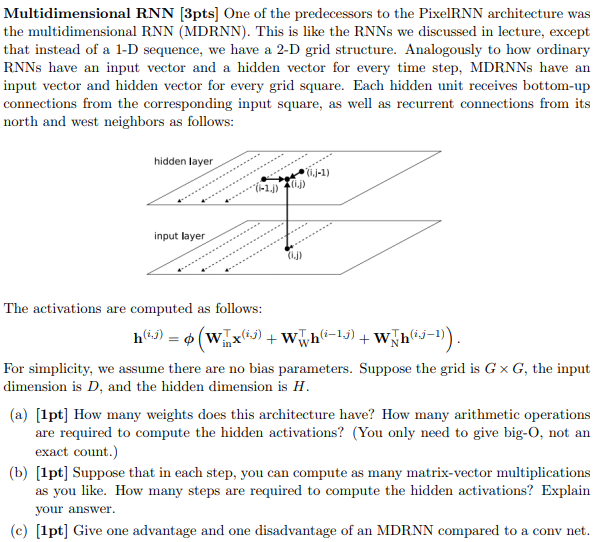

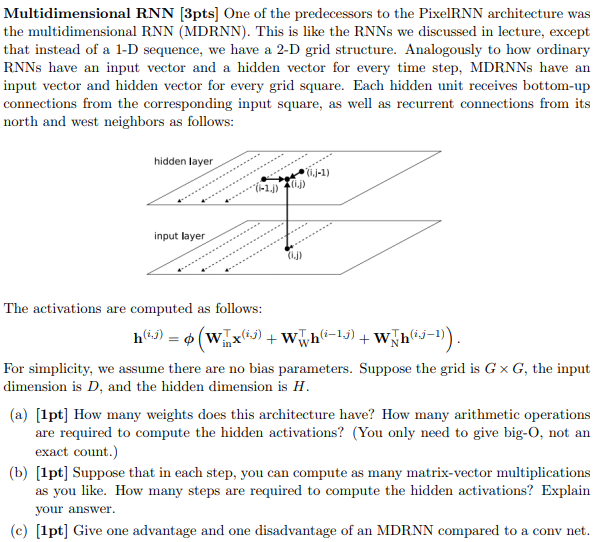

Multidimensional RNN [3pts]

One of the predecessors to the PixelRNN architecture was the multidimensional RNN (MDRNN). It is like the RNNs we discussed in lecture, except that instead of a 1-D sequence, we have a 2-D grid. Just as an ordinary RNN has an input vector and a hidden vector for every time step, an MDRNN has an input vector and a hidden vector for every grid square. Each hidden unit receives bottom-up connections from the corresponding input square, as well as recurrent connections from its north and west neighbors, as follows:

[Figure: hidden layer and input layer, showing grid square (i, j)]

The activations are computed as follows:

For simplicity, we assume there are no bias parameters.
Suppose the grid is G × G, the input dimension is D, and the hidden dimension is H.

(a) [1pt] How many weights does this architecture have? How many arithmetic operations are required to compute the hidden activations? (You only need to give a big-O bound, not an exact count.)

(b) [1pt] Suppose that in each step, you can compute as many matrix-vector multiplications as you like. How many steps are required to compute the hidden activations? Explain your answer.

(c) [1pt] Give one advantage and one disadvantage of an MDRNN compared to a conv net.
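The MDRNN described above can be sketched in a few lines of NumPy. This is a minimal sketch under assumptions that are not part of the original problem: the update is taken to be h(i, j) = tanh(W_in x(i, j) + W_N h(i-1, j) + W_W h(i, j-1)), with row index i growing southward and column index j growing eastward, tanh as the nonlinearity, and zero hidden states outside the grid. The names `mdrnn_forward`, `W_in`, `W_N`, and `W_W` are hypothetical.

```python
import numpy as np


def mdrnn_forward(x, W_in, W_N, W_W):
    """Hypothetical MDRNN forward pass with no bias parameters.

    Assumed update (not taken from the original problem):
        h[i, j] = tanh(W_in @ x[i, j] + W_N @ h[i-1, j] + W_W @ h[i, j-1])
    with hidden states outside the grid treated as zero.

    x:    input grid, shape (G, G, D)
    W_in: input-to-hidden weights, shape (H, D)
    W_N:  recurrent weights from the north neighbor, shape (H, H)
    W_W:  recurrent weights from the west neighbor, shape (H, H)
    Returns the hidden grid, shape (G, G, H).
    """
    G, G2, _ = x.shape
    assert G == G2, "expects a square G x G grid"
    H = W_in.shape[0]
    h = np.zeros((G, G, H))
    # Raster order guarantees that the north (i-1, j) and west (i, j-1)
    # neighbors are already computed when cell (i, j) is visited.
    for i in range(G):
        for j in range(G):
            pre = W_in @ x[i, j]          # bottom-up input connection
            if i > 0:
                pre += W_N @ h[i - 1, j]  # recurrent connection from the north
            if j > 0:
                pre += W_W @ h[i, j - 1]  # recurrent connection from the west
            h[i, j] = np.tanh(pre)
    return h


# Usage with small random weights.
rng = np.random.default_rng(0)
G, D, H = 4, 3, 5
x = rng.standard_normal((G, G, D))
W_in = rng.standard_normal((H, D))
W_N = rng.standard_normal((H, H))
W_W = rng.standard_normal((H, H))
h = mdrnn_forward(x, W_in, W_N, W_W)
print(h.shape)  # (4, 4, 5)
```

Raster order is just one valid schedule; the only requirement is that each cell's north and west neighbors are finished before the cell itself.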