Question: Need help in solving part 3 & part 4 . Other questions posted in Chegg are having different parameters for the functions, def cart(xTr,yTr): &

Need help in solving part 3 & part 4 . Other questions posted in Chegg are having different parameters for the functions, def cart(xTr,yTr): & def evaltree(root,xTe):

Part 3 :

Test cases for part 3 :

Test cases for part 3 :

# The tests below check that your implementation of cart returns the correct predicted values for a sample dataset

#test case 1 def cart_test1(): t=cart(xor4,yor4) return DFSxor(t)

#test case 2 def cart_test2(): y = np.random.rand(16); t = cart(xor4,y); yTe = DFSpreds(t)[:]; # Check that every label appears exactly once in the tree y.sort() yTe.sort() return np.all(np.isclose(y, yTe))

#test case 3 def cart_test3(): xRep = np.concatenate([xor2, xor2]) yRep = np.concatenate([yor2, 1-yor2]) t = cart(xRep, yRep) return DFSxorUnsplittable(t)

#test case 4 def cart_test4(): X = np.ones((5, 2)) # Create a dataset with identical examples y = np.ones(5) # On this dataset, your cart algorithm should return a single leaf # node with prediction equal to 1 t = cart(X, y) # t has no children children_check = (t.left is None) and (t.right is None) # Make sure t does not cut any feature and at any value feature_check = (t.feature is None) and (t.cut is None) # Check t's prediction prediction_check = np.isclose(t.prediction, 1) return children_check and feature_check and prediction_check

#test case 5 def cart_test5(): X = np.arange(4).reshape(-1, 1) y = np.array([0, 0, 1, 1])

t = cart(X, y) # your cart algorithm should generate one split # check whether you set t.feature and t.cut to something return t.feature is not None and t.cut is not None

runtest(cart_test1,'cart_test1') runtest(cart_test2,'cart_test2') runtest(cart_test3,'cart_test3') runtest(cart_test4,'cart_test4') runtest(cart_test5,'cart_test5')

Part 4 :

Test cases for part 4 :

Test cases for part 4 :

# The following tests check that your implementation of evaltree returns the correct predictions for two sample trees

t0 = time.time() root = cart(xTrIon, yTrIon) t1 = time.time()

tr_err = np.mean((evaltree(root,xTrIon) - yTrIon)**2) te_err = np.mean((evaltree(root,xTeIon) - yTeIon)**2)

print("Elapsed time: %.2f seconds" % (t1-t0)) print("Training RMSE : %.2f" % tr_err) print("Testing RMSE : %.2f " % te_err)

#test case 1 def evaltree_test1(): t = cart(xor4,yor4) xor4te = xor4 + (np.sign(xor4 - .5) * .1) inds = np.arange(16) np.random.shuffle(inds) # Check that shuffling and expanding the data doesn't affect the predictions return np.all(np.isclose(evaltree(t, xor4te[inds,:]), yor4[inds]))

#test case 2 def evaltree_test2(): a = TreeNode(None, None, None, None, 1) b = TreeNode(None, None, None, None, -1) c = TreeNode(None, None, None, None, 0) d = TreeNode(None, None, None, None, -1) e = TreeNode(None, None, None, None, -1) x = TreeNode(a, b, 0, 10, 0) y = TreeNode(x, c, 0, 20, 0) z = TreeNode(d, e, 0, 40, 0) t = TreeNode(y, z, 0, 30, 0) # Check that the custom tree evaluates correctly return np.all(np.isclose( evaltree(t, np.array([[45, 35, 25, 15, 5]]).T), np.array([-1, -1, 0, -1, 1])))

runtest(evaltree_test1,'evaltree_test1') runtest(evaltree_test2,'evaltree_test2')

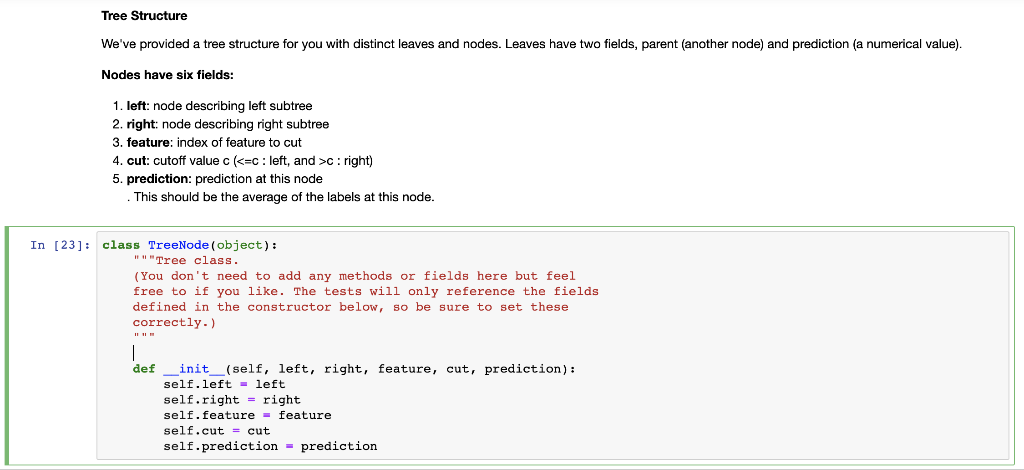

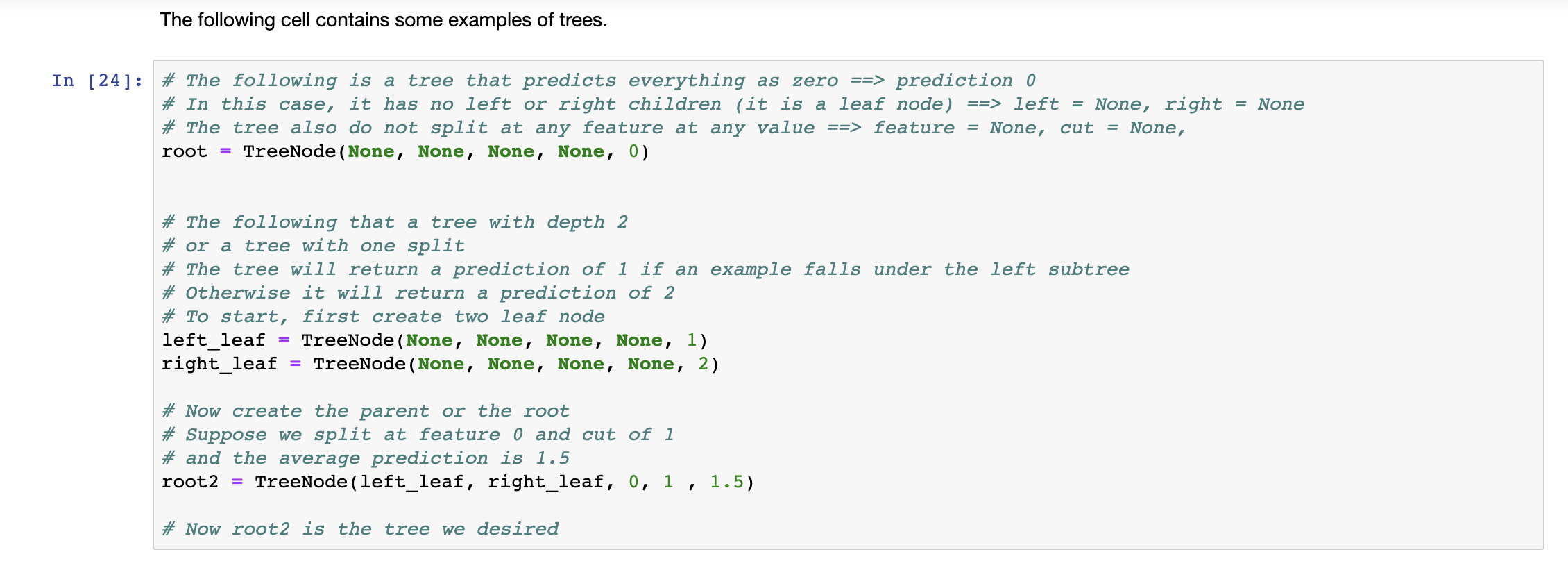

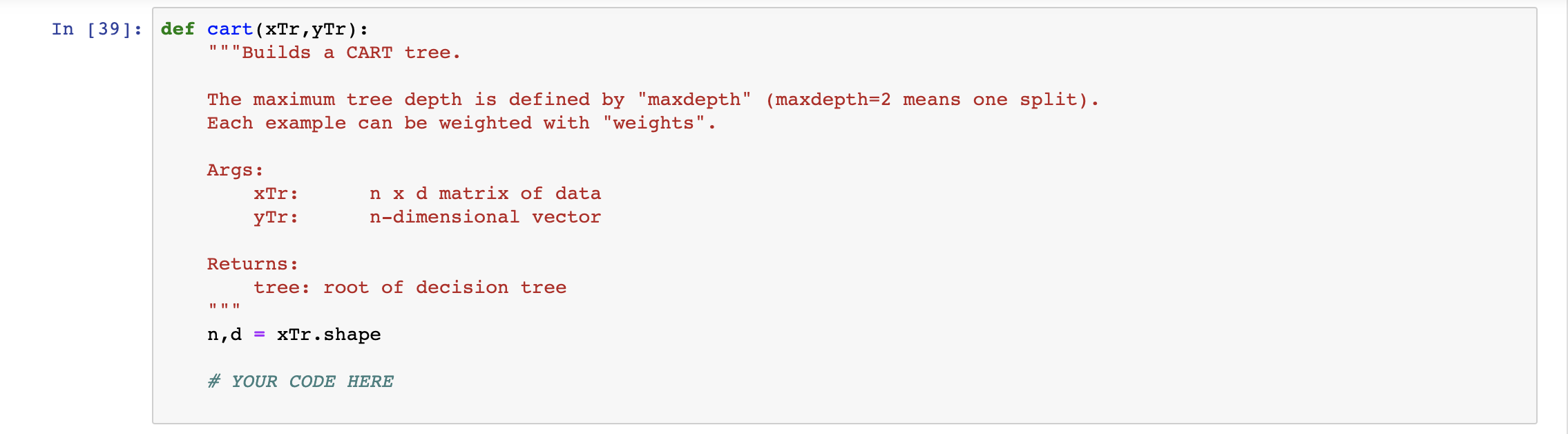

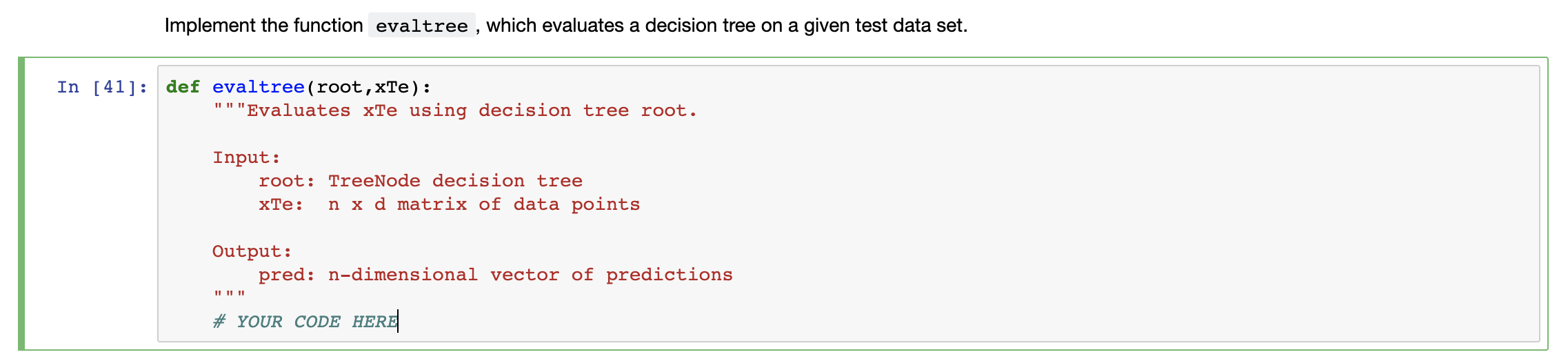

Tree Structure We've provided a tree structure for you with distinct leaves and nodes. Leaves have two fields, parent (another node) and prediction (a numerical value). Nodes have six fields: 1. left: node describing left subtree 2. right: node describing right subtree 3. feature: index of feature to cut 4. cut: cutoff value c (c: right) 5. prediction: prediction at this node This should be the average of the labels at this node. In [23]: class TreeNode(object): Tree class. (You don't need to add any methods or fields here but feel free to if you like. The tests will only reference the fields defined in the constructor below, so be sure to set these correctly.) def _init__(self, left, right, feature, cut, prediction): self.left = left self.right = right self. feature = feature self.cut = cut self.prediction - prediction The following cell contains some examples of trees. In [24]: # The following is a tree that predicts everything as zero ==> prediction 0 # In this case, it has no left or right children (it is a leaf node) ==> left = None, right = None # The tree also do not split at any feature at any value ==> feature = None, cut = None, root = TreeNode (None, None, None, None, 0) # The following that a tree with depth 2 # or a tree with one split # The tree will return a prediction of 1 if an example falls under the left subtree # Otherwise it will return a prediction of 2 # To start, first create two leaf node left_leaf = TreeNode (None, None, None, None, 1) right_leaf = TreeNode (None, None, None, None, 2) # Now create the parent or the root # Suppose we split at feature O and cut of 1 # and the average prediction is 1.5 root2 = TreeNode (left_leaf, right_leaf, 0, 1 , 1.5) # Now root2 is the tree we desired In [39]: def cart(xTr, yTr): ""Builds a CART tree. The maximum tree depth is defined by "maxdepth" (maxdepth=2 means one split). Each example can be weighted with "weights". Args: xTr: yTr: n x d matrix of data n-dimensional vector Returns: tree: root of decision tree n,d xTr.shape # YOUR CODE HERE Implement the function evaltree , which evaluates a decision tree on a given test data set. In [41]: def evaltree (root, xTe): """Evaluates xTe using decision tree root. Input: root: TreeNode decision tree xTe: n x d matrix of data points Output: pred: n-dimensional vector of predictions II II II # YOUR CODE HERE

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts