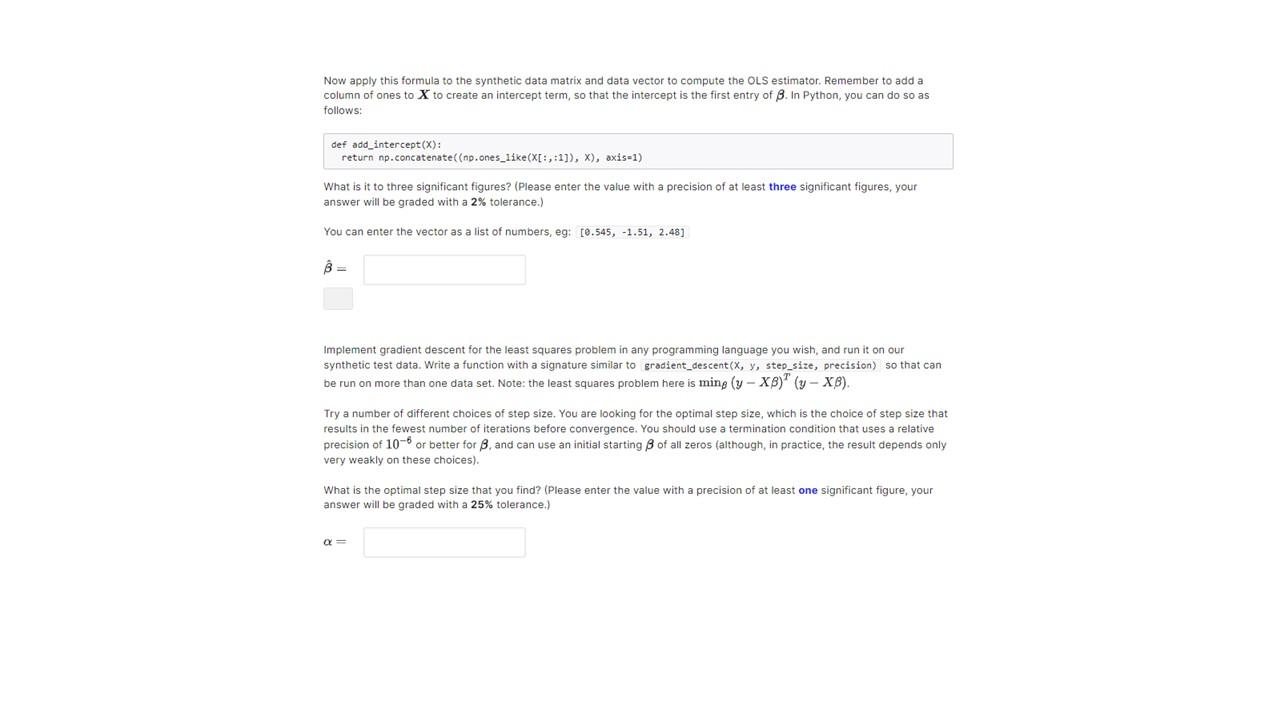

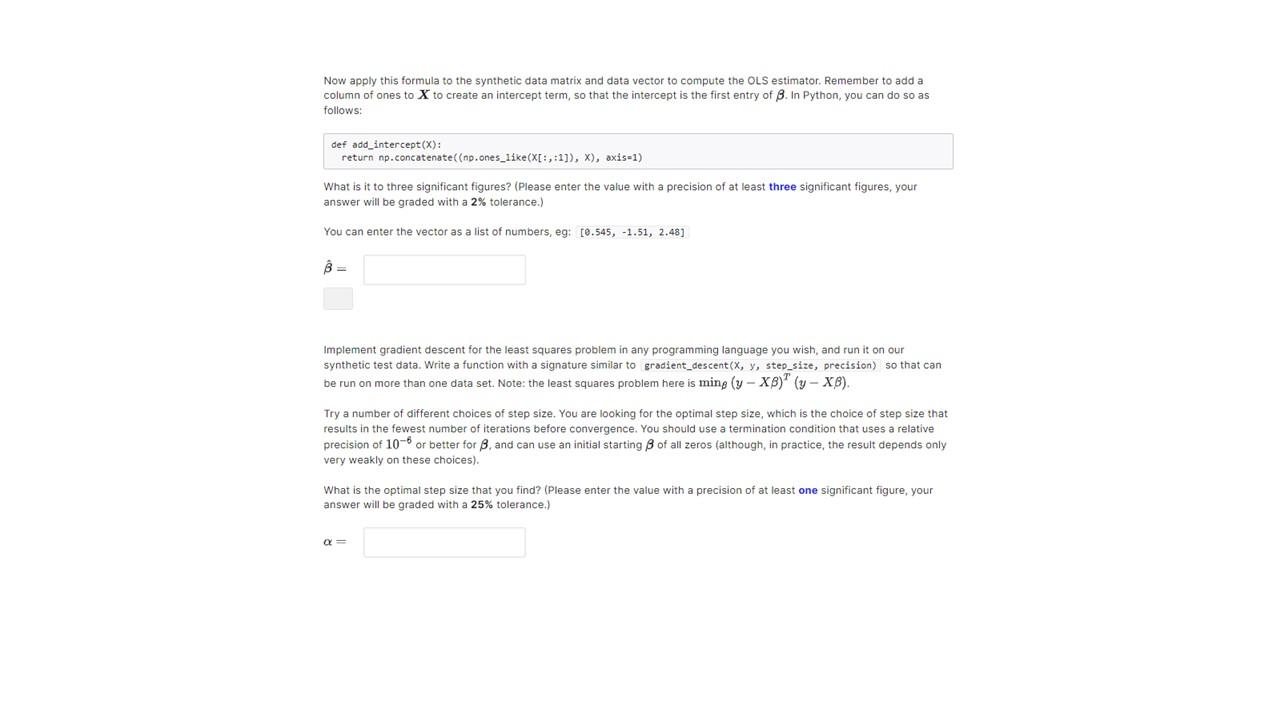

Now apply this formula to the synthetic data matrix and data vector to compute the OLS estimator. Remember to add a column of ones to $X$ to create an intercept term, so that the intercept is the first entry of $\hat{\beta}$. In Python, you can do so as follows: `def add_intercept(x): return np.concatenate((np.ones_like(x[:, :1]), x), axis=1)`

What is $\hat{\beta}$ to three significant figures? (Please enter the value with a precision of at least three significant figures; your answer will be graded with a 2% tolerance.) You can enter the vector as a list of numbers, e.g. `[0.545,1.51,2.48]`.

Implement gradient descent for the least squares problem in any programming language you wish, and run it on our synthetic test data. Write a function with a signature similar to `gradient_descent(x, y, step_size, precision)` so that it can be run on more than one data set. Note: the least squares problem here is $\min_{\beta} (y - X\beta)^T (y - X\beta)$.

Try a number of different choices of step size. You are looking for the optimal step size, which is the choice of step size that results in the fewest iterations before convergence. You should use a termination condition that uses a relative precision of $10^{-6}$ or better for $\beta$, and can use an initial starting $\beta$ of all zeros (although, in practice, the result depends only very weakly on these choices). What is the optimal step size that you find? (Please enter the value with a precision of at least one significant figure; your answer will be graded with a 25% tolerance.)
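As a starting point, the two tasks above could be sketched in Python roughly as follows. This is a minimal sketch under stated assumptions, not a reference solution: the course's synthetic data set is not reproduced here, so the data below is hypothetical, generated from made-up coefficients `true_beta`. The helper `ols`, the `max_iter` safeguard, and the particular stopping test (update norm relative to the norm of the current iterate) are also assumptions; other relative-precision tests are equally reasonable.

```python
import numpy as np


def add_intercept(x):
    """Prepend a column of ones so the intercept is the first coefficient."""
    return np.concatenate((np.ones_like(x[:, :1]), x), axis=1)


def ols(x, y):
    """Closed-form least squares estimate: solve (X^T X) beta = X^T y."""
    return np.linalg.solve(x.T @ x, x.T @ y)


def gradient_descent(x, y, step_size, precision, max_iter=100_000):
    """Minimize (y - X beta)^T (y - X beta), starting from all zeros.

    Returns (beta, iterations). Stops once the size of the update is at most
    `precision` times the norm of the current iterate (an assumed stopping
    rule), or after `max_iter` iterations (an assumed safeguard).
    """
    beta = np.zeros(x.shape[1])
    for iteration in range(1, max_iter + 1):
        # Gradient of the squared-error objective with respect to beta.
        gradient = -2.0 * x.T @ (y - x @ beta)
        new_beta = beta - step_size * gradient
        change = np.linalg.norm(new_beta - beta)
        beta = new_beta
        if change <= precision * np.linalg.norm(beta):
            return beta, iteration
    return beta, max_iter


# Hypothetical data standing in for the course's synthetic data set.
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 2))
true_beta = np.array([0.5, 1.5, 2.5])  # made-up coefficients, intercept first
x = add_intercept(features)
y = x @ true_beta + 0.1 * rng.normal(size=200)

beta_ols = ols(x, y)
beta_gd, n_iter = gradient_descent(x, y, step_size=1e-3, precision=1e-6)
print("OLS estimate:             ", beta_ols)
print("Gradient descent estimate:", beta_gd, "after", n_iter, "iterations")

# Scan a few candidate step sizes and report the iteration count for each.
for step in (1e-4, 5e-4, 1e-3, 2e-3):
    _, iterations = gradient_descent(x, y, step, 1e-6, max_iter=10_000)
    print(f"step_size={step:g}: {iterations} iterations")
```

Because the objective here is not divided by the number of samples, the gradient grows with the size of the data set, so the range of stable step sizes shrinks as more rows are added. A step size that is too large makes the iterates diverge, in which case the loop simply runs until `max_iter`.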