Question: Operating system programming assignment

![std::random_device rd; std::mt19937 gen(rd()); std::uniform_int_distribution dis(48, 122); char *art = new char[ALEN+3];](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f4f1b0ba3e8_68866f4f1b058b81.jpg)

![for (int i=0; i art[i] = char(dis(gen)); art[ALEN] = 0; return art;](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f4f1b177497_68866f4f1b0e692a.jpg)

generator.cpp:

#include <random>

static const int ALEN=50;

char* str_generator(void){

std::random_device rd;

std::mt19937 gen(rd());

std::uniform_int_distribution<int> dis(48, 122);

char *art = new char[ALEN+3];

for (int i=0; i<ALEN; i++) art[i] = char(dis(gen));

art[ALEN] = 0;

return art;

}
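A minimal sketch of how the helper might be called. The generator is repeated here only so the example compiles on its own; in the assignment it would stay in generator.cpp and the caller would just declare the prototype. `grab_and_release` is a hypothetical name. The point it illustrates is ownership: the array comes from `new[]`, so whoever takes the article is responsible for `delete[]`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <random>

static const int ALEN = 50;

// Copy of the provided generator, repeated only so this sketch compiles on
// its own. In the assignment it stays in generator.cpp and the caller just
// declares the prototype: char* str_generator(void);
char* str_generator(void) {
    std::random_device rd;
    std::mt19937 gen(rd());
    std::uniform_int_distribution<int> dis(48, 122);  // '0' .. 'z'
    char* art = new char[ALEN + 3];
    for (int i = 0; i < ALEN; i++)
        art[i] = char(dis(gen));
    art[ALEN] = 0;  // terminator, so the result is a C string of length ALEN
    return art;
}

// Hypothetical helper: grab one article, inspect it, then release it.
std::size_t grab_and_release() {
    char* art = str_generator();
    std::size_t len = std::strlen(art);
    for (std::size_t i = 0; i < len; i++)
        assert(art[i] >= 48 && art[i] <= 122);  // every char is in the generator's range
    delete[] art;  // allocated with new[] inside str_generator
    return len;
}
```

Because every generated character is at least 48, the string never contains an early terminator, so its length is always ALEN.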

Write a program in C++ that will be tested on a Linux server.

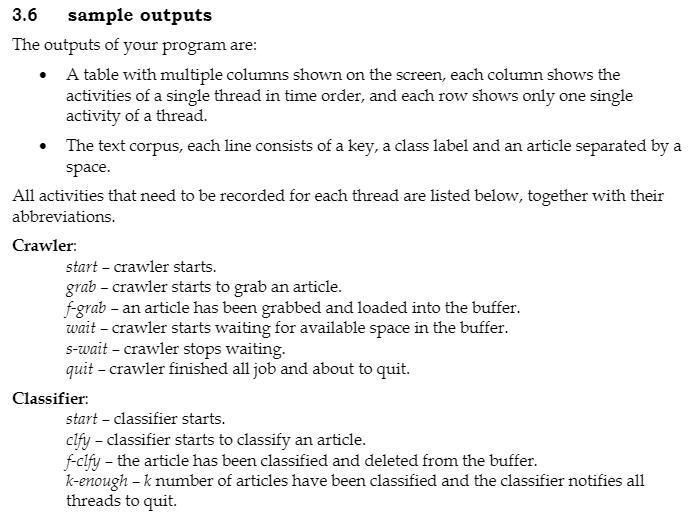

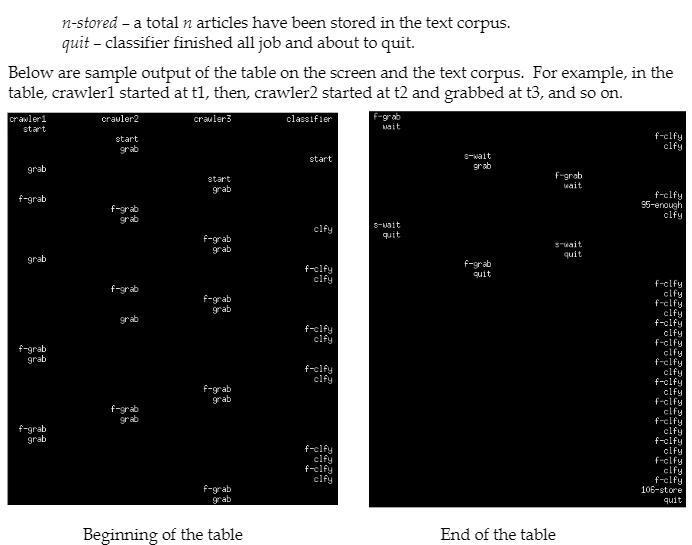

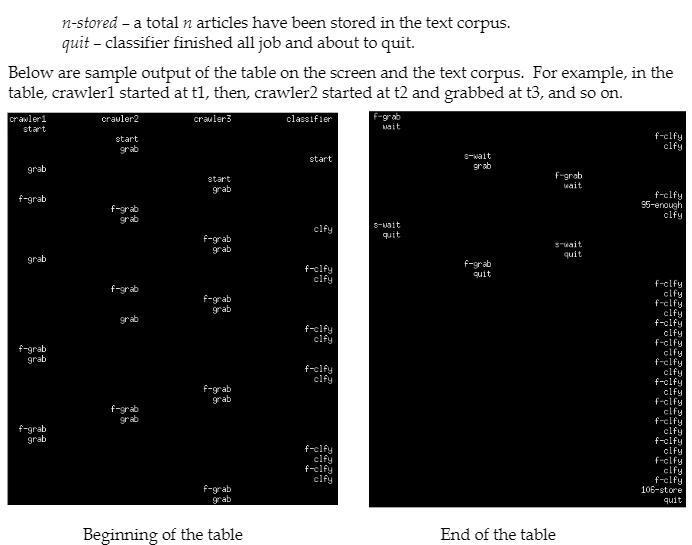

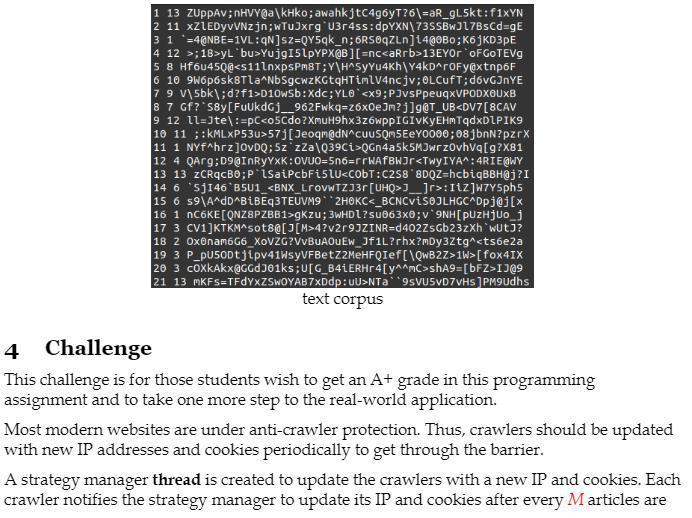

1 Goals

The purpose of this assignment is to help you:

- get familiar with multi-threaded programming using pthread
- get familiar with mutual exclusion using mutexes
- get familiar with synchronization using semaphores

2 Background

Sentiment analysis, a powerful technique based on natural language processing, has a wide range of applications, including consumer review analysis, recommender systems, political campaigning, stock speculation, etc. A sentiment analysis model requires a large text corpus, which consists of classified articles grabbed from the internet using web crawlers. In the simplest scenario, a text corpus can be built by two components: a web crawler and a classifier. The crawler browses through web pages and grabs articles from websites. The grabbed articles are stored in a buffer, from which the classifier takes articles and classifies them. Given the complexity of modern websites, it usually takes a long time for a crawler to locate and grab an article from a web page, so a single crawler is usually too slow for the classifier. Thus, multiple crawlers are a better choice.

3 Components and Requirements

You are required to design and implement three crawlers, a buffer and a classifier in C/C++ on Linux (other languages are not allowed). Mutual exclusion and synchronization must be done with mutex and semaphore provided in libraries and

3.1 crawler

Each crawler thread is created to grab articles from websites and load them into the buffer. It keeps grabbing and loading, with each grab-and-load job taking time interval_A, until the buffer is full. It then waits until the classifier deletes an article from the buffer.

3.2 buffer

The buffer is a first-in-first-out (FIFO) queue. It stores the articles grabbed by the crawlers temporarily, until they are taken by the classifier. It can store up to 12 articles at a time. You need to implement your own queue.
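One possible shape for such a queue, as a sketch rather than a reference solution: a fixed array used as a ring buffer, two POSIX counting semaphores tracking free and occupied slots, and one pthread mutex protecting the indices. The names `ArticleQueue`, `queue_init`, `queue_push`, `queue_pop` and `queue_destroy` are hypothetical, and the sketch simplifies the assignment: it removes the head on pop instead of copying it first, and it does not log any activities.

```cpp
#include <cassert>
#include <pthread.h>
#include <semaphore.h>

static const int CAPACITY = 12;  // the buffer holds at most 12 articles

// Hypothetical ring buffer of article pointers (no STL containers).
struct ArticleQueue {
    char* slots[CAPACITY];
    int head;               // index of the oldest article
    int tail;               // index of the next free slot
    sem_t empty_slots;      // counts free slots, starts at CAPACITY
    sem_t full_slots;       // counts stored articles, starts at 0
    pthread_mutex_t lock;   // protects slots, head and tail
};

void queue_init(ArticleQueue* q) {
    q->head = 0;
    q->tail = 0;
    sem_init(&q->empty_slots, 0, CAPACITY);
    sem_init(&q->full_slots, 0, 0);
    pthread_mutex_init(&q->lock, nullptr);
}

// Called by a crawler: blocks while the buffer is full.
void queue_push(ArticleQueue* q, char* article) {
    sem_wait(&q->empty_slots);          // wait for a free slot
    pthread_mutex_lock(&q->lock);
    q->slots[q->tail] = article;
    q->tail = (q->tail + 1) % CAPACITY;
    pthread_mutex_unlock(&q->lock);
    sem_post(&q->full_slots);           // one more article available
}

// Called by the classifier: blocks while the buffer is empty.
char* queue_pop(ArticleQueue* q) {
    sem_wait(&q->full_slots);           // wait for an article
    pthread_mutex_lock(&q->lock);
    char* article = q->slots[q->head];
    q->head = (q->head + 1) % CAPACITY;
    pthread_mutex_unlock(&q->lock);
    sem_post(&q->empty_slots);          // one more free slot
    return article;
}

void queue_destroy(ArticleQueue* q) {
    sem_destroy(&q->empty_slots);
    sem_destroy(&q->full_slots);
    pthread_mutex_destroy(&q->lock);
}
```

A crawler that has to record a `wait` activity cannot simply block in `sem_wait`; one option is to try `sem_trywait` first and fall back to `sem_wait` only after logging. On older toolchains the program may need to be built with `g++ -pthread`.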
You are not allowed to use the standard C++ library (e.g., queue or any other container provided by the Standard Template Library) or third-party libraries.

3.3 classifier

A classifier thread is created to classify the articles grabbed by the crawlers in FIFO order. The procedure has two steps:

1. Pre-processing: the classifier makes a copy of the article at the head of the buffer, changes all uppercase letters ('A'-'Z') to lowercase letters ('a'-'z') and deletes any symbol that is not a letter.
2. Classification: the classifier classifies the article into one of the 13 classes based on the first letter, x, of the processed article as follows: Class label = int(x - 'a') % 13 + 1

Next, an auto-increasing key starting from 1 is given to the classified article (so the keys of the classified articles are 1, 2, 3, ...). Finally, the key, the class label and the original article are stored to the text corpus in a text file. Then the classifier deletes the classified article from the buffer. The whole procedure takes time interval_B.

3.4 termination

The articles are divided into 13 classes. Denote the number of articles in each class as C1, C2, ..., C13, and let p = min{C1, C2, ..., C13}. When p ≥ 5, the classifier notifies all crawlers to quit after finishing the current job at hand, and then the program terminates.

3.5 input arguments

Your program has to accept the following two arguments in input order:

- interval_A, interval_B: integer, unit: microsecond.

3.6 sample outputs

The outputs of your program are:

- A table with multiple columns shown on the screen. Each column shows the activities of a single thread in time order, and each row shows only one activity of one thread.
- The text corpus. Each line consists of a key, a class label and an article, separated by a space.

All activities that need to be recorded for each thread are listed below, together with their abbreviations.

Crawler:

- start: crawler starts.
- grab: crawler starts to grab an article.
- f-grab: an article has been grabbed and loaded into the buffer.
- wait: crawler starts waiting for available space in the buffer.
- s-wait: crawler stops waiting.
- quit: crawler finished all jobs and is about to quit.

Classifier:

- start: classifier starts.
- clfy: classifier starts to classify an article.
- f-clfy: the article has been classified and deleted from the buffer.
- k-enough: k articles have been classified and the classifier notifies all threads to quit.
- n-stored: a total of n articles have been stored in the text corpus.
- quit: classifier finished all jobs and is about to quit.

Below are sample outputs of the table on the screen and of the text corpus. For example, in the table, crawler1 started at t1, then crawler2 started at t2 and grabbed at t3, and so on.

[Sample table, shown as two excerpts captioned "Beginning of the table" and "End of the table": one column each for crawler1, crawler2, crawler3 and classifier, one activity per row. The beginning shows the start, grab, f-grab, wait, s-wait, clfy and f-clfy activities; the end shows the 95-enough entry, the crawlers' quit entries, the remaining clfy and f-clfy entries, the 106-store entry and the classifier's quit.]

Sample text corpus (excerpt as extracted; some lines are missing):

    1 13 ZUPPAV; NHVY@a|kHko;awahkjtc4g6yT?6\=aR_9L5kt:f1xYN
    2 11 XZLEDY VNzjn;WtuJxrg U3r4ss:dpYXN\73SSBWJ17BsCd=gE
    3 1 '=4@NBE=1VL:IN]sz=QY5qk_n; 6RSOZLn][4@0B0;K6jKD3PE
    4 12 >;18>yl bu>Yujg151pYPX@B][=nc13EYOroFGOTEVO
    5 8 Hf6u45Q@D10wsb:Xdc;YLO 57][Jeoqm@dNACUUSQM5EeY0000;08 jbnN?pzrX
    11 1 NYfahrz]OVDQ;5z' z2a\Q39Ci>QGn4a5k5MJwrzovhva[g?X81
    12 4 QArg; D9QINRyYXK:OVUO=5n6=rrWAFBWJr)_]r>:112]w7y5phs
    15 6 59\AndDBiBEq3TEUVM9 *2H0KC<_bcncvisojlhgc nc6ke>gkzu; 3WHD1?SU063x0;v9NH PUzHjuo_j
    17 3 CV1 ]KTKM^sot8@[J[M>4?v2r9JZINR=D4027sGb23zxh WUtJ?
    18 2 Oxonam666_XOVZG?VVBUAOUEw_Jf1L?rhx?mDy3Ztg^1W>[fox4IX
    20 3 coxkAkx@GGdJ01ks; U[G_B41ERHr4[ ymC>shA9=[bFZ>IJ@9
    21 13 MKFS=TFdYxZSWOYAB7XDdp: UU>NTa9SVUSVD VHS JPMoudhs

4 Challenge

This challenge is for students who wish to get an A+ grade in this programming assignment and to take one more step towards a real-world application. Most modern websites are under anti-crawler protection, so crawlers should be updated with new IP addresses and cookies periodically to get through the barrier. A strategy manager thread is created to update the crawlers with a new IP and cookies. Each crawler notifies the strategy manager to update its IP and cookies after every M articles grabbed. The update takes time interval_C. The input and extra output are listed below.

Your program has to accept the following arguments in input order:

- interval_A, interval_B, interval_C: integer, unit: microsecond.
- M: integer.

Crawler: two more activities have to be recorded:

- rest: crawler starts resting.
- s-rest: crawler stops resting.

Strategy-Manager:

- start: manager starts.
- get-crx: manager gets a notification from crawler x.
- up-crx: manager updated crawler x with a new IP and cookies.
- quit: manager finished all jobs and is about to quit.

5 Helper Program and Hint

5.1 generator.cpp

The function char* str_generator(void) is provided in the file generator.cpp. It returns a string (char array) of length 50. Use it by declaring a prototype in your code and compiling generator.cpp along with your source code.

5.2 hint

Multi-threading needs careful handling. A program that only looks correct may pass several tests at the beginning but fail in later ones. Testing your program multiple times is therefore a good idea, and testing it with different arguments is even better.

6 Marking Scheme

Your program will be tested on our CSLab Linux servers (cs3103-01, cs3103-02, cs3103-03).
You should describe clearly how to compile and run your program as comments in your source program file. If an executable file cannot be generated and run successfully on our Linux servers, the submission will be considered unsuccessful.

A. Design and use of multi-threading (15%)

- Thread-safe multithreaded design and correct use of thread-management functions
- Non-multithreaded implementation (0%)

B. Design and use of mutexes (15%)

- Complete, correct and non-excessive use of mutexes
- Useless/unnecessary use of mutexes (0%)

C. Design and use of semaphores (30%)

- Complete, correct and non-excessive use of semaphores
- Useless/unnecessary use of semaphores (0%)

D. Degree of concurrency (15%)

- A design with higher concurrency is preferable to one with lower concurrency.
- An example of lower concurrency: only one thread can access the buffer at a time.
- An example of higher concurrency: various threads can access the buffer but work on different articles at a time.
- No concurrency (0%)

E. Program correctness (15%)

- Complete and correct implementation of other features, including:
  - correct logic and coding of thread functions
  - correct coding of the queue and related operations
  - passing parameters to the program on the command line
  - program output conforming to the format of the sample output
  - successful program termination
- Failing to pass the g++ compiler on our Linux servers to generate a runnable executable file (0%)

F. Programming style and documentation (10%)

- Good programming style
- Clear comments in the program to describe the design and logic
- Unreadable program without any comments (0%)
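The pre-processing and labelling steps of section 3.3, and the minimum p used in section 3.4, can be sketched as plain functions that the classifier thread would call. This is an illustration under assumptions, not the expected solution: the names `preprocess`, `class_label` and `min_class_count` are hypothetical, and returning 0 for an article with no letters at all is a choice made here, since the assignment text does not say what to do in that case.

```cpp
#include <cassert>
#include <cstring>

static const int NUM_CLASSES = 13;

// Writes the lowercase, letters-only version of `article` into `out`,
// which must have room for strlen(article) + 1 chars. The original
// article is left untouched. Returns the processed length.
int preprocess(const char* article, char* out) {
    int n = 0;
    for (const char* p = article; *p != '\0'; ++p) {
        char c = *p;
        if (c >= 'A' && c <= 'Z')
            c = char(c - 'A' + 'a');   // uppercase -> lowercase
        if (c >= 'a' && c <= 'z')
            out[n++] = c;              // keep letters, drop everything else
    }
    out[n] = '\0';
    return n;
}

// Class label = int(x - 'a') % 13 + 1, where x is the first processed letter.
// Assumption: returns 0 when the processed article is empty.
int class_label(const char* processed) {
    if (processed[0] == '\0')
        return 0;
    return int(processed[0] - 'a') % NUM_CLASSES + 1;
}

// p = min{C1, ..., C13}: the smallest per-class article count.
int min_class_count(const int counts[NUM_CLASSES]) {
    int p = counts[0];
    for (int i = 1; i < NUM_CLASSES; i++)
        if (counts[i] < p)
            p = counts[i];
    return p;
}
```

Checked against the sample corpus: an article starting with `ZUPPAV` has first letter `z`, so 25 % 13 + 1 = 13, and one starting with `'=4@NBE` has first letter `n`, so 13 % 13 + 1 = 1, matching the labels in the sample lines. The classifier would compare `min_class_count` with the termination threshold after storing each article.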
