Question

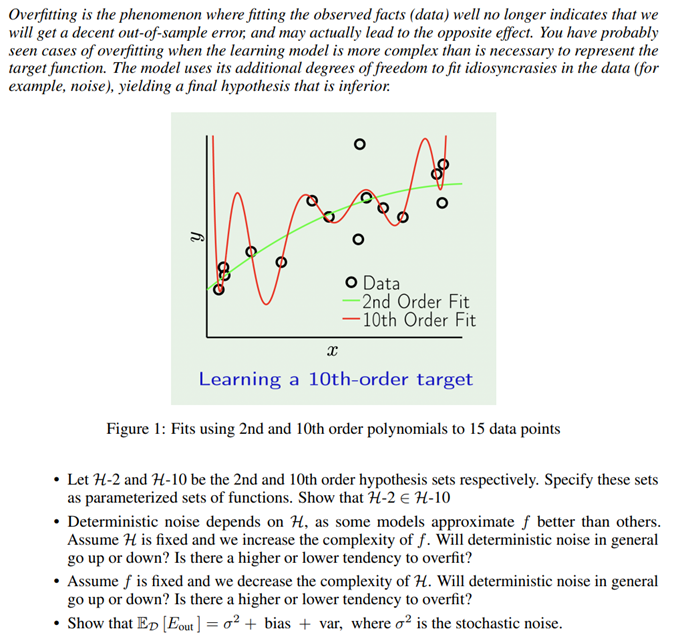

Overfitting is the phenomenon where fitting the observed facts (data) well no longer indicates that we will get a decent out-of-sample error, and may actually lead to the opposite effect. You have probably seen cases of overfitting when the learning model is more complex than is necessary to represent the target function. The model uses its additional degrees of freedom to fit idiosyncrasies in the data (for example, noise), yielding a final hypothesis that is inferior.

Figure 1: Fits using 2nd and 10th order polynomials to 15 data points (learning a 10th-order target).

- Let $\mathcal{H}_2$ and $\mathcal{H}_{10}$ be the 2nd and 10th order hypothesis sets respectively. Specify these sets as parameterized sets of functions. Show that $\mathcal{H}_2 \subset \mathcal{H}_{10}$.
- Deterministic noise depends on $\mathcal{H}$, as some models approximate $f$ better than others. Assume $\mathcal{H}$ is fixed and we increase the complexity of $f$. Will deterministic noise in general go up or down? Is there a higher or lower tendency to overfit?
- Assume $f$ is fixed and we decrease the complexity of $\mathcal{H}$. Will deterministic noise in general go up or down? Is there a higher or lower tendency to overfit?
- Show that $\mathbb{E}_{\mathcal{D}}[E_{\text{out}}] = \sigma^2 + \text{bias} + \text{var}$, where $\sigma^2$ is the stochastic noise.
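The setup in the question can be explored numerically. The following is a minimal sketch, not a solution to the exercise: it fits 2nd and 10th order polynomials to 15 noisy points drawn from a 10th-order target and estimates bias, variance, and $E_{\text{out}}$ by Monte Carlo, so the decomposition in the last bullet can be checked empirically. The target coefficients, the noise level `sigma=0.5`, the input range $[-1, 1]$, the number of simulated data sets, and the function name `simulate` are all assumptions chosen for illustration. The Legendre basis is used only for numerical conditioning; it spans the same hypothesis sets as ordinary monomials.

```python
import numpy as np
from numpy.polynomial import legendre as L


def simulate(degree, n_points=15, sigma=0.5, n_datasets=2000, seed=0):
    """Monte Carlo estimates of (bias, var, E_out) for a polynomial fit.

    All defaults except n_points=15 are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)

    # Hypothetical 10th-order target: fixed random Legendre coefficients.
    target_coef = np.random.default_rng(42).standard_normal(11)

    # Dense grid on [-1, 1] used to approximate expectations over x.
    x_test = np.linspace(-1.0, 1.0, 201)
    f_test = L.legval(x_test, target_coef)

    preds = np.empty((n_datasets, x_test.size))
    for i in range(n_datasets):
        # One data set D: 15 inputs with noisy labels y = f(x) + eps.
        x = rng.uniform(-1.0, 1.0, n_points)
        y = L.legval(x, target_coef) + sigma * rng.standard_normal(n_points)
        # Least-squares fit within the hypothesis set of the given degree.
        coef = L.legfit(x, y, degree)
        preds[i] = L.legval(x_test, coef)

    # Average hypothesis g_bar(x), then bias and variance averaged over x.
    g_bar = preds.mean(axis=0)
    bias = np.mean((g_bar - f_test) ** 2)
    var = np.mean(preds.var(axis=0))

    # E_out is measured against fresh noisy labels, so it includes sigma^2.
    noise = sigma * rng.standard_normal(preds.shape)
    e_out = np.mean((preds - (f_test + noise)) ** 2)
    return bias, var, e_out


if __name__ == "__main__":
    for degree in (2, 10):
        bias, var, e_out = simulate(degree)
        print(f"degree {degree}: bias={bias:.3g} var={var:.3g} "
              f"E_out={e_out:.3g} sigma^2+bias+var={0.25 + bias + var:.3g}")
```

Under these assumptions, the last two printed values should agree closely for each degree, which is what the decomposition predicts. The individual bias and variance estimates are themselves noisy, especially for the 10th-order fit, whose predictions can vary wildly between data sets.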
