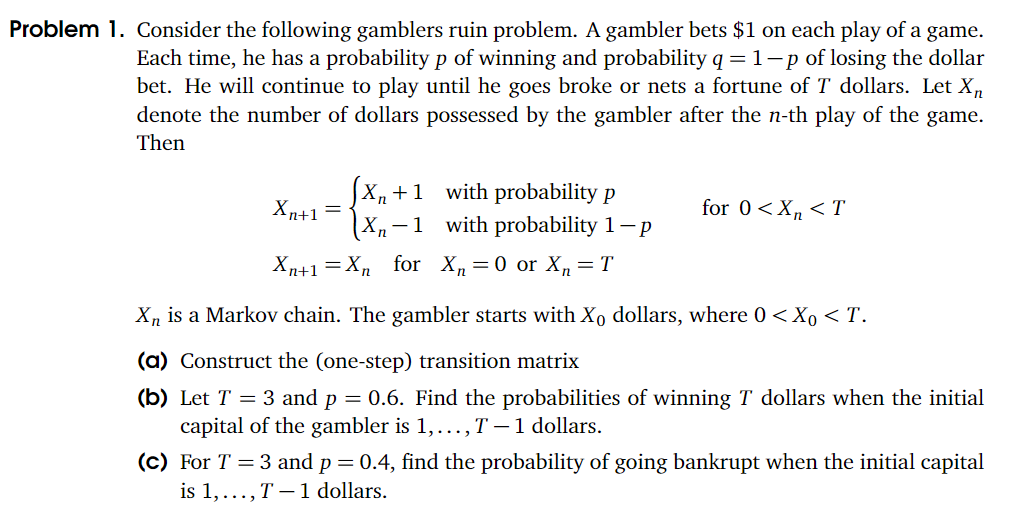

Problem 1. Consider the following gambler's ruin problem. A gambler bets $1 on each play of a game. Each time, he has a probability $p$ of winning and probability $q = 1 - p$ of losing the dollar bet. He will continue to play until he goes broke or nets a fortune of $T$ dollars. Let $X_n$ denote the number of dollars possessed by the gambler after the $n$-th play of the game. Then

$$
X_{n+1} =
\begin{cases}
X_n + 1 & \text{with probability } p, \text{ for } 0 < X_n < T,\\
X_n - 1 & \text{with probability } 1 - p, \text{ for } 0 < X_n < T,\\
X_n & \text{for } X_n = 0 \text{ or } X_n = T,
\end{cases}
$$

so $X_n$ is a Markov chain. The gambler starts with $X_0$ dollars, where $0 < X_0 < T$.

(a) Construct the (one-step) transition matrix.

(b) Let $T = 3$ and $p = 0.6$. Find the probabilities of winning $T$ dollars when the initial capital of the gambler is $1, \dots, T - 1$ dollars.

(c) For $T = 3$ and $p = 0.4$, find the probability of going bankrupt when the initial capital is $1, \dots, T - 1$ dollars.
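For part (a), one way to write the one-step transition matrix is sketched below, with rows and columns indexed by the states $0, 1, \dots, T$. This is a worked sketch rather than an official answer; it follows directly from the dynamics above, where states $0$ and $T$ are absorbing because play stops there.

$$
P_{ij} = \Pr(X_{n+1} = j \mid X_n = i) =
\begin{cases}
1 & \text{if } i = j = 0 \text{ or } i = j = T,\\
p & \text{if } 0 < i < T \text{ and } j = i + 1,\\
1 - p & \text{if } 0 < i < T \text{ and } j = i - 1,\\
0 & \text{otherwise.}
\end{cases}
$$

For example, with $T = 3$ (states $0, 1, 2, 3$):

$$
P = \begin{pmatrix}
1 & 0 & 0 & 0\\
1 - p & 0 & p & 0\\
0 & 1 - p & 0 & p\\
0 & 0 & 0 & 1
\end{pmatrix}.
$$

Each row sums to 1, and only the interior rows $1, \dots, T-1$ have off-diagonal entries.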

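For parts (b) and (c), a minimal Python sketch, assuming first-step analysis of the absorbing chain is the intended method: the probability $h_i$ of being absorbed at a given state satisfies $h_i = \sum_j P_{ij} h_j$, which for the transient states reduces to the linear system $(I - Q)h = r$. The helper names `transition_matrix` and `absorption_probs` are hypothetical, introduced only for illustration. Exact `Fraction` arithmetic is used so the answers come out as rational numbers; pass `p` as a `Fraction` (not a float such as `0.6`) to keep it exact.

```python
from fractions import Fraction


def transition_matrix(T, p):
    """One-step transition matrix of the gambler's ruin chain on states 0..T."""
    p = Fraction(p)
    q = 1 - p
    P = [[Fraction(0)] * (T + 1) for _ in range(T + 1)]
    P[0][0] = Fraction(1)  # broke: absorbing
    P[T][T] = Fraction(1)  # reached the target fortune: absorbing
    for i in range(1, T):
        P[i][i + 1] = p  # win the $1 bet
        P[i][i - 1] = q  # lose the $1 bet
    return P


def absorption_probs(P, target):
    """Probability of eventually being absorbed at `target` (0 or T),
    for each starting state 1..T-1, via first-step analysis.

    Solves (I - Q) h = r exactly with Gauss-Jordan elimination, where Q is
    the transient-to-transient block of P and r_i = P[i][target].
    """
    n = len(P) - 2  # number of transient states 1..T-1
    A = []
    for i in range(1, n + 1):
        row = [(1 if i == j else 0) - P[i][j] for j in range(1, n + 1)]
        row.append(P[i][target])  # augmented right-hand side
        A.append(row)

    for col in range(n):
        # pick a row with a nonzero pivot (I - Q is invertible when 0 < p < 1)
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        lead = A[col][col]
        A[col] = [x / lead for x in A[col]]
        # eliminate this column from every other row
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]

    return {i + 1: A[i][n] for i in range(n)}


if __name__ == "__main__":
    # Part (b): T = 3, p = 0.6, probability of reaching T from capital 1 and 2
    print(absorption_probs(transition_matrix(3, Fraction(3, 5)), target=3))
    # Part (c): T = 3, p = 0.4, probability of ruin (reaching 0) from capital 1 and 2
    print(absorption_probs(transition_matrix(3, Fraction(2, 5)), target=0))
```

With these parameters the sketch gives winning probabilities $9/19 \approx 0.474$ and $15/19 \approx 0.789$ for initial capital 1 and 2 in part (b), and ruin probabilities $15/19$ and $9/19$ in part (c). As a cross-check, for $p \neq q$ the standard closed form for the probability of reaching $T$ from $i$ is $\bigl(1 - (q/p)^i\bigr) / \bigl(1 - (q/p)^T\bigr)$; with $q/p = 2/3$ and $T = 3$ this gives $9/19$ for $i = 1$. A general linear solve was chosen over the closed form so the same code also covers the $p = q$ case.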