Question

Please help solve this in Matlab

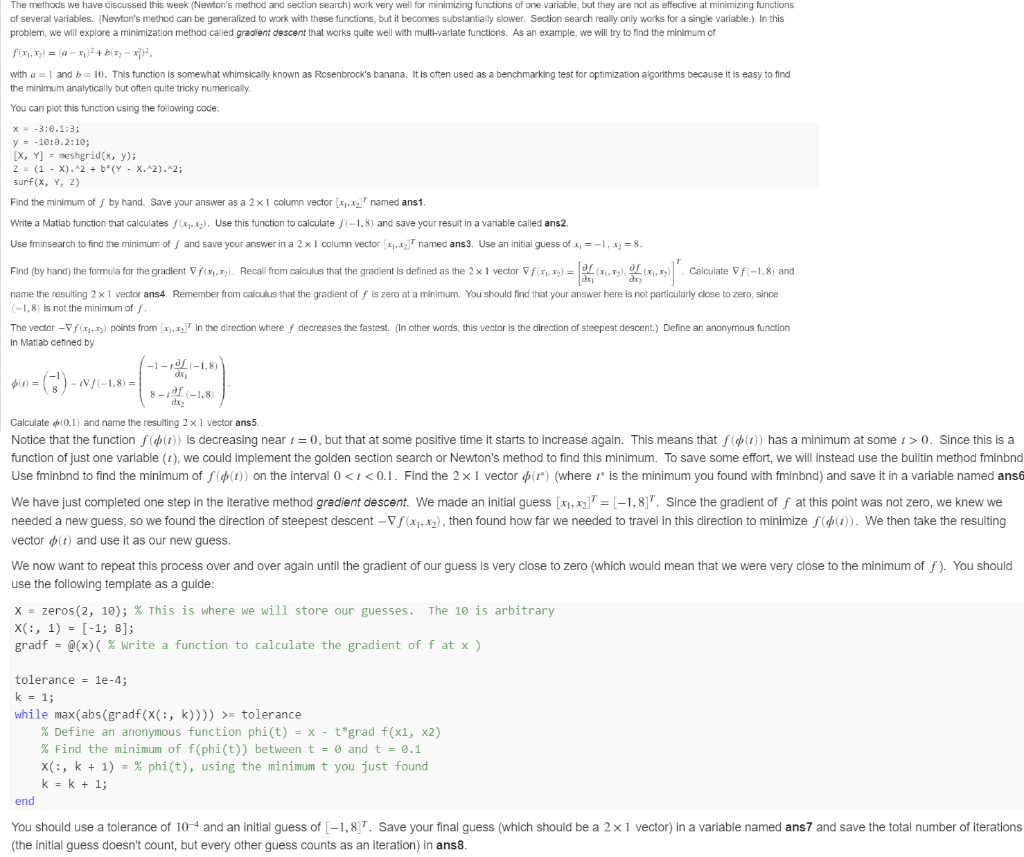

The methods discussed this week (Newton's method and golden section search) work very well for minimizing functions of one variable, but they are not as effective at minimizing functions of several variables. (Newton's method can be generalized to work with such functions, but it becomes substantially slower. Golden section search really only works for a single variable.) In this problem, we will explore a minimization method called gradient descent that works quite well with multivariate functions.

As an example, we will try to find the minimum of

$$f(x_1, x_2) = (a - x_1)^2 + b\,(x_2 - x_1^2)^2$$

with $a = 1$ and $b = 10$. This function is somewhat whimsically known as Rosenbrock's banana. It is often used as a benchmark for optimization algorithms because its minimum is easy to find analytically but often quite tricky to find numerically. You can plot this function using the following code (X and Y play the roles of $x_1$ and $x_2$):

    x = -3:0.1:3; y = -16:0.2:10; b = 10;
    [X, Y] = meshgrid(x, y);
    Z = (1 - X).^2 + b*(Y - X.^2).^2;
    surf(X, Y, Z)

Find the minimum of f by hand. Save your answer as a 2 x 1 column vector named ans1.

Write a Matlab function that calculates $f(x_1, x_2)$. Use this function to calculate $f(-1, 8)$ and save your result in a variable called ans2.

Use fminsearch to find the minimum of f and save your answer in a 2 x 1 column vector named ans3. Use an initial guess of $x_1 = -1$, $x_2 = 8$.

Find (by hand) the formula for the gradient $\nabla f(x_1, x_2)$. Recall from calculus that the gradient is defined as the 2 x 1 vector

$$\nabla f(x_1, x_2) = \begin{bmatrix} \dfrac{\partial f}{\partial x_1}(x_1, x_2) \\ \dfrac{\partial f}{\partial x_2}(x_1, x_2) \end{bmatrix}.$$

Calculate $\nabla f(-1, 8)$ and name the resulting 2 x 1 vector ans4. Remember from calculus that the gradient of f is zero at a minimum. You should find that your answer here is not particularly close to zero, since $(-1, 8)$ is not the minimum of f.

The vector $-\nabla f(x_1, x_2)$ points from $(x_1, x_2)$ in the direction in which f decreases the fastest. (In other words, this vector is the direction of steepest descent.) Define an anonymous function $\varphi(t)$ in Matlab by

$$\varphi(t) = \begin{bmatrix} -1 \\ 8 \end{bmatrix} - t\,\nabla f(-1, 8).$$

Calculate $\varphi(0.1)$ and name the resulting 2 x 1 vector ans5.
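One way to approach the first three parts is sketched below in MATLAB, assuming f is written as an anonymous function of a 2 x 1 vector x = [x1; x2] (the assignment asks for "a Matlab function"; a separate function file would work just as well). The hand-computed minimum follows because both squared terms are nonnegative and vanish only when $x_1 = a$ and $x_2 = x_1^2$.

```matlab
% Rosenbrock's banana with a = 1, b = 10, written for a 2x1 vector x = [x1; x2].
a = 1;
b = 10;
f = @(x) (a - x(1)).^2 + b*(x(2) - x(1).^2).^2;

% Both squared terms are >= 0 and vanish only when x1 = a and x2 = x1^2,
% so the minimum is at (a, a^2) = (1, 1), where f = 0.
ans1 = [a; a^2];

% Value at (-1, 8): (1 - (-1))^2 + 10*(8 - 1)^2 = 4 + 490
ans2 = f([-1; 8]);
disp(ans2)   % 494

% Derivative-free search started from the initial guess (-1, 8);
% fminsearch returns a column vector because the initial guess is one.
ans3 = fminsearch(f, [-1; 8]);
disp(ans3)
```

fminsearch is derivative-free (MATLAB documents it as a Nelder-Mead simplex search), so ans3 should agree with ans1 only to within the solver's default tolerances.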
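Differentiating term by term gives $\partial f/\partial x_1 = -2(a - x_1) - 4b\,x_1(x_2 - x_1^2)$ and $\partial f/\partial x_2 = 2b\,(x_2 - x_1^2)$. The following sketch (the names f, gradf, phi and fd are illustrative, not from the assignment) evaluates this gradient at (-1, 8), cross-checks it against central finite differences, and evaluates the steepest-descent function $\varphi(t)$ at $t = 0.1$.

```matlab
% Hand-derived gradient of f(x1, x2) = (a - x1)^2 + b*(x2 - x1^2)^2:
%   df/dx1 = -2*(a - x1) - 4*b*x1*(x2 - x1^2)
%   df/dx2 =  2*b*(x2 - x1^2)
a = 1;
b = 10;
f     = @(x) (a - x(1)).^2 + b*(x(2) - x(1).^2).^2;
gradf = @(x) [-2*(a - x(1)) - 4*b*x(1)*(x(2) - x(1)^2); ...
               2*b*(x(2) - x(1)^2)];

x0   = [-1; 8];
ans4 = gradf(x0);   % [-4 + 280; 20*7] = [276; 140]

% Sanity check: central finite differences should agree closely with ans4
h  = 1e-6;
fd = [(f(x0 + [h; 0]) - f(x0 - [h; 0])) / (2*h); ...
      (f(x0 + [0; h]) - f(x0 - [0; h])) / (2*h)];
disp(max(abs(fd - ans4)))   % should be tiny

% Steepest-descent ray from (-1, 8): phi(t) = [-1; 8] - t*grad f(-1, 8)
phi  = @(t) x0 - t*gradf(x0);
ans5 = phi(0.1);    % [-1 - 27.6; 8 - 14] = [-28.6; -6]
```

The point $\varphi(0.1)$ lies far from $(-1, 8)$, which is why the next step restricts the search to small $t$.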
Notice that the function $f(\varphi(t))$ is decreasing near $t = 0$, but that at some positive time it starts to increase again. This means that $f(\varphi(t))$ has a minimum at some $t^* > 0$. Since this is a function of just one variable ($t$), we could implement golden section search or Newton's method to find this minimum. To save some effort, we will instead use the built-in function fminbnd. Use fminbnd to find the minimum of $f(\varphi(t))$ on the interval $0 \le t \le 0.1$ …

    while … >= tolerance
        % Define an anonymous function phi(t) = x - t*grad f(x1, x2)
        % Find the minimum of f(phi(t)) between t = 0 and t = 0.1
        X(:, k + 1) = % phi(t), using the minimum t you just found
        k = k + 1;
    end

You should use a tolerance of $10^{-\dots}$ and an initial guess of $[-1; 8]$. Save your final guess (which should be a 2 x 1 vector) in a variable named ans7, and save the total number of iterations (the initial guess doesn't count, but every other guess counts as an iteration) in ans8.
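A single line search along the steepest-descent ray might look like the sketch below. It redefines f and gradf so that it runs on its own; tmin, xnew and g are hypothetical names, and since part of the assignment's instructions for this step is missing, the sketch only reports $t^*$ and the new point rather than saving them under a particular answer name. MATLAB documents fminbnd as combining golden section search with parabolic interpolation.

```matlab
% One line search along the steepest-descent ray from (-1, 8), using fminbnd.
a = 1;
b = 10;
f     = @(x) (a - x(1)).^2 + b*(x(2) - x(1).^2).^2;
gradf = @(x) [-2*(a - x(1)) - 4*b*x(1)*(x(2) - x(1)^2); ...
               2*b*(x(2) - x(1)^2)];

x0  = [-1; 8];
phi = @(t) x0 - t*gradf(x0);   % points on the ray x0 - t*grad f(x0)
g   = @(t) f(phi(t));          % f restricted to the ray: a function of t alone

% Minimize g on the bracket 0 <= t <= 0.1
tmin = fminbnd(g, 0, 0.1);
xnew = phi(tmin);

fprintf('t* = %.6f, f(phi(t*)) = %.6f, f(x0) = %g\n', tmin, g(tmin), f(x0));
```

Because $g'(0) = -\lVert \nabla f(-1, 8) \rVert^2 < 0$, the minimizer lies strictly inside the bracket here, so fminbnd has a genuine interior minimum to find.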
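The loop template can be filled in roughly as follows. This is a sketch under explicit assumptions: the loop header and the tolerance's exponent are unreadable in the assignment text, so the stopping test (infinity norm of the gradient below tol), the value tol = 1e-6 and the safety cap maxIter are all assumptions; substitute the assignment's actual tolerance and test.

```matlab
% Gradient (steepest) descent with an fminbnd line search at each step.
a = 1;
b = 10;
f     = @(x) (a - x(1)).^2 + b*(x(2) - x(1).^2).^2;
gradf = @(x) [-2*(a - x(1)) - 4*b*x(1)*(x(2) - x(1)^2); ...
               2*b*(x(2) - x(1)^2)];

tol     = 1e-6;    % ASSUMPTION: the assignment's exponent is unreadable
maxIter = 10000;   % hypothetical safety cap, not part of the assignment
X = [-1; 8];       % column k holds the k-th guess; column 1 is the initial guess
k = 1;
% ASSUMPTION: stop once the largest gradient component drops below tol
while norm(gradf(X(:, k)), Inf) >= tol && k <= maxIter
    xk  = X(:, k);
    gk  = gradf(xk);
    phi = @(t) xk - t*gk;                    % phi(t) = x - t*grad f(x1, x2)
    tk  = fminbnd(@(t) f(phi(t)), 0, 0.1);   % minimize f(phi(t)) for 0 <= t <= 0.1
    X(:, k + 1) = phi(tk);                   % next guess
    k = k + 1;
end

ans7 = X(:, k);   % final guess
ans8 = k - 1;     % number of guesses after the initial one
fprintf('%d iterations, final guess (%.6f, %.6f)\n', ans8, ans7(1), ans7(2));
```

Because each line search is restricted to $0 \le t \le 0.1$, a step can stop short of the exact minimizer along the ray whenever that minimizer lies beyond $t = 0.1$. The iteration count therefore depends on both the bracket and the stopping rule, so with the assumed tolerance it may differ from the value the assignment expects for ans8.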