Answered step by step

Verified Expert Solution

Question

1 Approved Answer

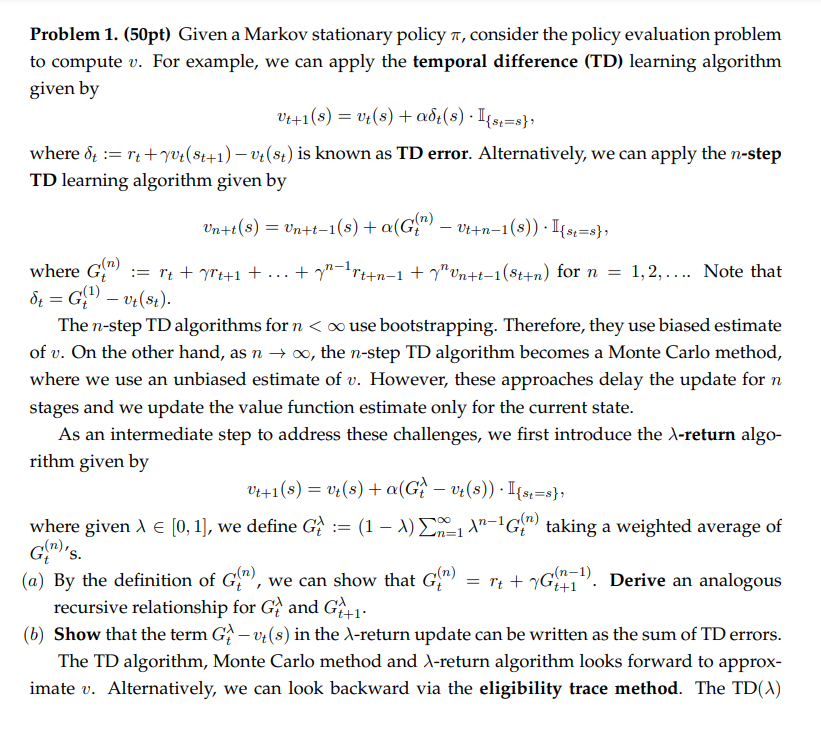

Problem 1 . ( 5 0 pt ) Given a Markov stationary policy , consider the policy evaluation problem to compute v . For example,

Problem pt Given a Markov stationary policy consider the policy evaluation problem

to compute For example, we can apply the temporal difference TD learning algorithm

given by

where : is known as TD error. Alternatively, we can apply the step

TD learning algorithm given by

where :dots for dots. Note that

The step TD algorithms for use bootstrapping. Therefore, they use biased estimate

of On the other hand, as the step TD algorithm becomes a Monte Carlo method,

where we use an unbiased estimate of However, these approaches delay the update for

stages and we update the value function estimate only for the current state.

As an intermediate step to address these challenges, we first introduce the return algo

rithm given by

where given we define : taking a weighted average of

s

a By the definition of we can show that Derive an analogous

recursive relationship for and

b Show that the term in the return update can be written as the sum of TD errors.

The TD algorithm, Monte Carlo method and return algorithm looks forward to approx

imate Alternatively, we can look backward via the eligibility trace method. TheTD

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started