
Question

1 Approved Answer

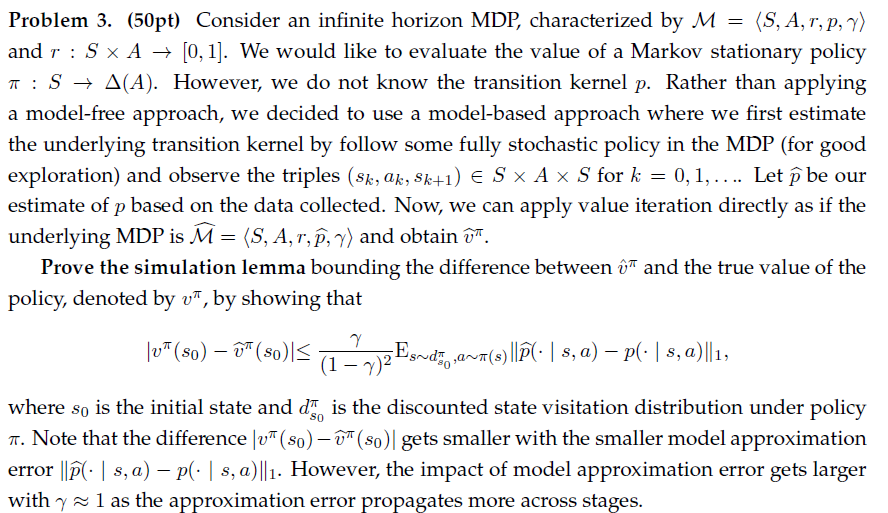

Problem 3. (50 pt) Consider an infinite-horizon MDP characterized by $M = (S, A, r, p, \gamma)$, where $r : S \times A \to [0, 1]$ is the reward function, $p$ is the transition kernel, and $\gamma \in (0, 1)$ is the discount factor. We would like to evaluate the value of a Markov stationary policy $\pi$. However, we do not know the transition kernel $p$. Rather than applying a model-free approach, we decided to use a model-based approach: we first estimate the underlying transition kernel by following some fully stochastic policy in the MDP for good exploration and observing the triples $(s_k, a_k, s_{k+1})$ for $k = 0, 1, \dots$. Let $\widehat{p}$ be our estimate of $p$ based on the data collected. Now we can apply value iteration directly, as if the underlying MDP were $\widehat{M} = (S, A, r, \widehat{p}, \gamma)$, and obtain $\widehat{v}^\pi$.
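The model-based pipeline in the paragraph above (explore, estimate $\widehat{p}$, then evaluate $\pi$ by value iteration on $\widehat{M}$) can be sketched in Python for a small tabular MDP. This is a minimal illustration under assumptions that are not part of the problem: states and actions are integers indexed from 0, the exploratory behavior policy is uniformly random, $\widehat{p}$ is the empirical-frequency (count-based) estimate with a uniform fallback for unvisited pairs, and the function names (`collect_triples`, `estimate_kernel`, `evaluate_policy`) and the toy instance are hypothetical.

```python
import numpy as np


def collect_triples(p, n_steps, rng, s0=0):
    """Roll out a uniformly random (hence fully stochastic) behavior policy in the
    true MDP and record the transition triples (s_k, a_k, s_{k+1})."""
    n_states, n_actions, _ = p.shape
    triples = []
    s = s0
    for _ in range(n_steps):
        a = int(rng.integers(n_actions))               # every action has probability 1/|A|
        s_next = int(rng.choice(n_states, p=p[s, a]))  # sample s_{k+1} ~ p(. | s_k, a_k)
        triples.append((s, a, s_next))
        s = s_next
    return triples


def estimate_kernel(triples, n_states, n_actions):
    """Count-based (empirical frequency) estimate of p(s' | s, a).
    Assumption: unvisited (s, a) pairs fall back to a uniform next-state row."""
    counts = np.zeros((n_states, n_actions, n_states))
    for s, a, s_next in triples:
        counts[s, a, s_next] += 1.0
    totals = counts.sum(axis=2, keepdims=True)         # visit counts N(s, a), shape (S, A, 1)
    p_hat = np.full_like(counts, 1.0 / n_states)
    visited = totals[..., 0] > 0                        # boolean mask, shape (S, A)
    p_hat[visited] = counts[visited] / totals[visited]
    return p_hat


def evaluate_policy(p, r, pi, gamma, tol=1e-10):
    """Value iteration for policy evaluation: v <- r_pi + gamma * P_pi v until convergence."""
    r_pi = (pi * r).sum(axis=1)                  # r_pi(s) = sum_a pi(a|s) r(s, a)
    p_pi = np.einsum("sa,sat->st", pi, p)        # P_pi(s, s') = sum_a pi(a|s) p(s'|s, a)
    v = np.zeros(r_pi.shape[0])
    while True:
        v_new = r_pi + gamma * p_pi @ v
        if np.max(np.abs(v_new - v)) < tol:
            return v_new
        v = v_new


# Hypothetical toy instance: random true kernel, rewards in [0, 1].
rng = np.random.default_rng(0)
S, A, gamma = 4, 2, 0.9
p = rng.dirichlet(np.ones(S), size=(S, A))      # true kernel, unknown to the learner
r = rng.uniform(0.0, 1.0, size=(S, A))
pi = rng.dirichlet(np.ones(A), size=S)          # policy to evaluate, pi[s, a] = pi(a|s)

p_hat = estimate_kernel(collect_triples(p, 20_000, rng), S, A)
v_hat = evaluate_policy(p_hat, r, pi, gamma)    # value computed in the estimated MDP
v_true = evaluate_policy(p, r, pi, gamma)       # reference value in the true MDP
print("max_s |v_hat(s) - v(s)| =", np.max(np.abs(v_hat - v_true)))
```

The uniform behavior policy is one simple way to give every action positive probability, which is what "fully stochastic" asks for; the evaluated policy `pi` is chosen separately, mirroring the problem's distinction between exploration and evaluation.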

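Prove the simulation lemma bounding the difference between $\widehat{v}^\pi$ and the true value of the policy, denoted by $v^\pi$, by showing that

$$
\left|\widehat{v}^\pi(s_0) - v^\pi(s_0)\right| \le \frac{\gamma}{(1-\gamma)^2}\, \mathbb{E}_{s \sim d^\pi_{s_0},\, a \sim \pi(\cdot \mid s)}\Big[\big\|\widehat{p}(\cdot \mid s, a) - p(\cdot \mid s, a)\big\|_1\Big],
$$

where $s_0$ is the initial state and $d^\pi_{s_0}$ is the discounted state visitation distribution under policy $\pi$.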
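A proof sketch of the simulation lemma follows, in matrix notation for a finite state space. The notation is introduced here and is not part of the original problem: $r^\pi(s) = \sum_a \pi(a \mid s)\, r(s,a)$, $P^\pi(s, s') = \sum_a \pi(a \mid s)\, p(s' \mid s, a)$, and $\widehat{P}^\pi$ is defined the same way with $\widehat{p}$. We assume rewards in $[0,1]$, so that $0 \le \widehat{v}^\pi \le 1/(1-\gamma)$, and take $d^\pi_{s_0}(s) = (1-\gamma)\sum_{t \ge 0}\gamma^t \Pr(s_t = s \mid s_0, \pi)$ under the true kernel $p$; the mirrored argument, with the roles of $p$ and $\widehat{p}$ swapped, gives the same bound with $d^\pi_{s_0}$ taken under $\widehat{p}$.

$$
\begin{aligned}
\widehat{v}^\pi - v^\pi
&= \gamma \widehat{P}^\pi \widehat{v}^\pi - \gamma P^\pi v^\pi
 = \gamma\big(\widehat{P}^\pi - P^\pi\big)\widehat{v}^\pi + \gamma P^\pi\big(\widehat{v}^\pi - v^\pi\big), \\
\widehat{v}^\pi - v^\pi
&= \gamma\big(I - \gamma P^\pi\big)^{-1}\big(\widehat{P}^\pi - P^\pi\big)\widehat{v}^\pi, \\
\widehat{v}^\pi(s_0) - v^\pi(s_0)
&= \frac{\gamma}{1-\gamma}\,\mathbb{E}_{s \sim d^\pi_{s_0}}\Big[\sum_{a}\pi(a \mid s)\sum_{s'}\big(\widehat{p}(s' \mid s,a) - p(s' \mid s,a)\big)\widehat{v}^\pi(s')\Big], \\
\big|\widehat{v}^\pi(s_0) - v^\pi(s_0)\big|
&\le \frac{\gamma}{1-\gamma}\,\|\widehat{v}^\pi\|_\infty\,\mathbb{E}_{s \sim d^\pi_{s_0},\, a \sim \pi(\cdot \mid s)}\big\|\widehat{p}(\cdot \mid s,a) - p(\cdot \mid s,a)\big\|_1
\le \frac{\gamma}{(1-\gamma)^2}\,\mathbb{E}_{s \sim d^\pi_{s_0},\, a \sim \pi(\cdot \mid s)}\big\|\widehat{p}(\cdot \mid s,a) - p(\cdot \mid s,a)\big\|_1 .
\end{aligned}
$$

The first line subtracts the Bellman equations $v^\pi = r^\pi + \gamma P^\pi v^\pi$ and $\widehat{v}^\pi = r^\pi + \gamma \widehat{P}^\pi \widehat{v}^\pi$ (the reward terms cancel because both models share $r$). The second rearranges, using that $I - \gamma P^\pi$ is invertible for $\gamma < 1$. The third uses $e_{s_0}^\top (I - \gamma P^\pi)^{-1} = \sum_{t \ge 0} \gamma^t e_{s_0}^\top (P^\pi)^t = (d^\pi_{s_0})^\top/(1-\gamma)$. The last applies Hölder's inequality and $\|\widehat{v}^\pi\|_\infty \le 1/(1-\gamma)$.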

Note that the difference $|\widehat{v}^\pi(s_0) - v^\pi(s_0)|$ gets smaller as the model approximation error $\|\widehat{p}(\cdot \mid s, a) - p(\cdot \mid s, a)\|_1$ gets smaller. However, the impact of the model approximation error grows as $\gamma \to 1$, since the error propagates across more stages.
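The bound and its dependence on $\gamma$ can be checked numerically on a small random MDP. The sketch below is illustrative only and rests on assumptions not in the problem: rewards lie in $[0,1]$, value iteration is replaced by an exact linear solve of the same fixed-point equation, $d^\pi_{s_0}$ is computed under the true kernel $p$, and a synthetic perturbation $\widehat{p} = (1-\varepsilon)p + \varepsilon q$ (with a random kernel $q$) stands in for an estimated model. The helper names are hypothetical.

```python
import numpy as np


def policy_kernel(p, pi):
    """P_pi(s, s') = sum_a pi(a|s) p(s'|s, a)."""
    return np.einsum("sa,sat->st", pi, p)


def exact_value(p, r, pi, gamma):
    """Solve v = r_pi + gamma * P_pi v directly (the fixed point value iteration converges to)."""
    r_pi = (pi * r).sum(axis=1)
    n = r_pi.shape[0]
    return np.linalg.solve(np.eye(n) - gamma * policy_kernel(p, pi), r_pi)


def visitation(p, pi, gamma, s0):
    """d_{s0}^pi = (1 - gamma) * e_{s0}^T (I - gamma P_pi)^{-1}, computed under kernel p."""
    n = p.shape[0]
    e = np.zeros(n)
    e[s0] = 1.0
    # Transposed system: (I - gamma P_pi)^T x = e_{s0}  <=>  x^T = e_{s0}^T (I - gamma P_pi)^{-1}
    return (1.0 - gamma) * np.linalg.solve((np.eye(n) - gamma * policy_kernel(p, pi)).T, e)


def simulation_lemma_check(p, p_hat, r, pi, gamma, s0):
    """Return (|v_hat(s0) - v(s0)|, right-hand side of the simulation-lemma bound)."""
    gap = abs(exact_value(p_hat, r, pi, gamma)[s0] - exact_value(p, r, pi, gamma)[s0])
    l1 = np.abs(p_hat - p).sum(axis=2)                      # ||p_hat(.|s,a) - p(.|s,a)||_1, shape (S, A)
    model_err = visitation(p, pi, gamma, s0) @ (pi * l1).sum(axis=1)  # E_{s~d, a~pi}[l1]
    bound = gamma / (1.0 - gamma) ** 2 * model_err
    return gap, bound


# Hypothetical toy instance; p_hat is a synthetic perturbation of p, not a real estimate.
rng = np.random.default_rng(1)
S, A, s0, eps = 5, 3, 0, 0.05
p = rng.dirichlet(np.ones(S), size=(S, A))
q = rng.dirichlet(np.ones(S), size=(S, A))
p_hat = (1.0 - eps) * p + eps * q        # valid kernel with ||p_hat - p||_1 = eps * ||q - p||_1
r = rng.uniform(0.0, 1.0, size=(S, A))
pi = rng.dirichlet(np.ones(A), size=S)   # stochastic policy pi(a|s), shape (S, A)

results = {}
for gamma in (0.5, 0.9, 0.99):
    gap, bound = simulation_lemma_check(p, p_hat, r, pi, gamma, s0)
    results[gamma] = (gap, bound)
    print(f"gamma={gamma}: |v_hat(s0) - v(s0)| = {gap:.5f}, bound = {bound:.5f}")
```

For a fixed model error, the prefactor $\gamma/(1-\gamma)^2$ grows without bound as $\gamma \to 1$, which is the error-propagation effect described in the note; the observed gap can sit well below the bound, since the lemma is a worst-case guarantee.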
