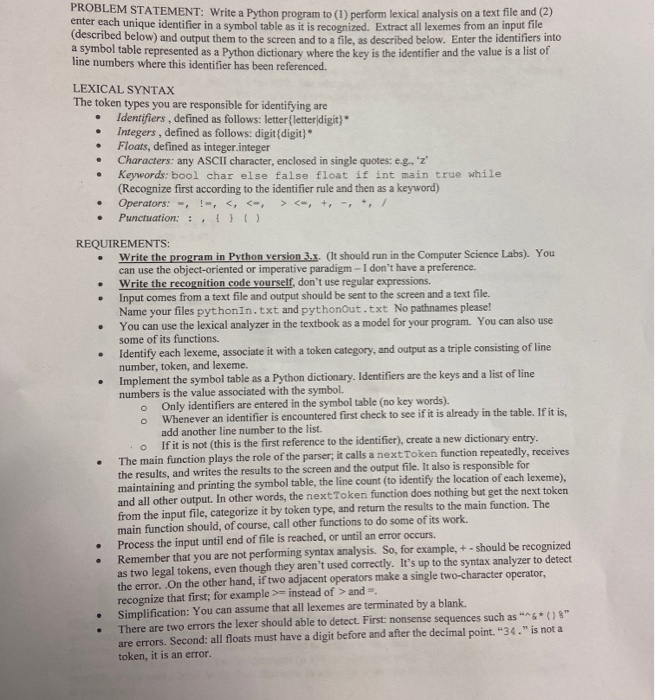

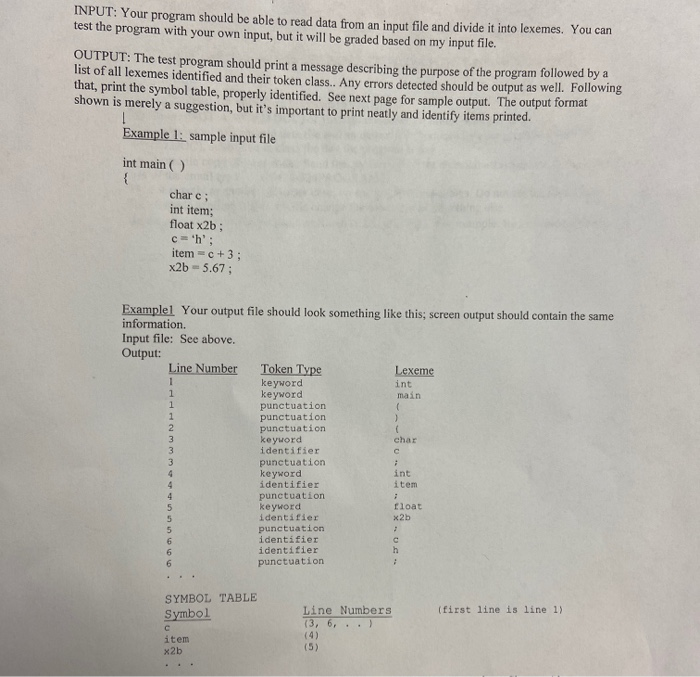

PROBLEM STATEMENT: Write a Python program to (1) perform lexical analysis on a text file and (2) enter each unique identifier in a symbol table as it is recognized. Extract all lexemes from an mo (described below) and output them to the screen and to a file, as described below. Enter the identifiers into a symbol table represented as a Python dictionary where the key is the identifier and the value is a list of line numbers where this identifier has been referenced. LEXICAL SYNTAX The token types you are responsible for identifying are Identifiers, defined as follows: letter{letter digit} * Integers, defined as follows: digit{digit) Floats, defined as integer.integer Characters: any ASCII character, enclosed in single quotes: e.g. "Z Keywords: bool char else false float if int main true while (Recognize first according to the identifier rule and then as a keyword) Operators: -, !-, C-, +, -, *, / Punctuation: : { } ( ) REQUIREMENTS: Write the program in Python version 3.x. (It should run in the Computer Science Labs). You can use the object-oriented or imperative paradigm - I don't have a preference. Write the recognition code vourself, don't use regular expressions. Input comes from a text file and output should be sent to the screen and a text file. Name your files python In.txt and pythonOut.txt No pathnames please! You can use the lexical analyzer in the textbook as a model for your program. You can also use some of its functions. Identify each lexeme, associate it with a token category, and output as a triple consisting of line number, token, and lexeme. Implement the symbol table as a Python dictionary. Identifiers are the keys and a list of line numbers is the value associated with the symbol o Only identifiers are entered in the symbol table (no key words) Whenever an identifier is encountered first check to see if it is already in the table. If it is. add another line number to the list. If it is not (this is the first reference to the identifier), create a new dictionary entry. The main function plays the role of the parser; it calls a nextToken function repeatedly, receives the results, and writes the results to the screen and the output file. It also is responsible for maintaining and printing the symbol table, the line count(to identify the location of each lexeme). and all other output. In other words, the nextToken function does nothing but get the next token from the input file, categorize it by token type, and return the results to the main function. The main function should, of course, call other functions to do some of its work. Process the input until end of file is reached, or until an error occurs. Remember that you are not performing syntax analysis. So, for example, + - should be recognized as two legal tokens, even though they aren't used correctly. It's up to the syntax analyzer to detect the error. On the other hand, if two adjacent operators make a single two-character operator, recognize that first, for example >instead of > and Simplification: You can assume that all lexemes are terminated by a blank. There are two errors the lexer should able to detect. First: nonsense sequences such as "A " are errors. Second: all floats must have a digit before and after the decimal point. "34." is not a token, it is an error. INPUT: Your program should be able to read data from an input file and divide it into lexemes. You can test the program with your own input, but it will be graded based on my input file. OUTPUT: The test program should print a message describing the purpose of the program followed by a list of all lexemes identified and their token class.. Any errors detected should be output as well. Following that, print the symbol table, properly identified. See next page for sample output. The output format shown is merely a suggestion, but it's important to print neatly and identify items printed Example 1: sample input file int main() chare; int item; float x2b; c='h'; item =c+3; X2b = 5.67; Examplel Your output file should look something like this; screen output should contain the same information. Input file: See above. Output: Line Number Token Type Lexeme keyword int keyword main punctuation punctuation punctuation keyword char identifier punctuation keyword int identifier item punctuation keyword float identifier x2b punctuation identifier identifier punctuation SYMBOL TABLE Symbol (first line is line 1) Line Numbers (3, 6, ..) item x2b (4) (5) PROBLEM STATEMENT: Write a Python program to (1) perform lexical analysis on a text file and (2) enter each unique identifier in a symbol table as it is recognized. Extract all lexemes from an mo (described below) and output them to the screen and to a file, as described below. Enter the identifiers into a symbol table represented as a Python dictionary where the key is the identifier and the value is a list of line numbers where this identifier has been referenced. LEXICAL SYNTAX The token types you are responsible for identifying are Identifiers, defined as follows: letter{letter digit} * Integers, defined as follows: digit{digit) Floats, defined as integer.integer Characters: any ASCII character, enclosed in single quotes: e.g. "Z Keywords: bool char else false float if int main true while (Recognize first according to the identifier rule and then as a keyword) Operators: -, !-, C-, +, -, *, / Punctuation: : { } ( ) REQUIREMENTS: Write the program in Python version 3.x. (It should run in the Computer Science Labs). You can use the object-oriented or imperative paradigm - I don't have a preference. Write the recognition code vourself, don't use regular expressions. Input comes from a text file and output should be sent to the screen and a text file. Name your files python In.txt and pythonOut.txt No pathnames please! You can use the lexical analyzer in the textbook as a model for your program. You can also use some of its functions. Identify each lexeme, associate it with a token category, and output as a triple consisting of line number, token, and lexeme. Implement the symbol table as a Python dictionary. Identifiers are the keys and a list of line numbers is the value associated with the symbol o Only identifiers are entered in the symbol table (no key words) Whenever an identifier is encountered first check to see if it is already in the table. If it is. add another line number to the list. If it is not (this is the first reference to the identifier), create a new dictionary entry. The main function plays the role of the parser; it calls a nextToken function repeatedly, receives the results, and writes the results to the screen and the output file. It also is responsible for maintaining and printing the symbol table, the line count(to identify the location of each lexeme). and all other output. In other words, the nextToken function does nothing but get the next token from the input file, categorize it by token type, and return the results to the main function. The main function should, of course, call other functions to do some of its work. Process the input until end of file is reached, or until an error occurs. Remember that you are not performing syntax analysis. So, for example, + - should be recognized as two legal tokens, even though they aren't used correctly. It's up to the syntax analyzer to detect the error. On the other hand, if two adjacent operators make a single two-character operator, recognize that first, for example >instead of > and Simplification: You can assume that all lexemes are terminated by a blank. There are two errors the lexer should able to detect. First: nonsense sequences such as "A " are errors. Second: all floats must have a digit before and after the decimal point. "34." is not a token, it is an error. INPUT: Your program should be able to read data from an input file and divide it into lexemes. You can test the program with your own input, but it will be graded based on my input file. OUTPUT: The test program should print a message describing the purpose of the program followed by a list of all lexemes identified and their token class.. Any errors detected should be output as well. Following that, print the symbol table, properly identified. See next page for sample output. The output format shown is merely a suggestion, but it's important to print neatly and identify items printed Example 1: sample input file int main() chare; int item; float x2b; c='h'; item =c+3; X2b = 5.67; Examplel Your output file should look something like this; screen output should contain the same information. Input file: See above. Output: Line Number Token Type Lexeme keyword int keyword main punctuation punctuation punctuation keyword char identifier punctuation keyword int identifier item punctuation keyword float identifier x2b punctuation identifier identifier punctuation SYMBOL TABLE Symbol (first line is line 1) Line Numbers (3, 6, ..) item x2b (4)